Latest Announcements

DeepSeek-OCR: Vision-Language Compression Meets Dynamic OCR

Published on 2025-11-19

Trending Models

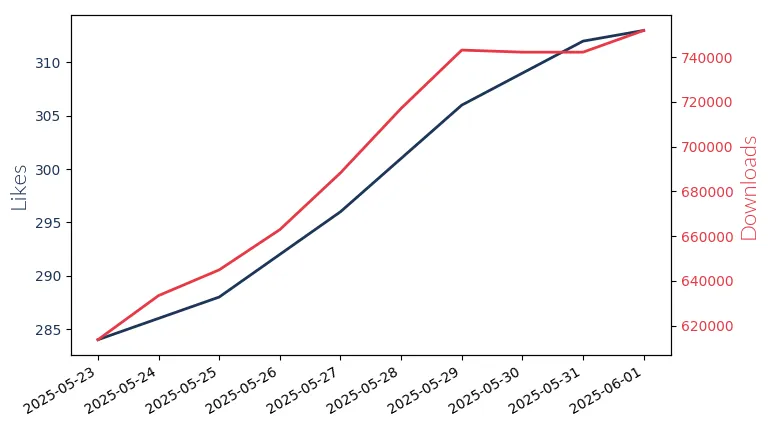

GPT-OSS 20B

GPT-OSS 20B: OpenAI's 20-billion-parameter model for low-latency, local applications. Monolingual.

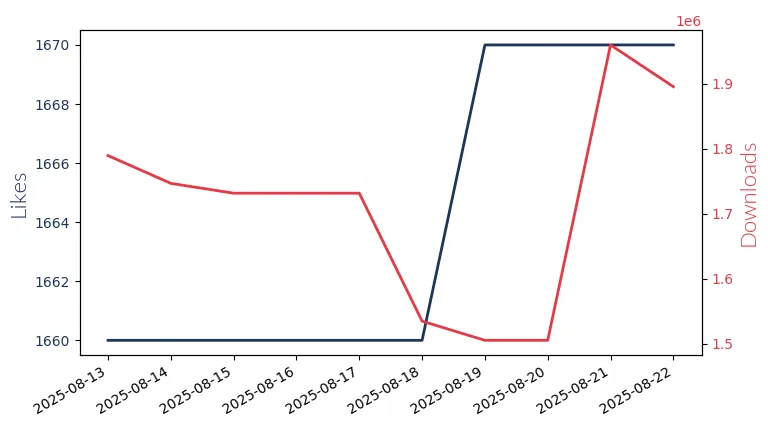

Llama3.1 8B Instruct

Llama3.1 8B Instruct by Meta Llama: an 8-billion-parameter multilingual model with a 128k context window that excels in assistant-like chat.

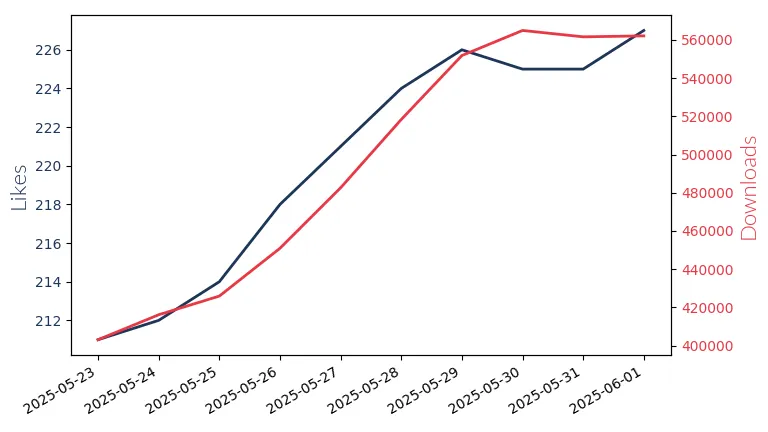

Qwen3 0.6B

Qwen3 0.6B: Alibaba's 0.6-billion-parameter model, strong at reasoning tasks, with a 32k context window.

Llama3.2 3B Instruct

Llama3.2 3B Instruct by Meta Llama Enterprise: a multilingual 3-billion-parameter model with a context length of 8k to 128k tokens, designed for assistant-like chat apps.

Qwen3 1.7B

Qwen3 1.7B: Alibaba's 1.7-billion-parameter model for enhanced reasoning & problem-solving in English.

Llama4 109B Instruct

Llama4 109B Instruct by Meta Llama Enterprise: a powerful multilingual large language model with 109 billion parameters, designed for commercial applications.

Gemma3 1B Instruct

Gemma3 1B by Google: a multilingual LLM with 1B parameters and a 128k/32k context length. Ideal for content creation & communication tasks.

Qwen3 4B

Qwen3 4B: Alibaba's 4-billion-parameter LLM for enhanced reasoning & logic tasks.

Gemma3N 4B Instruct

Gemma3N 4B Instruct by Google: a 4-billion-parameter LLM for creative communication. Supports English and excels at text generation, chatbot applications & summarization.

Llama2 7B

Llama2 7B: Meta's 7-billion-parameter monolingual model for assistant-style chat.

Qwen2.5 1.5B Instruct

Qwen2.5 1.5B Instruct: Alibaba's 1.5-billion-parameter model for multilingual long-text generation of up to 32k tokens.

Mistral Small3.2 24B Instruct

Mistral Small3.2 24B Instruct: a powerful 24-billion-parameter model by Mistral AI, designed for chat assistance with a context window of up to 128,000 tokens.