Latest Announcements

Deepseek OCR: Vision‑Language Compression Meets Dynamic OCR

Published on 2025-11-19

Trending Models

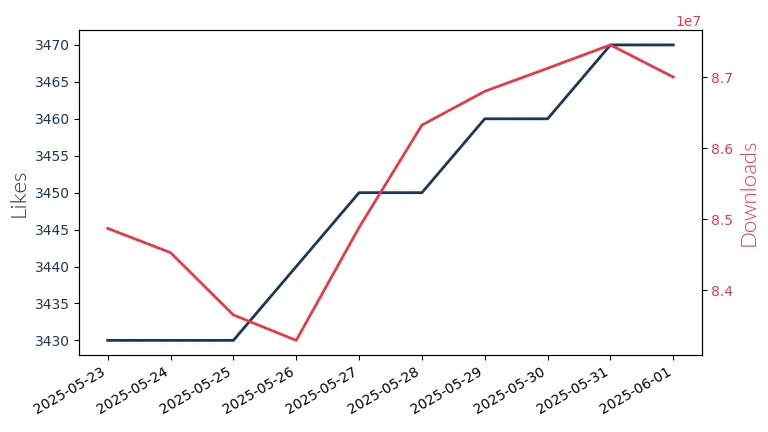

All Minilm 33M

All-MiniLM-33M by Sentence Transformers: A compact, monolingual model for efficient information retrieval.

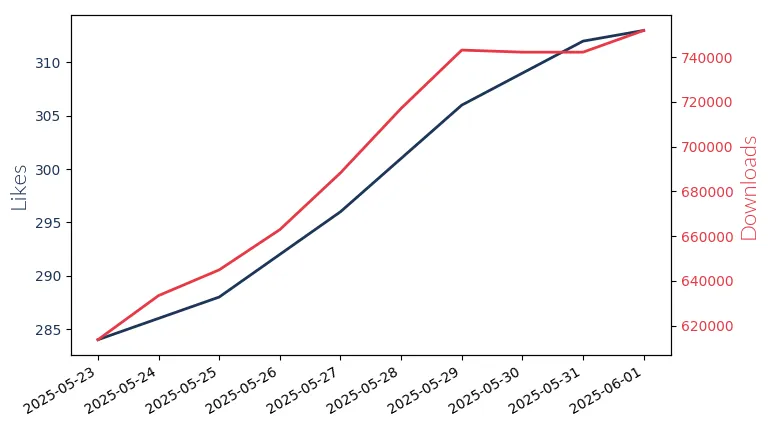

All Minilm 22M

All-MiniLM-22M by Sentence Transformers: A compact model for efficient information retrieval.

Bge M3 567M

Bge M3 567M by BAAI: A bilingual, 567M-parameter model for efficient information retrieval with an 8k context window.

Qwen3 0.6B

Qwen3 0.6B: Alibaba's 0.6-billion-parameter model for reasoning tasks, with a 32k context window.

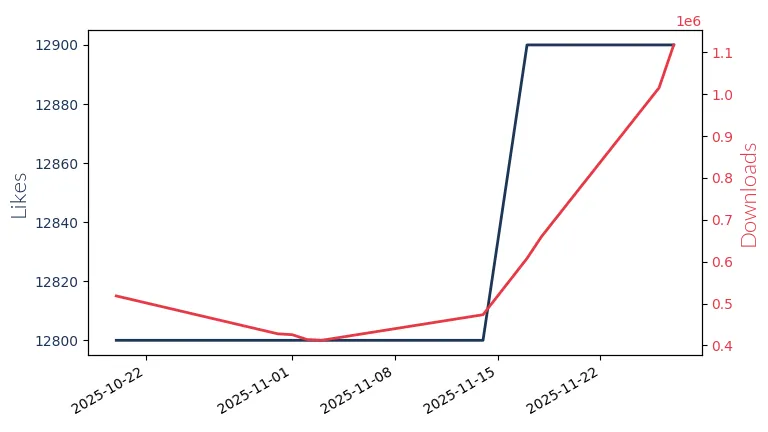

Deepseek R1 671B

Deepseek R1 671B: A bilingual large language model with 671 billion parameters. Supports context lengths up to 128k for advanced code generation and debugging tasks.

Llama3.1 8B Instruct

Llama3.1 8B Instruct by Meta Llama: An 8-billion-parameter, multilingual model with a 128k context window, suited to assistant-like chat.

Gemma3 27B Instruct

Gemma3 27B: Google's 27-billion-parameter LLM for creative content and communications. Supports context up to 128k tokens. Suited to text generation, chatbots, summarization, and image data extraction.

Qwen3 8B

Qwen3 8B: Alibaba's 8-billion-parameter LLM for reasoning and problem-solving. A monolingual model with context lengths up to 8k.

Gemma3 4B Instruct

Gemma3 4B by Google: A 4-billion-parameter model with a 128k/32k context length. Supports multiple languages for creative content generation, chatbots, text summarization, and image data extraction.

Llama3 Gradient 8B Instruct

Llama3 Gradient 8B by Meta Llama: An 8-billion-parameter, English-focused LLM with an 8k context window, suited to commercial and research use.

Llama3.2 3B Instruct

Llama3.2 3B Instruct by Meta Llama Enterprise: A multilingual, 3-billion-parameter model with a context length of 8k to 128k, designed for assistant-like chat apps.

Qwen3 4B

Qwen3 4B: Alibaba's 4-billion-parameter LLM for enhanced reasoning and logic tasks.