Aya 35B - Model Details

Aya 35B is a multilingual large language model developed by Cohere For AI, Cohere's non-profit research lab. It has 35B parameters and is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which permits non-commercial use with attribution. Aya 35B is the larger of the two Aya 23 variants (8B and 35B), both of which support 23 languages.

Description of Aya 35B

Aya 23, the model family to which Aya 35B belongs, is an open-weights research release of an instruction fine-tuned model with advanced multilingual capabilities. It pairs a highly performant pre-trained model from the Command family with the Aya Collection, resulting in a multilingual large language model that supports 23 languages: Arabic, Chinese (simplified & traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian, and Vietnamese.

Parameters & Context Length of Aya 35B

Aya 35B has 35B parameters, which places it among the larger models that can handle complex tasks but need significant computational resources. Its 8k-token context length bounds the combined size of the prompt and the generated output, so it can work with moderately long documents and conversations; memory and compute demands grow as more of that window is used. Together, the parameter count and context length trade performance against resource requirements.

- Parameter Size: 35B

- Context Length: 8k
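
The context length acts as a shared budget for prompt and output tokens. The following is a minimal sketch of that arithmetic, assuming "8k" means 8192 tokens (an assumption, not stated above) and that token counts are obtained elsewhere; the function names are hypothetical.

```python
# Assumption: "8k" is taken to mean 8192 tokens. Check the model's own
# configuration before relying on this value.
CONTEXT_LENGTH = 8192


def max_new_tokens(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt longer than the window leaves no room for output.
    return max(context_length - prompt_tokens, 0)


def fits(prompt_tokens: int, requested_new_tokens: int,
         context_length: int = CONTEXT_LENGTH) -> bool:
    """True if the prompt plus the requested output stays within the window."""
    return requested_new_tokens <= max_new_tokens(prompt_tokens, context_length)
```

For example, under this assumption a 6000-token prompt leaves room for 2192 generated tokens.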

Possible Intended Uses of Aya 35B

Aya 35B is designed for cross-lingual translation, text completion, and summarization across its 23 supported languages. Possible uses include translating content between languages such as Persian, Hindi, and Japanese, completing text in languages such as Ukrainian or Romanian, and summarizing documents in multiple languages. It may also suit creative writing, educational tools, content localization, and other tasks that need nuanced language understanding, but these uses have not been thoroughly tested and should be validated through experimentation.

- multilingual text generation

- cross-lingual translation

- text completion and summarization
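
As an illustration of the translation use case, the sketch below builds a single-turn chat message for a translation request and rejects languages outside the 23 listed in the description. The function name and prompt wording are hypothetical; the message list follows the common role/content chat format, and how it is passed to the model depends on the serving stack.

```python
# The 23 languages named in the model description.
SUPPORTED_LANGUAGES = {
    "Arabic", "Chinese", "Czech", "Dutch", "English", "French", "German",
    "Greek", "Hebrew", "Hindi", "Indonesian", "Italian", "Japanese",
    "Korean", "Persian", "Polish", "Portuguese", "Romanian", "Russian",
    "Spanish", "Turkish", "Ukrainian", "Vietnamese",
}


def build_translation_messages(text: str, source: str, target: str) -> list[dict]:
    """Build a one-message chat request asking for a translation.

    Hypothetical helper: the prompt wording is an illustration, not a
    format prescribed by the model's authors.
    """
    for language in (source, target):
        if language not in SUPPORTED_LANGUAGES:
            raise ValueError(f"{language!r} is not among the 23 supported languages")
    prompt = f"Translate the following text from {source} to {target}:\n\n{text}"
    return [{"role": "user", "content": prompt}]


messages = build_translation_messages("Good morning", "English", "Hindi")
```

Outputs produced from such prompts still need the evaluation described above before being relied on.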

Possible Applications of Aya 35B

Possible applications of Aya 35B include generating text in multiple languages, translating content between languages, and assisting with text completion. More specific examples are creating multilingual educational materials, facilitating cross-lingual communication, producing content for global audiences, summarizing documents in less commonly supported languages, and aiding language research. Each application must be thoroughly evaluated and tested before use.

- multilingual text generation

- cross-lingual translation

- text completion and summarization

- language research support

Quantized Versions & Hardware Requirements of Aya 35B

Aya 35B’s medium q4 version balances precision against performance and calls for a GPU with at least 24GB VRAM. That figure comes from hardware guidelines for models up to 24B parameters, which Aya 35B exceeds, so treat 24GB as a minimum rather than a comfortable target. System memory of at least 32GB RAM, adequate cooling, and a sufficient power supply also matter. Verify hardware compatibility before deployment.

- Available quantizations: q2, q3, q4, q5, q6, q8
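
To get a rough sense of how the quantization level affects memory, the sketch below estimates the size of the weights alone from the parameter count and a nominal bit width. It assumes each level uses exactly its nominal bits per weight (q4 = 4 bits, and so on); real quantized files typically use somewhat more, and the estimate excludes the KV cache, activations, and runtime overhead. The names are hypothetical.

```python
PARAMETERS = 35e9  # 35B parameters

# Assumption: nominal bits per weight for each quantization level.
NOMINAL_BITS = {"q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8}


def weight_memory_gb(quant: str, parameters: float = PARAMETERS) -> float:
    """Lower-bound estimate of weight storage in GB (1 GB = 1e9 bytes)."""
    bits = NOMINAL_BITS[quant]
    return parameters * bits / 8 / 1e9


for quant in NOMINAL_BITS:
    print(f"{quant}: ~{weight_memory_gb(quant):.1f} GB for weights alone")
```

Under these assumptions the q4 weights come to about 17.5 GB, which leaves limited headroom on a 24GB GPU once the context and runtime overhead are included.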

Conclusion

Aya 35B is a multilingual large language model with 35B parameters and an 8k context length, supporting 23 languages including Arabic, Chinese, and Russian. Its design emphasizes performance and versatility in cross-lingual applications, though deployment requires significant computational resources.

References

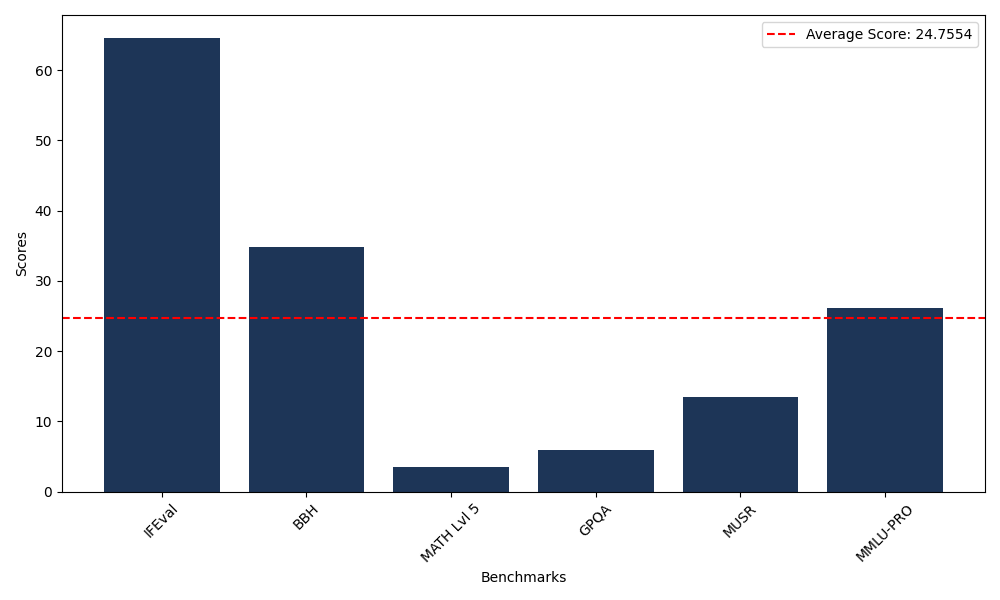

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 64.62 |
| BIG-Bench Hard (BBH) | 34.86 |
| MATH Level 5 (MATH Lvl 5) | 3.47 |
| Graduate-Level Google-Proof Q&A (GPQA) | 5.93 |
| Multistep Soft Reasoning (MuSR) | 13.47 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 26.18 |
