Aya Expanse 32B - Model Details

Aya Expanse 32B is a large language model developed by Cohere For AI (now Cohere Labs), a community-driven initiative. It has 32 billion parameters and is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license.

Description of Aya Expanse 32B

Aya Expanse 32B is an open-weight research release developed by Cohere Labs. It combines a highly performant pre-trained model from the Command family with innovations in data arbitrage, multilingual preference training, safety tuning, and model merging. It supports 23 languages and is optimized for multilingual tasks, with a 128K-token context length. The model is released for research and community-based applications under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which keeps the weights accessible while prohibiting commercial use. This makes it suitable for complex, language-diverse tasks in academic and non-commercial settings.

Parameters & Context Length of Aya Expanse 32B

Aya Expanse 32B has 32 billion parameters, which places it in the large model category: it can handle complex tasks but requires significant computational resources. Its 128,000-token context length is in the very long context range, which suits extensive texts, although memory and compute use grow with the amount of context actually used. These specifications make it a fit for research and specialized applications where depth and scale matter.

- Parameter Size: 32B

- Context Length: 128K tokens

Possible Intended Uses of Aya Expanse 32B

Aya Expanse 32B is a multilingual large language model designed for multilingual text generation and translation, with possible applications in community-driven writing assistance, cross-lingual collaboration, and academic research. Its support for 23 languages suggests uses such as creating localized content, analyzing multilingual datasets, or exploring language-specific patterns. The model’s 32 billion parameters and 128K-token context length could also support generating extended narratives, handling complex queries, or experimenting with model merging techniques. Each of these uses should be evaluated thoroughly against the specific goals and constraints of the project.

- multilingual text generation and translation

- community-contributed use cases like multilingual writing assistance

- research and development in multilingual language models
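As an illustration of the translation use case, the following is a minimal sketch rather than an official recipe. It assumes the model is loaded through the Hugging Face `transformers` library (with `accelerate` installed for `device_map="auto"`) and that the weights are published under the model ID `CohereLabs/aya-expanse-32b`; the helper names `build_translation_messages` and `generate_reply` are hypothetical and chosen only for this example.

```python
def build_translation_messages(text: str, target_language: str) -> list:
    """Build a single-turn chat request asking for a translation."""
    prompt = f"Translate the following text into {target_language}:\n\n{text}"
    return [{"role": "user", "content": prompt}]


def generate_reply(messages, model_id="CohereLabs/aya-expanse-32b", max_new_tokens=200):
    """Run one chat turn. The model ID is an assumption; adjust it as needed."""
    # Imported lazily so the prompt helper above works without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # device_map="auto" relies on the accelerate package being installed.
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype="auto"
    )

    # Format the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        messages,
        tokenize=True,
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    prompt_len = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_len:], skip_special_tokens=True)


if __name__ == "__main__":
    msgs = build_translation_messages("Good morning, how are you?", "Turkish")
    print(msgs)
    # Uncomment on a machine with enough GPU memory for the 32B model:
    # print(generate_reply(msgs))
```

The prompt wording is arbitrary; any instruction that names the target language should work in the same way, and output quality should be checked per language before relying on it.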

Possible Applications of Aya Expanse 32B

Beyond the intended uses above, Aya Expanse 32B could be applied to cross-lingual content creation, where its support for 23 languages allows texts to be generated or translated across diverse linguistic contexts. Its 32 billion parameters and 128K-token context length might also suit analyzing long-form multilingual documents or experimenting with model merging techniques. Community-driven writing tools could use its multilingual capabilities to assist users in non-commercial, collaborative projects, and academic research could use it to study and improve multilingual language models. As with any use, these applications require thorough evaluation against specific needs.


Quantized Versions & Hardware Requirements of Aya Expanse 32B

In its q4 quantization, Aya Expanse 32B is best run on a GPU with at least 24GB of VRAM. At a nominal 4 bits per weight, 32 billion parameters occupy roughly 16GB on their own, before the KV cache and runtime overhead are counted. Systems at the lower end of the 16GB–32GB VRAM range may therefore need to offload part of the model to system RAM or use a lower-bit quantization, and long prompts or complex queries increase memory use further. Multi-GPU setups can split the model across devices, and 32GB or more of system RAM is recommended for stability.

- fp16, q2, q3, q4, q5, q6, q8
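The memory footprint of each version listed above can be approximated with simple arithmetic: parameters times bits per weight, divided by 8. The sketch below is a rough estimate only. It assumes nominal bit widths for each label (real quantized formats store extra scaling metadata, so actual files are somewhat larger), and it ignores the KV cache and runtime overhead. The names `NOMINAL_BITS`, `weight_memory_gb`, and `estimate_all` are hypothetical.

```python
# Rough weight-memory estimate per version of a 32B-parameter model.
PARAMS = 32e9  # 32 billion parameters, as stated in the model description

# Nominal bits per weight for each label (assumption: actual quantized
# formats add per-block metadata and usually need slightly more).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return n_params * bits_per_weight / 8 / 1e9


def estimate_all(n_params: float = PARAMS) -> dict:
    """Map each version label to its approximate weight size in GB."""
    return {name: weight_memory_gb(n_params, bits) for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, gb in estimate_all().items():
        print(f"{name:>5}: ~{gb:.0f} GB for weights alone")
```

Under these assumptions the fp16 weights alone exceed the memory of a single consumer GPU, which is why the quantized versions are the practical choice for local use.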

Conclusion

Aya Expanse 32B is a large language model developed by Cohere For AI (now Cohere Labs) with 32 billion parameters and a 128K-token context length, designed for multilingual tasks across 23 languages. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which makes it suitable for research and community-driven applications but not for commercial use. Running it requires substantial hardware, and quantized versions reduce the memory needed.

References

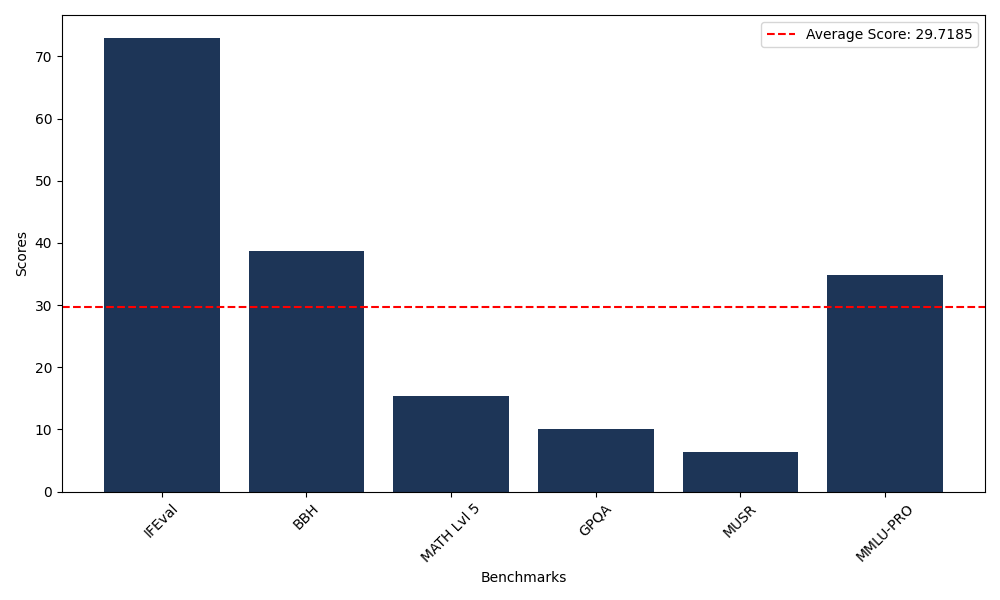

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 73.02 |
| BIG-Bench Hard (BBH) | 38.71 |
| Mathematical Reasoning Test (MATH Lvl 5) | 15.33 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.07 |
| Multistep Soft Reasoning (MuSR) | 6.41 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 34.78 |
