Aya Expanse 8B - Model Details

Aya Expanse 8B is a large language model developed by Cohere For AI, a community-driven initiative. With 8 billion parameters, it targets a range of natural language processing tasks. The model is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which permits non-commercial use with proper attribution.

Description of Aya Expanse 8B

Aya Expanse 8B is an open-weight research release designed to advance multilingual language model capabilities. It builds on the pre-trained Command family of models and incorporates a year’s worth of dedicated research from Cohere Labs (formerly Cohere For AI), including data arbitrage, multilingual preference training, safety tuning, and model merging. The result is a multilingual large language model intended to handle diverse linguistic tasks with improved accuracy and safety. Its emphasis on robustness across languages makes it a candidate for applications that serve a global audience.

Parameters & Context Length of Aya Expanse 8B

Aya Expanse 8B has 8 billion parameters, placing it in the mid-scale range of open-weight LLMs and balancing performance against resource cost for tasks of moderate complexity. Its 8k context length accommodates longer inputs than short-context models can handle, which suits tasks that need extended information retention, while remaining less resource-intensive than very long context windows. Together, these properties support a range of applications without excessive computational demands.

- Parameter Size: 8b

- Context Length: 8k
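
As a rough illustration of what an 8k window means in practice, the sketch below checks whether a prompt plus a generation budget fits inside it. It assumes "8k" means 8,192 tokens and that token counts come from the model's own tokenizer. The function names and example numbers are hypothetical and not part of any published API.

```python
CONTEXT_WINDOW = 8192  # assumption: "8k" taken as 8,192 tokens


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the prompt plus the requested output fits in the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= window


def remaining_budget(prompt_tokens: int, window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for generation after the prompt (never negative)."""
    return max(0, window - prompt_tokens)


if __name__ == "__main__":
    # Hypothetical example: a 6,000-token document and a 2,000-token reply.
    print(fits_in_context(6000, 2000))
    print(remaining_budget(6000))
```

A check like this is most useful before sending long documents for translation or summarization, where an oversized prompt would otherwise be truncated or rejected by the runtime.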

Possible Intended Uses of Aya Expanse 8B

Aya Expanse 8B is a multilingual large language model with 8 billion parameters and an 8k context length, suited to text generation, translation, and cross-language communication. Its multilingual coverage, which includes Japanese, Russian, Italian, and many other languages, points to use cases such as translating documents, creating localized content, or assisting with multilingual customer interactions. These uses are candidates rather than validated deployments: each needs thorough testing against the specific needs and constraints of the application.

- multilingual text generation

- language translation

- customer support in multiple languages

Possible Applications of Aya Expanse 8B

With 8 billion parameters and an 8k context length, Aya Expanse 8B is a candidate for tasks that require cross-lingual flexibility. It could generate text in multiple languages, for example when creating content for international audiences or adapting materials to a specific linguistic context. It could also translate between languages, though its accuracy and handling of cultural nuance would need evaluation. It might additionally support customer service by offering multilingual assistance to users. Each application must be thoroughly evaluated and tested against its specific requirements before use.

- multilingual text generation

- language translation

- customer support in multiple languages

Quantized Versions & Hardware Requirements of Aya Expanse 8B

The q4 quantization of Aya Expanse 8B, a mid-range option among the available variants, calls for a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of mid-range hardware. It reduces memory demands relative to higher-precision formats such as fp16 while keeping reasonable accuracy. At least 32GB of system RAM is recommended for smooth execution. The q4 variant suits users who want a practical trade-off between speed and resource usage.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
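
To make the memory trade-off between these variants concrete, the following sketch estimates weight storage alone from the parameter count and a nominal bit width per weight. It assumes exactly 8e9 parameters and treats each tag as its nominal width (q4 as 4 bits, and so on). Real quantized files are somewhat larger because they also store scales and metadata, and a running model needs further headroom for the KV cache and activations. The names `NOMINAL_BITS` and `weight_gb` are hypothetical.

```python
PARAMS = 8_000_000_000  # assumption: "8b" taken as 8e9 for a round estimate

# Nominal bits per weight for each listed variant (an assumption; real
# quantized files use slightly more bits to store scales and metadata).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(tag: str, params: int = PARAMS) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * NOMINAL_BITS[tag] / 8 / 1e9


if __name__ == "__main__":
    for tag in NOMINAL_BITS:
        print(f"{tag:>4}: ~{weight_gb(tag):.1f} GB of weights")
```

Under these assumptions the weights take roughly 16 GB at fp16 and roughly 4 GB at q4, a lower bound on memory use rather than a full hardware requirement.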

Conclusion

Aya Expanse 8B is an 8-billion-parameter large language model from a community-driven research initiative, designed for multilingual tasks and released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license. It supports a wide range of languages and builds on research in data arbitrage, multilingual preference training, safety tuning, and model merging to improve text generation, translation, and cross-lingual applications.

References

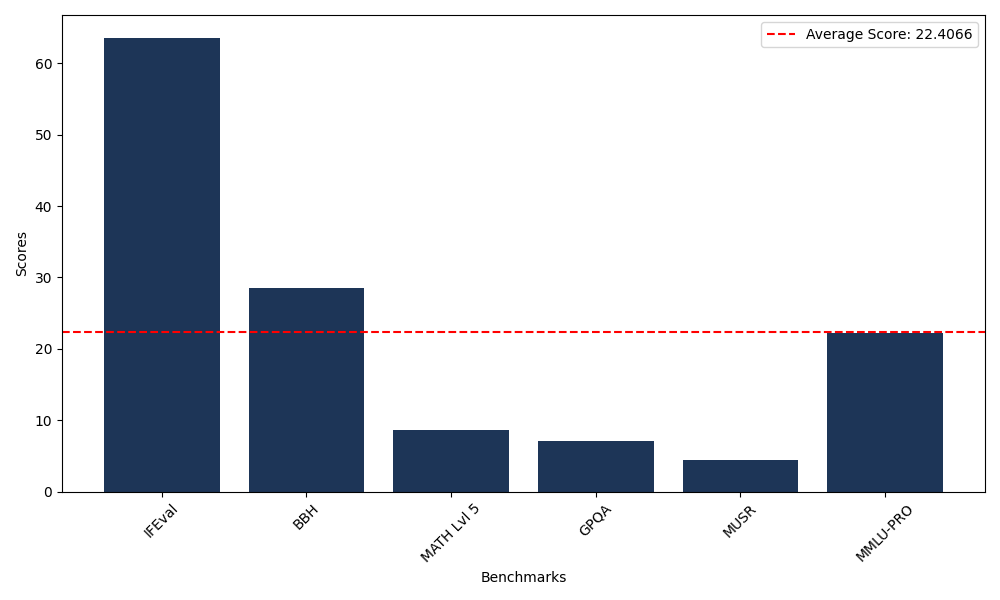

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 63.59 |
| BIG-Bench Hard (BBH) | 28.52 |
| MATH, Level 5 problems (MATH Lvl 5) | 8.61 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.05 |
| Multistep Soft Reasoning (MuSR) | 4.41 |
| Massive Multitask Language Understanding (MMLU-PRO) | 22.26 |
