Codebooga 34B - Model Details

Codebooga 34B is a large language model with 34 billion parameters, designed for code-related tasks. It exposes customizable parameters that can be adapted to specific programming needs. The model has no specific maintainer, and no formal license has been disclosed. Because it merges two code-focused models, it combines capabilities for code generation and analysis, which makes it a versatile tool for developers and researchers.

Description of Codebooga 34B

Codebooga 34B is a merged model that combines Phind-CodeLlama-34B-v2 and WizardCoder-Python-34B-V1.0. The merge was performed with the BlockMerge Gradient script, using tailored gradient weight configurations for key tensor groups such as lm_head, embed_tokens, self_attn, mlp, and layernorm. The resulting model is optimized for code-related tasks and is compatible with the Alpaca prompt format, which makes it adaptable to programming and code generation workflows.
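The internals of the BlockMerge Gradient script are not described here, so the following is only a minimal sketch of the general idea behind a gradient merge, under stated assumptions: each tensor group gets a list of anchor weights, the weight for a given layer is interpolated linearly across the network's depth, and the two models' tensors are averaged with that weight. The function names `gradient_weight` and `blend`, the anchor values, and the convention that the weight is the share taken from the first model are all hypothetical, not the script's actual code or configuration.

```python
from typing import List


def gradient_weight(anchors: List[float], layer_idx: int, num_layers: int) -> float:
    """Interpolate a merge weight for one layer from anchors spread evenly
    between the first and last layer (hypothetical scheme)."""
    if not anchors:
        raise ValueError("at least one anchor is required")
    if len(anchors) == 1 or num_layers == 1:
        return anchors[0]
    # Relative depth of this layer, from 0.0 (first) to 1.0 (last).
    pos = layer_idx / (num_layers - 1)
    # Locate the pair of anchors that bracket this depth, then interpolate.
    scaled = pos * (len(anchors) - 1)
    lo = min(int(scaled), len(anchors) - 2)
    frac = scaled - lo
    return anchors[lo] * (1 - frac) + anchors[lo + 1] * frac


def blend(a: List[float], b: List[float], w: float) -> List[float]:
    """Element-wise weighted average of two flattened tensors of equal size.

    ASSUMPTION: `w` is the share taken from `a` (model 1); `1 - w` comes from `b`.
    """
    if len(a) != len(b):
        raise ValueError("tensors must have the same number of elements")
    return [w * x + (1 - w) * y for x, y in zip(a, b)]


# Hypothetical anchors: attention weights shift from model 1 toward model 2 with depth.
self_attn_anchors = [0.75, 0.25]
num_layers = 4
for layer in range(num_layers):
    w = gradient_weight(self_attn_anchors, layer, num_layers)
    merged = blend([1.0, 2.0], [3.0, 4.0], w)  # toy 2-element "tensors"
    print(f"layer {layer}: w={w:.3f} merged={merged}")
```

Varying the weight with depth, rather than using one global ratio, lets early and late layers lean toward different parent models.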

Parameters & Context Length of Codebooga 34B

Codebooga 34B has 34 billion parameters, which places it in the large-model category: it can handle complex code tasks but needs substantial computational resources. Its 16k-token context length lets it work with long code sequences and detailed instructions, which suits intricate programming challenges but increases memory and processing demands. Its design aims to balance performance on code-related workflows against these resource requirements.

- Parameter Size: 34B

- Context Length: 16k
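To make the memory cost of the 16k context more concrete, the sketch below estimates the key/value cache that a transformer keeps for every token in its context. The layer count, KV-head count, and head dimension are assumptions taken from the configuration commonly reported for Code Llama 34B, on which both merged models are built; they should be checked against the model's own configuration. "16k" is also assumed to mean 16,384 tokens.

```python
# Estimate the fp16 key/value cache needed for a full 16k-token context.
# ASSUMPTION: the architecture constants below are the values commonly reported
# for Code Llama 34B; verify them against the model's own configuration.
NUM_LAYERS = 48          # transformer blocks (assumed)
NUM_KV_HEADS = 8         # key/value heads under grouped-query attention (assumed)
HEAD_DIM = 128           # dimension of each attention head (assumed)
CONTEXT_TOKENS = 16_384  # "16k", assumed to mean 16,384 tokens
GIB = 1024 ** 3


def kv_cache_bytes(tokens: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions.

    The leading factor of 2 covers keys plus values; every layer stores one
    vector of HEAD_DIM values per KV head for each cached token.
    """
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * bytes_per_value * tokens


per_token = kv_cache_bytes(1)
full_context = kv_cache_bytes(CONTEXT_TOKENS)
print(f"KV cache per token: {per_token / 1024:.0f} KiB")               # 192 KiB
print(f"KV cache at {CONTEXT_TOKENS} tokens: {full_context / GIB:.1f} GiB")  # 3.0 GiB
```

Under these assumptions the cache reaches 3.0 GiB at 16-bit precision, on top of the weights themselves. Because the cache grows linearly with the number of tokens, halving the context halves it.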

Possible Intended Uses of Codebooga 34B

Codebooga 34B is designed for code-related tasks, and its possible uses include code generation, debugging, and programming assistance. Its size and code focus suggest it could help automate code writing, identify errors in a codebase, or offer real-time guidance during software development. These uses still need thorough investigation to confirm that they work well in specific scenarios. Its customizable parameters and Alpaca prompt compatibility may also make it adaptable to diverse programming workflows, though practical implementation details remain to be explored.

- code generation

- debugging and troubleshooting

- programming assistance
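Because the model is described as compatible with the Alpaca prompt format, programming-assistance requests would normally be wrapped in that template. The following is a minimal sketch of a prompt builder. The preamble and the `### Instruction:` / `### Response:` markers follow the standard Alpaca template; the function name `build_alpaca_prompt` and the way earlier turns are chained for multi-turn use are assumptions, and exact whitespace may differ between front-ends.

```python
from typing import List, Optional, Tuple

# Standard Alpaca preamble.
ALPACA_PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def build_alpaca_prompt(instruction: str,
                        history: Optional[List[Tuple[str, str]]] = None) -> str:
    """Wrap an instruction (plus optional earlier turns) in Alpaca format.

    ASSUMPTION: multi-turn history is chained as repeated Instruction/Response
    pairs; this is a hypothetical convention, not a documented requirement.
    """
    parts = [ALPACA_PREAMBLE, ""]
    for past_instruction, past_response in history or []:
        parts += ["### Instruction:", past_instruction, "",
                  "### Response:", past_response, ""]
    # Leave the final response open so the model completes it.
    parts += ["### Instruction:", instruction, "", "### Response:", ""]
    return "\n".join(parts)


prompt = build_alpaca_prompt("Find the bug in: def add(a, b): return a - b")
print(prompt)
```

The returned string ends with the `### Response:` marker and a newline, so whatever the model generates next is its answer.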

Possible Applications of Codebooga 34B

Possible applications of Codebooga 34B likewise center on code generation, debugging, and programming assistance, such as automating repetitive coding tasks, identifying errors in code, or providing real-time guidance during development. Its use could also extend to customizing code templates or adapting to specific programming languages, and its Alpaca prompt compatibility may make it useful in collaborative coding environments. None of these applications has been confirmed in practice, so each should be evaluated against specific needs and constraints before deployment.

- code generation

- debugging and troubleshooting

- programming assistance

Quantized Versions & Hardware Requirements of Codebooga 34B

The medium q4 version of Codebooga 34B is listed as requiring multiple GPUs with a combined total of at least 48GB VRAM for optimal performance, plus 32GB of system memory and adequate cooling. Quantizing to 4 bits reduces the memory needed to hold the 34 billion parameters, trading some precision for speed. Because actual requirements depend on the use case and context length, users should check compatibility with their own hardware carefully.

- Available versions: fp16, q2, q3, q4, q5, q6, q8
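A rough sense of how much memory each listed version needs for its weights alone comes from multiplying the parameter count by the bits stored per weight. The sketch below assumes nominal bit widths (16 for fp16, n for qn). Real quantization formats also store per-block scale factors, so actual files run somewhat larger, and the KV cache and runtime buffers come on top. Under these assumptions the q4 weights come to about 17 GB. The name `weight_gb` is hypothetical.

```python
# Approximate weight-only footprint of each listed version of Codebooga 34B.
# ASSUMPTION: nominal bits per weight; real quantized formats add per-block
# scales, and KV cache plus runtime buffers are not included.
PARAMS = 34e9  # parameter count from the model card
VERSIONS = {"fp16": 16, "q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8}


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in VERSIONS.items():
    print(f"{name:>4}: ~{weight_gb(PARAMS, bits):.1f} GB")
```

Lower bit widths shrink the weights proportionally, which is why the smaller versions trade output quality for the ability to run on less memory.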

Conclusion

Codebooga 34B is a 34-billion-parameter language model for code-related tasks, built by merging Phind-CodeLlama-34B-v2 and WizardCoder-Python-34B-V1.0 with the BlockMerge Gradient script. It offers a 16k-token context length and is compatible with the Alpaca prompt format, making it suitable for advanced programming workflows, although it requires significant computational resources.

References

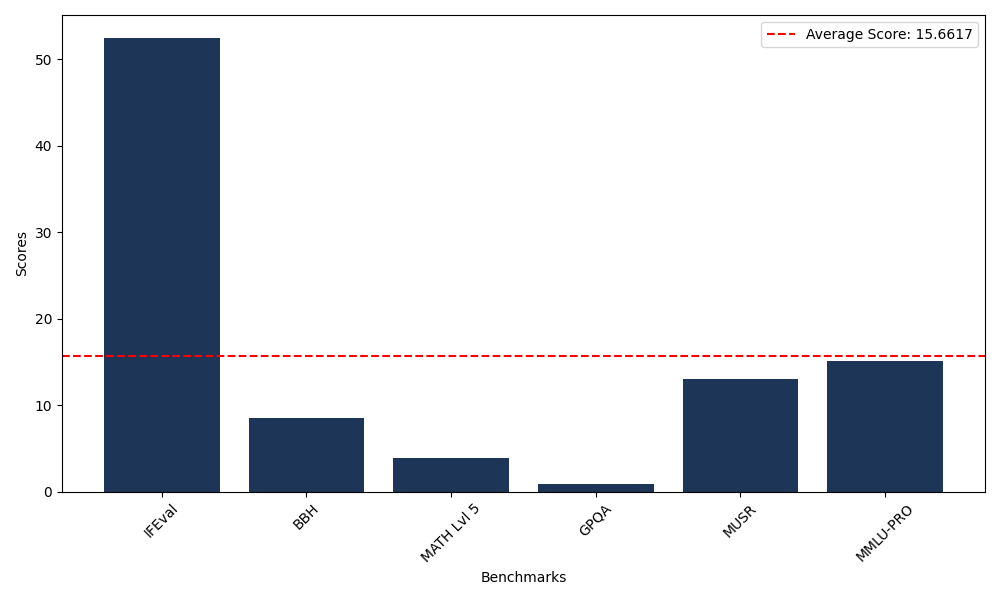

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 52.50 |
| Big-Bench Hard (BBH) | 8.56 |
| Competition Mathematics, Level 5 (MATH Lvl 5) | 3.93 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.89 |
| Multistep Soft Reasoning (MuSR) | 12.98 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 15.11 |
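The table does not report an aggregate score. As a simple illustration, the sketch below computes an unweighted mean of the six listed scores. Treating the benchmarks as equally weighted is an assumption; a leaderboard may aggregate them differently.

```python
# Scores as listed in the benchmark table; equal weighting is an assumption.
scores = {
    "IFEval": 52.50,
    "BBH": 8.56,
    "MATH Lvl 5": 3.93,
    "GPQA": 0.89,
    "MuSR": 12.98,
    "MMLU-PRO": 15.11,
}

average = sum(scores.values()) / len(scores)
print(f"Unweighted mean: {average:.2f}")  # Unweighted mean: 15.66
```

The single IFEval result accounts for more than half of the total, so the mean mostly reflects instruction following rather than reasoning or math.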
