Codestral 22B - Model Details

Codestral 22B is a large language model developed by Mistral AI with 22 billion parameters. It is released under the Mistral AI Non-Production License (Mistral-AI-NPL) and is designed to generate and complete code across many programming languages.

Description of Codestral 22B

Codestral-22B-v0.1 is a large language model trained on a diverse dataset covering 80+ programming languages, including Python, Java, C, C++, JavaScript, and Bash. It can be used in two primary modes. In instruct mode, it answers questions about code, generates code, and explains concepts. In Fill-in-the-Middle (FIM) mode, it predicts the tokens between a given prefix and suffix, which is particularly useful for code completion during software development. The model is accessible through Mistral Inference and Hugging Face Transformers, supporting both encoding and decoding. Its design emphasizes versatility across code generation and development workflows.
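The FIM mode can be illustrated with a minimal, framework-free sketch. It assumes the prompt places the suffix before the prefix, each marked by a sentinel string; the strings `[PREFIX]` and `[SUFFIX]` and the helpers `build_fim_prompt` and `splice_completion` are hypothetical placeholders. In practice, the tokenizer that ships with the model (via Mistral Inference or Transformers) should produce the real control tokens.

```python
# Hypothetical placeholders for the tokenizer's FIM control tokens. The real
# tokens and their ordering come from the model's tokenizer; this layout is an
# assumption made for illustration only.
PREFIX_TOKEN = "[PREFIX]"
SUFFIX_TOKEN = "[SUFFIX]"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Assemble a FIM prompt, assuming a suffix-first layout."""
    return f"{SUFFIX_TOKEN}{suffix}{PREFIX_TOKEN}{prefix}"


def splice_completion(prefix: str, middle: str, suffix: str) -> str:
    """Place the predicted middle between the original prefix and suffix."""
    return prefix + middle + suffix


if __name__ == "__main__":
    prefix = "def add(a, b):\n    "
    suffix = "\n\nprint(add(2, 3))\n"
    print(repr(build_fim_prompt(prefix, suffix)))
    # Stand-in for the model's output; no model is called in this sketch.
    middle = "return a + b"
    print(splice_completion(prefix, middle, suffix))
```

The model only ever generates the middle. The editor or caller stitches it back between the text it already has, which is why the splice step is kept separate from prompt construction.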

Parameters & Context Length of Codestral 22B

With 22 billion parameters, Codestral 22B falls in the large-model category: it is capable on complex tasks but resource-intensive to run. Its 32k-token context window lets it process long inputs, such as large source files, at the cost of additional memory and compute. The design trades running cost for capability, making the model suited to intricate coding tasks on hardware with significant computational power.

- Parameter Size: 22B – large models offer robust performance on complex tasks but require substantial resources.

- Context Length: 32k tokens – long contexts enable handling extended inputs but increase computational demands.
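Because the prompt and the generated tokens share the same window, a long file combined with a long answer can overflow it. The following is a minimal budget check. It assumes that "32k" means 32,768 tokens and that token counts come from the model's own tokenizer (not shown). `fits_in_context` and `max_generation_budget` are hypothetical helpers.

```python
# Assumption: "32k" is read here as 32,768 tokens.
CONTEXT_LENGTH = 32_768


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Return True if the prompt plus the requested generation fits in the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= context_length


def max_generation_budget(prompt_tokens: int,
                          context_length: int = CONTEXT_LENGTH) -> int:
    """Tokens left for generation after the prompt (0 if the prompt already overflows)."""
    return max(0, context_length - prompt_tokens)


if __name__ == "__main__":
    print(fits_in_context(30_000, 2_000))   # 32,000 tokens total
    print(fits_in_context(31_000, 2_000))   # 33,000 tokens total
    print(max_generation_budget(30_000))
```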

Possible Intended Uses of Codestral 22B

Codestral 22B is a large language model designed for code-related tasks. Possible uses include answering questions about code snippets (for example explaining, documenting, refactoring, or debugging them) and generating code from specific instructions as part of software development workflows. Its Fill-in-the-Middle (FIM) mode could also support predicting the tokens between a prefix and a suffix, which may aid automated code completion or pattern recognition. These uses need further evaluation to confirm their effectiveness and suitability for specific scenarios.

- Answering questions about code snippets – possible applications in explaining, documenting, or refactoring code.

- Generating code based on instructions – possible uses for creating code snippets or implementing features.

- Predicting middle tokens for software tasks – possible applications in code completion or pattern analysis.
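For the instruct mode, a request is typically expressed as a list of chat messages. The sketch below assumes the role/content dictionary format accepted by Hugging Face chat templates (`tokenizer.apply_chat_template`). The `TASKS` templates and the `build_code_question` helper are hypothetical. They only show how the question types listed above could be turned into prompts.

```python
from typing import Dict, List

# Hypothetical task templates; the wording is illustrative, not an official prompt.
TASKS = {
    "explain": "Explain what the following code does.",
    "document": "Write a docstring for the following code.",
    "refactor": "Refactor the following code for readability without changing its behavior.",
    "debug": "Find and fix the bug in the following code.",
}


def build_code_question(task: str, code: str) -> List[Dict[str, str]]:
    """Build a single-turn chat request in the role/content message format."""
    if task not in TASKS:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASKS)}")
    content = f"{TASKS[task]}\n\n{code}"
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    snippet = "def mean(xs):\n    return sum(xs) / len(xs)"
    messages = build_code_question("explain", snippet)
    print(messages[0]["role"])
    print(messages[0]["content"])
```

Keeping the task wording in a table separates prompt phrasing from the calling code, so templates can be adjusted after evaluation without touching the request logic.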

Possible Applications of Codestral 22B

Possible applications of Codestral 22B include generating code snippets from user instructions, which could streamline software development workflows, and explaining or refactoring code by answering questions about specific snippets. The Fill-in-the-Middle (FIM) mode could enable tasks such as predicting tokens within code patterns, which may aid automated code completion. Each application must be thoroughly evaluated and tested against its specific use case before use.

- Generating code based on instructions – possible use for creating code snippets or implementing features.

- Answering questions about code snippets – possible application for explaining documentation or refactoring.

- Predicting middle tokens for software tasks – possible use in code completion or pattern analysis.

- Supporting software development workflows – possible benefit for tasks requiring code generation or analysis.
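In an editor integration, FIM completion usually begins by cutting the open file at the cursor. The following is a minimal sketch that uses a character budget as a rough stand-in for the model's token limit. `split_at_cursor` is a hypothetical helper, and the resulting prefix and suffix would then be passed to whatever FIM interface is in use.

```python
from typing import Tuple


def split_at_cursor(source: str, cursor: int, max_chars: int = 4_000) -> Tuple[str, str]:
    """Split a buffer into (prefix, suffix) around the cursor for a FIM request.

    Each side is trimmed to at most max_chars characters, keeping the text
    closest to the cursor. Characters are only a crude proxy for tokens.
    """
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor must lie within the buffer")
    if max_chars < 0:
        raise ValueError("max_chars must be non-negative")
    prefix = source[:cursor]
    suffix = source[cursor:]
    # Keep the end of the prefix and the start of the suffix, nearest the cursor.
    return prefix[max(0, len(prefix) - max_chars):], suffix[:max_chars]


if __name__ == "__main__":
    buffer = "import math\n\ndef area(r):\n    \n\nprint(area(2.0))\n"
    cursor = buffer.index("    \n") + 4  # inside the empty function body
    prefix, suffix = split_at_cursor(buffer, cursor, max_chars=20)
    print(repr(prefix))
    print(repr(suffix))
```

Trimming from the far ends keeps the code immediately around the cursor, which is usually the most relevant context for the missing middle.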

Quantized Versions & Hardware Requirements of Codestral 22B

The medium q4 quantization of Codestral 22B requires a GPU with at least 24 GB of VRAM (e.g., RTX 3090 Ti, A100) and a system with 32 GB of RAM for optimal performance. This version balances precision and efficiency, making it suitable for developers with mid-range hardware. Additional considerations include adequate cooling and a power supply capable of supporting the GPU.

- Quantized versions: q2, q3, q4, q5, q6, q8
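A rough way to relate quantization level to memory is to multiply the parameter count by the bits per weight. The sketch below assumes a nominal 22 billion parameters and nominal bit widths. Real quantization formats add overhead for scales and metadata, and inference also needs memory for the KV cache and activations, so these figures are lower bounds. `weight_memory_gb` is a hypothetical helper.

```python
PARAMETERS = 22_000_000_000  # nominal 22B; the exact count may differ slightly

# Nominal bits per weight; real quantization formats add overhead for scales.
BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params: int, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS.items():
        print(f"{name:>4}: ~{weight_memory_gb(PARAMETERS, bits):.1f} GB")
```

At 4 bits per weight, the weights alone come to about 11 GB, which leaves headroom on a 24 GB GPU for the KV cache and runtime overhead. At 16 bits, they need about 44 GB.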

Conclusion

Codestral 22B is a large language model developed by Mistral AI with 22 billion parameters, designed for code generation and software development tasks. It is released under the Mistral AI Non-Production License (Mistral-AI-NPL) and supports an instruct mode and a Fill-in-the-Middle (FIM) mode for diverse coding applications.

References

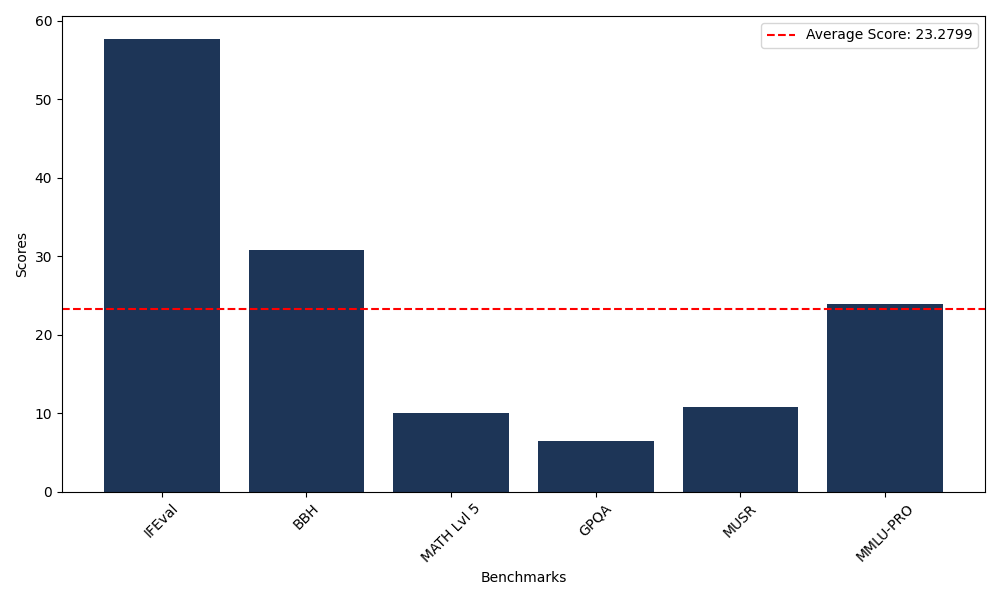

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 57.72 |
| Big Bench Hard (BBH) | 30.74 |
| Mathematical Reasoning Test (MATH Lvl 5) | 10.05 |
| Graduate-Level Google-Proof Q&A (GPQA) | 6.49 |
| Multistep Soft Reasoning (MuSR) | 10.74 |
| Massive Multitask Language Understanding (MMLU-PRO) | 23.95 |
