Command R 35B - Model Details

Command R 35B, developed by Cohere For AI, is a large language model with 35 billion parameters. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license and is optimized for conversational interaction and long-context tasks such as retrieval-augmented generation (RAG) and external tool use.

Description of Command R 35B

Command R 35B, a 35 billion parameter large language model, is optimized for reasoning, summarization, question answering, and multilingual generation. It supports grounded generation through RAG (retrieval-augmented generation) and can interact with code. Designed for tasks requiring contextual understanding, document-based responses, and tool integration, it offers non-quantized and quantized (8-bit/4-bit) versions to balance performance and efficiency.
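
To illustrate what grounded generation means in practice, the following minimal sketch retrieves the most relevant passages for a question and assembles a prompt that asks for an answer citing those passages by id. The word-overlap scoring, the function names, and the prompt wording are all assumptions made for this example; this is not Cohere's actual RAG prompt template, and a real system would use a proper retriever.

```python
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"\w+", text.lower()))


def overlap_score(query: str, document: str) -> int:
    """Toy relevance score: number of word occurrences shared by query and document."""
    return sum((tokenize(query) & tokenize(document)).values())


def build_grounded_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Pick the top_k highest-scoring documents and wrap them in a citation-style prompt.

    The prompt layout is hypothetical and only shows the general shape of a grounded request.
    """
    ranked = sorted(
        range(len(documents)),
        key=lambda i: overlap_score(query, documents[i]),
        reverse=True,
    )[:top_k]
    lines = ["Answer using only the documents below and cite them by id."]
    for i in ranked:
        lines.append(f"[doc {i}] {documents[i]}")
    lines.append(f"Question: {query}")
    return "\n".join(lines)


if __name__ == "__main__":
    docs = [
        "The license is CC-BY-NC-4.0.",
        "The context length is 128k tokens.",
        "Bananas are yellow.",
    ]
    print(build_grounded_prompt("What is the context length?", docs))
```

The resulting string would then be sent to the model as the user message; the retrieved passages give the model explicit sources to ground its answer in.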

Parameters & Context Length of Command R 35B

With 35 billion parameters, Command R 35B falls into the large model category: it performs well on complex tasks but requires significant computational resources. Its 128k context length lets it handle very long inputs, which suits document analysis and extended conversations, though long contexts demand substantial memory and processing power. Quantized versions reduce these requirements for resource-constrained deployments.

- Name: Command R 35B

- Parameter_Size: 35b (Large model, resource-intensive)

- Context_Length: 128k (Very long context, highly resource-intensive)
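
To make "highly resource-intensive" concrete, the sketch below estimates the size of the attention key/value cache for a full-length context using the standard formula (2 tensors × layers × KV heads × head dimension × tokens × bytes per value). The architecture numbers in the example (40 layers, 64 KV heads, head dimension 128), the 16-bit cache, and reading 128k as 131,072 tokens are illustrative assumptions, not figures taken from this page; substitute the values from the model's configuration file.

```python
def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_value: int = 2,  # assumption: 16-bit cache entries
) -> int:
    """Bytes needed to cache keys and values for one sequence of seq_len tokens."""
    # Factor 2 accounts for storing both a key and a value tensor per layer.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


if __name__ == "__main__":
    # Hypothetical architecture values, chosen only to illustrate the arithmetic.
    total = kv_cache_bytes(
        num_layers=40,
        num_kv_heads=64,
        head_dim=128,
        seq_len=128 * 1024,
    )
    print(f"KV cache at full context: {total / 1024**3:.0f} GiB")
```

Under these assumptions the cache alone would far exceed the memory of a single GPU, which is why shorter context windows or cache quantization are common in practice.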

Possible Intended Uses of Command R 35B

Command R 35B, a versatile large language model, has possible applications in areas such as text generation, code generation, and multilingual support. Its potential uses could include creating content in multiple languages, assisting with coding tasks, or generating text tailored to specific linguistic needs. The model’s multilingual capabilities support languages like German, English, Spanish, Arabic, French, Italian, Japanese, Simplified Chinese, Korean, and Brazilian Portuguese, making it possible to explore cross-lingual tasks. However, these potential uses require thorough investigation to ensure alignment with specific requirements and constraints.

- Name: Command R 35B

- Intended_Uses: text generation, code generation, multilingual support

- Supported_Languages: german, english, spanish, arabic, french, italian, japanese, simplified chinese, korean, brazilian portuguese

Possible Applications of Command R 35B

With its capabilities in text generation, code generation, and multilingual support, Command R 35B could be useful for drafting documents, assisting with programming problems, or handling content in multiple languages. Its long context window may also suit analyzing extended texts or integrating tools in non-sensitive workflows, and its multilingual coverage could support cross-lingual communication or content localization. Each application must be thoroughly evaluated and tested before deployment to confirm that it fits the specific needs and constraints.

- Name: Command R 35B

- Possible Applications: text generation, code generation, multilingual support, document analysis

Quantized Versions & Hardware Requirements of Command R 35B

The q4 (4-bit) quantization of Command R 35B still requires substantial hardware: likely multiple GPUs totaling at least 48GB of VRAM, since 35 billion parameters exceed the capacity of a typical single GPU. Quantization trades some precision for a smaller memory footprint, but the system should still have at least 32GB of RAM and adequate cooling. What the model can practically be used for depends on the available hardware, so users should verify compatibility before deployment.

- Name: Command R 35B

- Quantized_Versions: fp16, q2, q3, q4, q5, q6, q8
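
As a rough guide to how the quantization levels listed above compare, the sketch below estimates the memory needed for the weights alone as parameters × bits per weight ÷ 8. The nominal bit widths are an assumption: real quantized files typically use slightly more bits per weight, and the estimate excludes the KV cache, activations, and runtime overhead, so actual requirements are higher.

```python
PARAMS = 35e9  # 35 billion parameters, as stated for Command R 35B

# Assumption: nominal bits per weight for each listed version.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params: float, bits: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name:>4}: ~{weight_memory_gb(PARAMS, bits):.1f} GB for weights")
```

By this estimate the 4-bit weights occupy about a quarter of the fp16 footprint; the remaining memory budget goes to context-dependent buffers, which grow with the length of the input.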

Conclusion

Command R 35B, a 35 billion parameter large language model developed by Cohere For AI, is optimized for conversational interaction, long-context tasks, and retrieval-augmented generation (RAG), and is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license. Its design emphasizes contextual understanding, document-based responses, and tool integration, making it suitable for complex, non-sensitive applications requiring robust language processing.

References

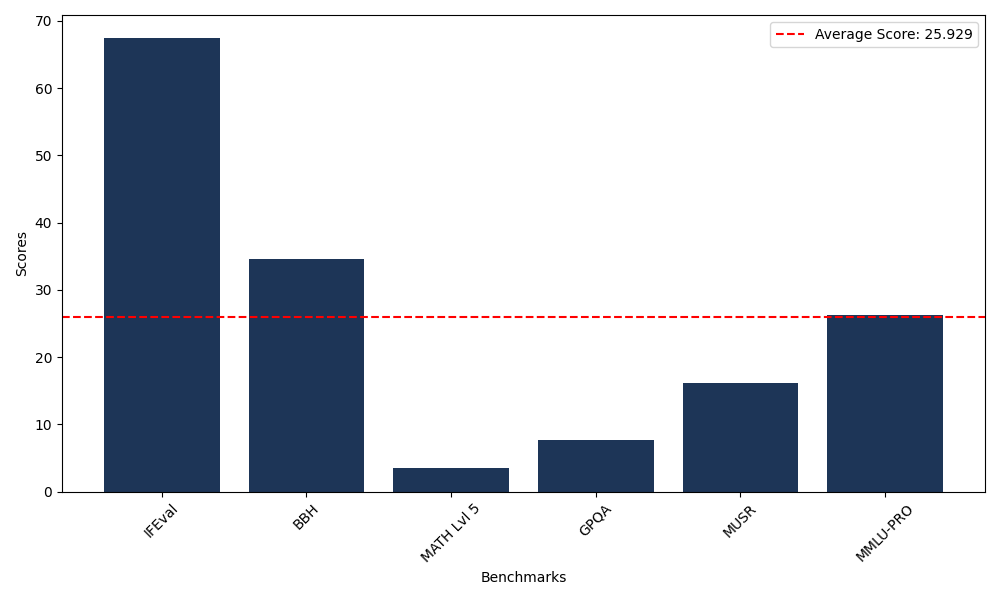

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 67.48 |
| BIG-Bench Hard (BBH) | 34.56 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 3.47 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.61 |
| Multistep Soft Reasoning (MuSR) | 16.13 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 26.33 |
