Command R Plus 104B - Model Details

Command R Plus 104B is a large language model with 104 billion parameters, developed by Cohere For AI. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which restricts it to non-commercial use. The model focuses on multilingual context understanding and on automating tool-driven workflows through retrieval augmented generation, which makes it suitable for complex, real-world applications.

Description of Command R Plus 104B

Cohere Labs Command R+ is an open-weights research release of a 104B-parameter model. It uses Retrieval Augmented Generation (RAG) and tool use to automate complex workflows, including multi-step tool use, in which the model chains several tool calls to complete one task. The model is multilingual and is intended for reasoning, summarization, and question answering. Its design prioritizes efficiency and adaptability for real-world applications that require deep contextual understanding and integration with external tools.
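To make "multi-step tool use" concrete, here is a minimal sketch of the control loop that usually surrounds such a model: the model either requests a tool call or returns a final answer, and the harness executes each call and feeds the result back. Everything in it is an assumption made for illustration: `scripted_model` is a stand-in that replays a fixed plan rather than calling Command R+, the tools `get_price` and `add` are hypothetical, and the prices are made-up values. It does not reflect Cohere's actual API or prompt format.

```python
from typing import Any, Callable

Message = dict[str, Any]

# Hypothetical tools with made-up data, for illustration only.
def get_price(item: str) -> int:
    return {"widget": 3, "gadget": 4}[item]

def add(a: int, b: int) -> int:
    return a + b

TOOLS: dict[str, Callable[..., Any]] = {"get_price": get_price, "add": add}

def scripted_model(history: list[Message]) -> Message:
    """Stand-in for a real model: picks the next step from the tool results so far."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool": "get_price", "args": {"item": "widget"}}
    if len(results) == 1:
        return {"tool": "get_price", "args": {"item": "gadget"}}
    if len(results) == 2:
        return {"tool": "add", "args": {"a": results[0], "b": results[1]}}
    return {"answer": f"Total: {results[2]}"}

def run_agent(question: str,
              model: Callable[[list[Message]], Message] = scripted_model,
              max_steps: int = 8) -> str:
    """Run tool calls until the model returns an answer or the step limit is hit."""
    history: list[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)
        if "answer" in step:
            return step["answer"]
        # Execute the requested tool and append its result for the next turn.
        result = TOOLS[step["tool"]](**step["args"])
        history.append({"role": "tool", "name": step["tool"], "content": result})
    raise RuntimeError("step limit reached without a final answer")

if __name__ == "__main__":
    print(run_agent("What do a widget and a gadget cost together?"))
```

The step limit is a design choice worth keeping in a real harness, since a model that never produces a final answer would otherwise loop indefinitely.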

Parameters & Context Length of Command R Plus 104B

Command R Plus 104B has 104B parameters, which places it among very large models: it can handle highly complex tasks but demands substantial computational resources. Its 128k context length allows it to process very long inputs, which suits tasks that need extensive context, although memory and compute costs grow with input length. Together, the scale and context length suit applications such as detailed document analysis, multi-step reasoning, and multilingual processing, provided the deployment has significant infrastructure behind it.

- Command R Plus 104B: 104B parameters – best for complex tasks, requiring significant resources.

- Command R Plus 104B: 128k context length – ideal for very long texts, highly resource-intensive.
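As a rough illustration of working within the 128k window, the sketch below greedily packs documents (for example, retrieved passages in a RAG pipeline) until an estimated token budget is used up. It rests on stated assumptions: "128k" is taken as 128,000 tokens, token counts are approximated at about 4 characters per token (a common rule of thumb for English that varies by language and is not the model's real tokenizer), and `reserve_for_output` is a hypothetical allowance for the generated reply.

```python
CONTEXT_TOKENS = 128_000  # "128k" from this page, read as 128,000 tokens (assumption)
CHARS_PER_TOKEN = 4       # rough heuristic, NOT the model's actual tokenizer


def estimate_tokens(text: str) -> int:
    """Crude token estimate: character count divided by CHARS_PER_TOKEN, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def pack_documents(docs: list[str], reserve_for_output: int = 4_000) -> list[str]:
    """Keep documents in order while the estimated total still fits the window."""
    budget = CONTEXT_TOKENS - reserve_for_output
    kept: list[str] = []
    used = 0
    for doc in docs:
        cost = estimate_tokens(doc)
        if used + cost > budget:
            break  # stop at the first document that would overflow the budget
        kept.append(doc)
        used += cost
    return kept
```

For real deployments, replacing `estimate_tokens` with the model's own tokenizer gives exact counts, which matters most for non-English text, where the characters-per-token ratio can differ substantially.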

Possible Intended Uses of Command R Plus 104B

Command R Plus 104B is designed for reasoning, summarization, and question answering, with multilingual support for languages including English, Japanese, Brazilian Portuguese, Korean, Arabic, German, Simplified Chinese, French, Spanish, and Italian. Its 104B parameter size and 128k context length suggest possible uses in complex task automation, cross-lingual content analysis, and advanced data processing. These uses would need careful evaluation against specific requirements, since the model’s capabilities are still being explored. Its multilingual support also suggests applications that involve input in several languages, though further testing would be needed to confirm effectiveness.

- reasoning

- summarization

- question answering

Possible Applications of Command R Plus 104B

Command R Plus 104B has possible applications in complex reasoning tasks, multilingual content summarization, question-answering systems, and cross-lingual data analysis. Its 104B parameter size and 128k context length suggest it could serve scenarios that require deep contextual understanding or very long inputs. These applications would need thorough testing against specific requirements, since the model’s performance in real-world settings has not been fully validated. The multilingual support may also help when working with datasets in several languages, though further exploration is necessary.

- complex reasoning tasks

- multilingual content summarization

- question-answering systems

- cross-lingual data analysis

Quantized Versions & Hardware Requirements of Command R Plus 104B

Command R Plus 104B is available in a medium q4 quantization, which balances precision and performance but still requires significant hardware. At roughly 4 bits per weight, 104B parameters occupy about 52GB for the weights alone, so a single 24GB GPU cannot hold the model. Running it entirely on GPU likely requires multiple GPUs with more than 52GB of combined VRAM; otherwise, part of the model must be offloaded to system RAM at reduced speed. A multi-core CPU and 32GB+ of RAM are also likely necessary, and more RAM if a large share of the model is offloaded. These requirements vary with the workload and optimizations, so users should verify their system’s compatibility.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
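A back-of-the-envelope way to compare these quantization levels is to multiply the parameter count by the bits stored per weight. The sketch below does that under simplifying assumptions: each level is treated as using exactly its nominal bit width (real quantized files carry per-block scales and metadata, so they come out somewhat larger), and the estimate covers weights only, not the KV cache or activations. The names `NOMINAL_BITS`, `weight_gb`, and `estimate_all` are introduced here for illustration.

```python
# Nominal bits per weight for each listed quantization level (assumption:
# real formats add scale factors and metadata on top of this).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

PARAMS = 104e9  # parameter count stated on this page


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def estimate_all(params: float = PARAMS) -> dict[str, float]:
    """Weight-only size estimate for every quantization level."""
    return {name: weight_gb(params, bits) for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, gb in estimate_all().items():
        print(f"{name:>4}: ~{gb:.0f} GB for weights alone")
```

With the 104B figure, this gives about 208 GB at fp16, 104 GB at q8, 52 GB at q4, and 26 GB at q2, which serves as a lower bound when sizing VRAM and RAM.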

Conclusion

Command R Plus 104B is a large language model with 104B parameters and a 128k context length, designed for advanced tasks such as reasoning, summarization, and question answering. It supports multilingual processing and is released as an open-weights research model, offering potential for complex applications while requiring significant computational resources.

References

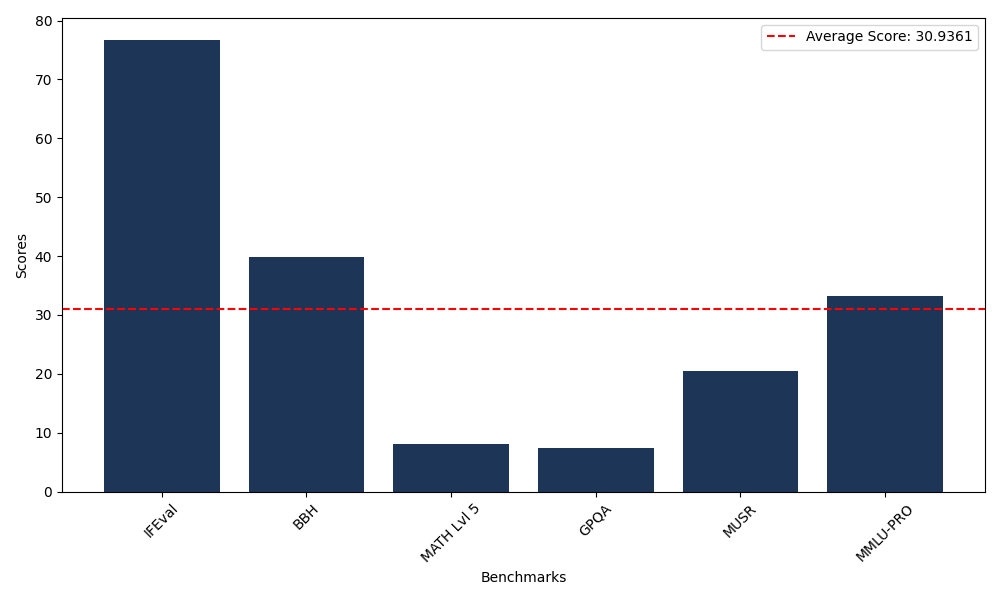

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 76.64 |
| BIG-Bench Hard (BBH) | 39.92 |
| MATH, Level 5 problems (MATH Lvl 5) | 8.01 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.38 |
| Multistep Soft Reasoning (MuSR) | 20.42 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 33.24 |