Command R7B 7B - Model Details

Command R7B 7B is a large language model with 7B parameters, developed by Cohere For AI and designed for advanced reasoning, summarization, question answering, and code tasks. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which permits non-commercial use with proper attribution. The model is maintained by Cohere For AI's developers and researchers, who focus on optimizing its performance on complex linguistic and computational tasks.

Description of Command R7B 7B

Command R7B 7B is a 7B-parameter model designed for advanced reasoning, summarization, question answering, and code tasks. It supports Retrieval Augmented Generation (RAG) and tool use for more sophisticated applications. Because it was trained on 23 languages, it offers multilingual capabilities. The model is released with open weights for research and development under the CC-BY-NC-4.0 license, which allows non-commercial use with proper attribution.

Parameters & Context Length of Command R7B 7B

With 7B parameters, Command R7B 7B sits in the small-to-mid range of open-weight LLMs. It handles moderately complex tasks efficiently while balancing speed and resource usage. Its 128k-token context length lets it process extended inputs such as long documents, which suits applications that need deep contextual understanding. Using the full window, however, demands substantially more memory. Together, its parameter count and context length make it a good fit for tasks like detailed summarization, code generation, and multi-step reasoning without requiring very large hardware.

- Parameter Size: 7B

- Context Length: 128k tokens
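The extra resources a 128k context demands largely reflect the key/value (KV) cache, which grows linearly with the number of tokens held in context. The sketch below applies the standard per-sequence KV-cache formula. The layer count, KV-head count, and head dimension are hypothetical placeholders, not Command R7B's published configuration. Substitute the values from the model's own config before relying on the numbers. The sketch also treats "128k" as 131,072 tokens (128 × 1024), which is an assumption.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence.

    The leading factor of 2 accounts for storing both the K and the V tensor
    in every layer; bytes_per_elem=2 corresponds to fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


if __name__ == "__main__":
    # HYPOTHETICAL transformer shape for illustration only,
    # not Command R7B's actual architecture.
    layers, kv_heads, head_dim = 32, 8, 128
    # 131_072 assumes "128k" means 128 * 1024 tokens.
    for tokens in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(layers, kv_heads, head_dim, tokens) / 2**30
        print(f"{tokens:>7} tokens -> {gib:.2f} GiB of fp16 KV cache")
```

With these placeholder values, the cache grows from 0.50 GiB at 4,096 tokens to 16.00 GiB at 131,072 tokens. This illustrates why long-context runs need far more memory than short prompts.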

Possible Intended Uses of Command R7B 7B

Command R7B 7B is a versatile large language model whose possible applications include advanced reasoning, summarization, question answering, and code generation. Its support for retrieval augmented generation (RAG) and tool use could enable dynamic interactions with external data sources or systems, though such use cases require careful validation. Multilingual support across 23 languages opens opportunities for cross-lingual tasks, but their effectiveness depends on specific implementation details. The model's architecture suggests utility in complex problem-solving and content creation, yet further research is needed to confirm its suitability for particular scenarios. None of the uses outlined here is guaranteed, and each should be explored with caution.

- reasoning

- summarization

- question answering

- code generation

- retrieval augmented generation (RAG)

- tool use
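To make the RAG idea concrete, here is a minimal, generic sketch of the retrieve-then-prompt loop. It scores documents against the query, keeps the top matches, and places them in the prompt so the model can ground its answer. Simple keyword overlap stands in for a real embedding-based retriever. The prompt wording is a hypothetical example, not Cohere's grounded-generation template for Command R7B.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query.

    Keyword overlap is a stand-in for embedding similarity; ties keep the
    documents' original order because Python's sort is stable.
    """
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Number the retrieved passages and wrap them in a hypothetical prompt."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered documents below and cite them.\n\n"
        f"{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Command R7B has a 128k token context window.",
        "The weather in Paris is mild in spring.",
        "Command R7B is released under the CC-BY-NC-4.0 license.",
    ]
    question = "What license is Command R7B released under?"
    print(build_prompt(question, retrieve(question, docs, k=2)))
```

The resulting prompt string would then be passed to the model through whichever runtime serves it. Only the retrieval step changes when a real vector index replaces the keyword scorer.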

Possible Applications of Command R7B 7B

Command R7B 7B may be applied to advanced reasoning, summarization, question answering, and code generation, though each use requires thorough evaluation against specific needs. Its support for retrieval augmented generation (RAG) and tool use could drive dynamic, data-driven tasks, but such scenarios demand rigorous testing. Its multilingual capabilities suggest utility in cross-lingual projects, although effectiveness may vary. The flexibility of its architecture makes it a candidate for diverse tasks, but every application should be carefully assessed before deployment.

- reasoning

- summarization

- question answering

- code generation

- retrieval augmented generation (RAG)

- tool use
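Tool use generally works as a loop. The model emits a structured call, the application executes it, and the result is passed back to the model. The sketch below shows only the application side of that loop. The JSON shape (`tool_name`, `parameters`) and the `add` tool are hypothetical choices for illustration. The actual call format Command R7B produces is defined by its chat template and should be taken from the model's documentation.

```python
import json
from typing import Any, Callable

# Registry mapping tool names to the Python functions that implement them.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    """HYPOTHETICAL example tool: add two numbers."""
    return a + b


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call (assumed format) and run the matching tool."""
    call = json.loads(model_output)
    name = call["tool_name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("parameters", {}))


if __name__ == "__main__":
    print(dispatch('{"tool_name": "add", "parameters": {"a": 2, "b": 3}}'))
```

In a full loop, the value returned by `dispatch` would be appended to the conversation so the model can use it in its next turn. Rejecting unknown tool names keeps the application from executing anything it did not explicitly register.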

Quantized Versions & Hardware Requirements of Command R7B 7B

The medium-precision q4 version of Command R7B 7B requires at least 16GB of VRAM for efficient operation and offers a balance between precision and performance. Before deploying, verify your GPU's VRAM capacity and your system memory. Actual usage also depends on how much of the context window you use.

- Available versions: fp16, q4, q8
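One rough way to relate the listed versions to memory is to multiply the parameter count by the bits stored per weight. The sketch below does this for the 7B figure used on this page and assumes nominal widths of 16, 8, and 4 bits. Real quantized formats also store scaling metadata, so actual files are somewhat larger than these estimates.

```python
# Nominal bits per weight for each version (assumption: ignores the
# per-block scale factors that real quantization formats also store).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(n_params: float, fmt: str) -> float:
    """Approximate memory for the model weights only, in decimal gigabytes."""
    return n_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt}: ~{weight_memory_gb(7e9, fmt):.1f} GB of weights")
```

These figures cover weights only. The runtime also needs memory for the KV cache and activations, which grows with context length, so practical VRAM requirements exceed these numbers.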

Conclusion

Command R7B 7B is a 7B-parameter model optimized for advanced reasoning, summarization, question answering, and code tasks, with a 128k context length and support for 23 languages. It is released under the Creative Commons Attribution-NonCommercial 4.0 International (CC-BY-NC-4.0) license, which makes it suitable for research and non-commercial applications with proper attribution.

References

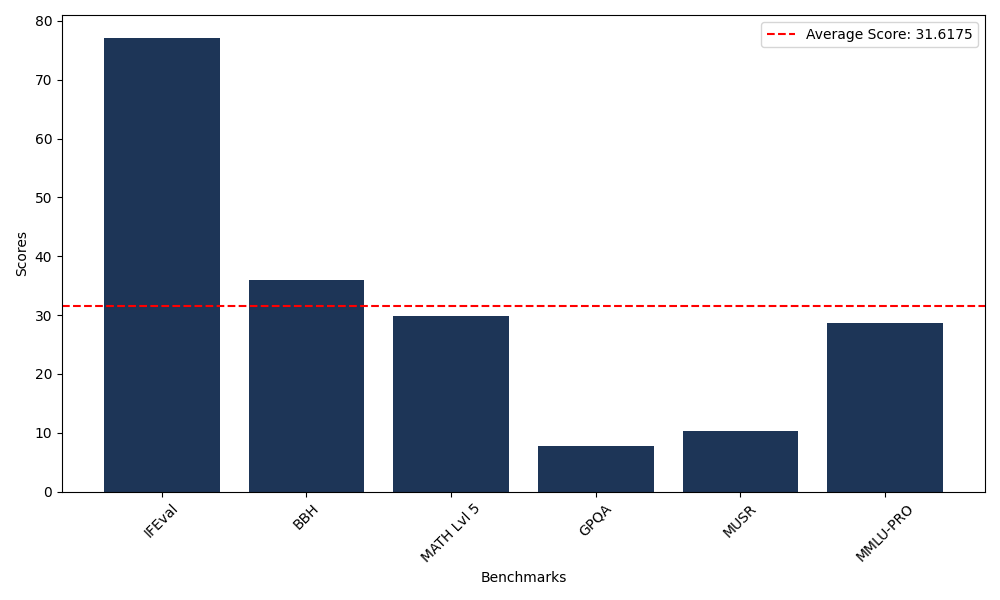

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 77.13 |
| BIG-Bench Hard (BBH) | 36.02 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 29.91 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.83 |
| Multistep Soft Reasoning (MuSR) | 10.23 |
| Massive Multitask Language Understanding (MMLU-PRO) | 28.58 |
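If a single summary number is useful, the unweighted mean of the six scores in the table can be computed directly. This is plain arithmetic on the listed values, not an official aggregate reported for the model. It also treats benchmarks of very different difficulty as equally weighted.

```python
from statistics import mean

# Scores copied from the benchmark table above.
SCORES = {
    "IFEval": 77.13,
    "BBH": 36.02,
    "MATH Lvl 5": 29.91,
    "GPQA": 7.83,
    "MuSR": 10.23,
    "MMLU-PRO": 28.58,
}

# Unweighted arithmetic mean across all six benchmarks.
average = mean(SCORES.values())
print(f"Unweighted mean of {len(SCORES)} scores: {average:.2f}")
```

The six scores sum to 189.70, so the mean comes to about 31.62.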
