DeepSeek LLM 7B - Model Details

DeepSeek LLM 7B is a large language model developed by DeepSeek, an AI research company. It has 7 billion parameters and is released under the DeepSeek License Agreement (DEEPSEEK-LICENSE), which sets its usage terms. The model is bilingual, with a focus on English and Chinese comprehension.

Description of DeepSeek LLM 7B

DeepSeek LLM 7B is a 7-billion-parameter language model trained from scratch on 2 trillion tokens of English and Chinese text. It is openly released for the research community, with use governed by the DeepSeek License Agreement (DEEPSEEK-LICENSE). Because both languages are covered in the training data, the model is best suited to applications that need solid language understanding in English, Chinese, or both.

Parameters & Context Length of DeepSeek LLM 7B

With 7B parameters, DeepSeek LLM 7B sits in the small to mid-scale range of open LLMs: it is fast and comparatively light on resources, which suits tasks of moderate complexity. Its 4k context length is short, so it works well for concise inputs but is less suited to processing long documents, since the prompt and the generated reply must both fit inside that window.

- Parameter Size: 7B

- Context Length: 4k
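
To make the context limit concrete, here is a minimal sketch of budgeting tokens against the window. It assumes that "4k" means 4096 tokens (check the model's configuration for the exact value), and the function names `max_prompt_tokens` and `split_into_windows` are hypothetical helpers, not part of any DeepSeek tooling. Token IDs would come from the model's tokenizer; the example uses placeholder integers instead.

```python
# Assumption: "4k" is read as 4096 tokens; verify against the model config.
CONTEXT_LENGTH = 4096


def max_prompt_tokens(max_new_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many prompt tokens fit once room for the reply is reserved."""
    if max_new_tokens < 0 or max_new_tokens > context_length:
        raise ValueError("max_new_tokens must be between 0 and context_length")
    return context_length - max_new_tokens


def split_into_windows(token_ids: list[int], window: int) -> list[list[int]]:
    """Split a long token sequence into consecutive chunks of at most `window` tokens."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [token_ids[i:i + window] for i in range(0, len(token_ids), window)]


if __name__ == "__main__":
    # Reserve 512 tokens for the generated reply.
    budget = max_prompt_tokens(max_new_tokens=512)
    # Placeholder token IDs standing in for a tokenized long document.
    document_tokens = list(range(10_000))
    chunks = split_into_windows(document_tokens, budget)
    print(f"prompt budget: {budget} tokens, chunks needed: {len(chunks)}")
```

Splitting into independent windows is the simplest option; each chunk is processed without knowledge of the others, so tasks that need cross-chunk context would require a different strategy such as summarizing earlier chunks.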

Possible Intended Uses of DeepSeek LLM 7B

DeepSeek LLM 7B is a 7B-parameter model designed for tasks involving English and Chinese. Its bilingual training and support for text generation, language translation, and code generation suggest possible applications in creative writing, cross-lingual communication, and software development. The model’s open availability and licensing terms also allow for experimentation in non-critical domains. These uses remain possibilities rather than validated capabilities, so each should be tested against real-world requirements before being relied on.

- text generation

- language translation

- code generation

Possible Applications of DeepSeek LLM 7B

DeepSeek LLM 7B could potentially support text generation for creative or informational content, translation between English and Chinese, and code generation for software development tasks. These applications may suit scenarios that need bilingual processing or automated text creation. Given its bilingual design and 7B parameter size, the model is more likely to fit tasks where efficiency and simplicity matter more than handling extreme complexity. Each use case should be evaluated carefully against its specific requirements.

- text generation

- language translation

- code generation

Quantized Versions & Hardware Requirements of DeepSeek LLM 7B

For the q4 quantization of DeepSeek LLM 7B, the recommended setup is a GPU with at least 16GB of VRAM and 32GB of system memory, along with adequate cooling and a stable power supply. This version trades some precision for lower memory use and faster inference, which makes it suitable for deployment on mid-range hardware. The model is available in the following precisions:

- fp16, q2, q3, q4, q5, q6, q8
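
As a rough guide to how these versions differ in size, the sketch below estimates the memory taken by the weights alone at each precision. It assumes exactly 7 billion parameters and nominal bit widths (16 for fp16, 8 for q8, and so on); real quantized files typically use slightly more bits per weight because of scaling metadata, and inference also needs memory for activations and the attention cache, so treat the results as lower bounds. The name `weight_memory_gb` is a hypothetical helper.

```python
# Assumption: nominal bits per weight; actual quantized formats add some overhead.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

# Assumption: the "7B" label is taken as exactly 7 billion parameters.
PARAMETER_COUNT = 7_000_000_000


def weight_memory_gb(quant: str, parameter_count: int = PARAMETER_COUNT) -> float:
    """Estimate weight storage in gigabytes (1 GB = 1e9 bytes) for a quantization level."""
    bits = NOMINAL_BITS[quant]  # raises KeyError for an unknown level
    total_bytes = parameter_count * bits / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for quant in NOMINAL_BITS:
        print(f"{quant:>4}: ~{weight_memory_gb(quant):.2f} GB for weights")
```

Under these assumptions the q4 weights come to about 3.5 GB and the fp16 weights to about 14 GB, which shows how much headroom each precision leaves on a given GPU.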

Conclusion

DeepSeek LLM 7B is a 7B-parameter language model built for efficient, bilingual work in English and Chinese, released under the DeepSeek License Agreement (DEEPSEEK-LICENSE). It supports text generation, language translation, and code generation, and each of these uses should be evaluated thoroughly before deployment in a specific setting.

References

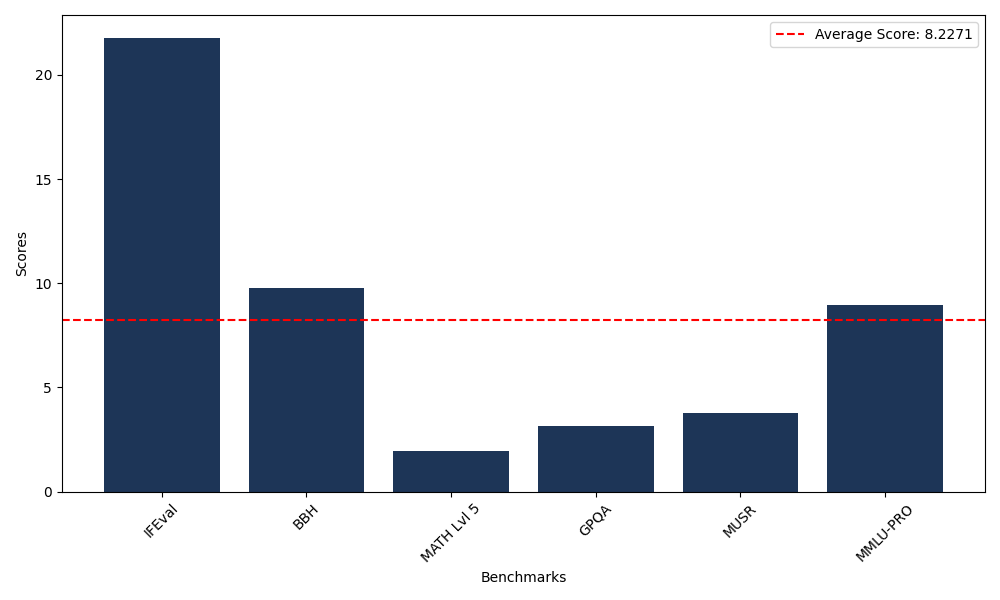

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 21.79 |
| BIG-Bench Hard (BBH) | 9.77 |
| MATH, Level 5 problems (MATH Lvl 5) | 1.96 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.13 |
| Multistep Soft Reasoning (MuSR) | 3.76 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 8.96 |
