DeepSeek LLM 7B Base - Model Details

DeepSeek LLM 7B Base is a large language model developed by DeepSeek, a company focused on advancing multilingual AI capabilities. With 7 billion parameters, it is designed to understand and generate text in English and Chinese. The model is released under the DeepSeek License Agreement (DEEPSEEK-LICENSE), which sets the terms of use. Its design emphasizes efficiency and adaptability across linguistic tasks.

Description of DeepSeek LLM 7B Base

DeepSeek LLM 7B Base is a language model with 7 billion parameters, trained from scratch on 2 trillion tokens of English and Chinese text. It has been open-sourced for the research community.

Parameters & Context Length of DeepSeek LLM 7B Base

DeepSeek LLM 7B Base has 7B parameters, placing it in the small to mid-scale range of open-source LLMs, a size that trades some capability for lower compute and memory cost. Its 4k context length suits short to moderate-length inputs but limits its ability to process very long texts, because the prompt and the generated output together must fit in that window. This configuration suits applications that prioritize speed and resource efficiency while retaining English and Chinese support.

- Parameter Size: 7B

- Context Length: 4k
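
To make the context limit concrete, here is a minimal sketch of trimming a tokenized prompt so that the prompt plus the tokens to be generated fit in the window. It assumes that "4k" means 4096 tokens and that the prompt is already a list of token IDs; `fit_prompt` and its parameters are hypothetical names used only for illustration, not part of any DeepSeek tooling.

```python
CONTEXT_LENGTH = 4096  # assumption: "4k" is read as 4096 tokens


def fit_prompt(token_ids, max_new_tokens, context_length=CONTEXT_LENGTH):
    """Return the most recent prompt tokens that leave room for generation."""
    if max_new_tokens < 0:
        raise ValueError("max_new_tokens must be non-negative")
    if max_new_tokens >= context_length:
        raise ValueError("max_new_tokens leaves no room for a prompt")
    # Tokens available to the prompt once generation space is reserved.
    budget = context_length - max_new_tokens
    # Keep the tail of the prompt; the oldest tokens are dropped first.
    return token_ids[-budget:]
```

Dropping the oldest tokens is only one possible policy; summarizing or chunking the input may suit some tasks better.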

Possible Intended Uses of DeepSeek LLM 7B Base

DeepSeek LLM 7B Base targets tasks that need English and Chinese support, with a focus on research, commercial applications, and text completion. Its 7B parameter size and 4k context length make it a candidate for content generation, language modeling, or exploratory analysis, though each use needs thorough testing against the specific goals. Because the model covers only English and Chinese, it is best suited to projects working in one or both of those languages. These uses remain speculative and require careful evaluation before deployment.

- research

- commercial applications

- text completion
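
Since text completion is listed as an intended use, a common way to steer a base model is to give it a few worked examples and leave the last one open for it to continue. The sketch below shows that prompt layout only; `build_completion_prompt`, the `Input`/`Output` labels, and the example pairs are illustrative assumptions rather than a format prescribed for this model.

```python
def build_completion_prompt(examples, query, input_label="Input", output_label="Output"):
    """Format worked examples followed by an open slot for the model to complete."""
    # Each example becomes a two-line block: the input, then its expected output.
    blocks = [f"{input_label}: {src}\n{output_label}: {tgt}" for src, tgt in examples]
    # The final block stops after the output label so the model continues from there.
    blocks.append(f"{input_label}: {query}\n{output_label}:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    pairs = [("hello", "你好"), ("good morning", "早上好")]
    print(build_completion_prompt(pairs, "thank you"))
```

Whether this layout yields useful completions for a given task is something to verify empirically.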

Possible Applications of DeepSeek LLM 7B Base

DeepSeek LLM 7B Base is a candidate for applications that need English and Chinese support, such as content generation, language modeling, text summarization, and interactive dialogue systems. Its 7B parameter size and 4k context length could also suit creative writing, educational content development, or localized customer support. Because the model covers only English and Chinese, it fits projects working in one or both of those languages. All of these applications remain speculative and need rigorous validation before deployment.

- content generation

- language modeling

- text summarization

- interactive dialogue systems

Quantized Versions & Hardware Requirements of DeepSeek LLM 7B Base

In its mid-size q4 quantization, DeepSeek LLM 7B Base calls for a GPU with at least 16GB of VRAM (e.g., an RTX 3090, which has 24GB), with 12GB–24GB given as the range for optimal performance. That puts it within reach of mid-range hardware, although system memory (at least 32GB of RAM) and adequate cooling also matter. The q4 quantization lowers resource demands relative to higher-precision versions such as fp16, which can allow deployment on machines without high-end GPUs. Thorough testing is still recommended to confirm compatibility with a specific hardware configuration. The listed versions are:

- fp16

- q2

- q3

- q4

- q5

- q6

- q8
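
As a rough guide to how the listed versions compare, the sketch below estimates the size of the weights alone from the parameter count and a nominal number of bits per weight. The bit widths in `NOMINAL_BITS` are assumptions taken from the version names; real quantization formats add per-block metadata, and running the model also needs memory for activations and the key-value cache, so actual requirements are higher than these figures.

```python
PARAMS = 7_000_000_000  # "7B" from the text; the exact parameter count may differ

# Assumption: each version name maps to this nominal number of bits per weight.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(params, bits_per_weight):
    """Approximate size of the weights alone, in GiB."""
    return params * bits_per_weight / 8 / 2**30


def estimate_all(params=PARAMS):
    """Map each listed version to its estimated weight size in GiB."""
    return {name: round(weight_gib(params, bits), 2) for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, gib in estimate_all().items():
        print(f"{name:>4}: ~{gib} GiB")
```

Under these assumptions the fp16 weights come to roughly 13 GiB and the q4 weights to roughly 3.3 GiB, which illustrates why lower-bit versions fit on smaller GPUs.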

Conclusion

DeepSeek LLM 7B Base is a 7B-parameter language model with a 4k context length, open-sourced under the DeepSeek License Agreement and designed for tasks in English and Chinese. Developed by DeepSeek, it offers a balance of efficiency and performance for research and commercial applications.

References

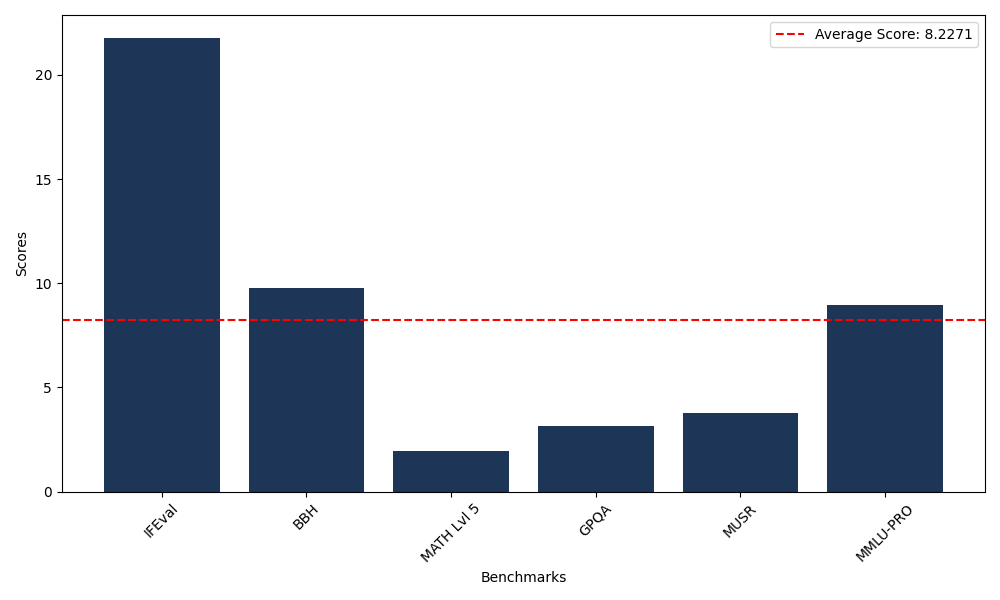

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 21.79 |
| BIG-Bench Hard (BBH) | 9.77 |
| Mathematical Reasoning (MATH Lvl 5) | 1.96 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.13 |
| Multistep Soft Reasoning (MuSR) | 3.76 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 8.96 |
