Deepseek R1 14B - Model Details

Deepseek R1 14B is a large language model developed by Deepseek, a company that has advanced language-model reasoning through large-scale reinforcement learning (RL). With 14 billion parameters, it is designed to handle complex tasks efficiently. The model is released under the MIT License, which permits broad use and modification. Its training emphasizes multi-step reasoning and problem solving, making it suitable for applications that require careful analysis, such as mathematics and coding.

Description of Deepseek R1 14B

Deepseek R1 14B belongs to the DeepSeek-R1 family of reasoning models developed by Deepseek. Its precursor, DeepSeek-R1-Zero, was trained with reinforcement learning (RL) alone, without supervised fine-tuning (SFT), and suffered from problems such as endless repetition and poor readability; DeepSeek-R1 addresses these by incorporating cold-start data before RL. The full DeepSeek-R1 model achieves performance comparable to OpenAI-o1 on math, code, and reasoning tasks, and its reasoning ability can be distilled into smaller models that remain strong on benchmarks while being much cheaper to deploy. The 14B variant is one of these distilled models: it is based on Qwen2.5-14B and fine-tuned on reasoning data generated by DeepSeek-R1. The models are open-sourced under the MIT License, with variants available in different parameter sizes and built on different base models, making them accessible for research and diverse applications.

Parameters & Context Length of Deepseek R1 14B

Deepseek R1 14B has 14 billion parameters, placing it in the mid-scale category of open-source LLMs and offering a balance between performance and resource efficiency for tasks of moderate complexity. Its 128k-token context length falls into the very long range: it can process extensive texts in a single prompt, but using the full window is expensive, because the memory needed to cache attention keys and values grows linearly with the number of tokens. This combination lets the model handle complex reasoning and long-form content while leaving flexibility for deployment.

- Name: Deepseek R1 14B

- Parameter Size: 14B (14 billion)

- Context Length: 128k

- Implications: Mid-scale parameters for balanced performance; a very long context for handling extensive texts, at the cost of high resource demands.
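To make the cost of a long context concrete, here is a minimal sketch that estimates key-value (KV) cache memory as a function of context length. The architecture numbers are assumptions, not stated on this page: they mirror the Qwen2.5-14B configuration (48 layers, 8 key-value heads via grouped-query attention, head dimension 128) on which the distilled 14B model is based, with the cache held in 16-bit precision. The 128k window is taken as 131,072 tokens.

```python
# Rough KV-cache size estimate for a decoder-only transformer.
# ASSUMPTION: the config values below mirror Qwen2.5-14B (the base of the
# distilled 14B model); they are not stated on this page.
NUM_LAYERS = 48      # transformer blocks
NUM_KV_HEADS = 8     # key/value heads (grouped-query attention)
HEAD_DIM = 128       # dimension per attention head
BYTES_PER_VALUE = 2  # fp16/bf16 cache entries


def kv_cache_bytes(num_tokens: int) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens."""
    # Factor 2: one key vector and one value vector per KV head, per layer.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


if __name__ == "__main__":
    for tokens in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>7} tokens -> {gib:5.1f} GiB of KV cache")
```

Under these assumptions each token costs 196,608 bytes of cache, so filling the full 131,072-token window needs 24 GiB for the cache alone, on top of the model weights. Runtimes that quantize the KV cache would need proportionally less.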

Possible Intended Uses of Deepseek R1 14B

Deepseek R1 14B is a versatile model with possible applications in research, code generation, and mathematical problem solving, though each of these uses would need thorough exploration to confirm its effectiveness. Its focus on reasoning suggests it might support research tasks involving complex analysis or hypothesis testing, while its parameter size and context length could make it useful for code generation in specific projects. Mathematical problem solving might benefit from its structured, step-by-step approach, but this too would need validation through experimentation. Because the model is open source, its adaptability can be investigated directly, though further study is essential to understand its limitations and how best to deploy it.

- Name: Deepseek R1 14B

- Intended Uses: research, code generation, mathematical problem solving

- Purpose: Designed for reasoning tasks; distilled from DeepSeek-R1, whose training relies on reinforcement learning

- Important Info: These uses are possible but require further investigation.

Possible Applications of Deepseek R1 14B

Deepseek R1 14B could be applied in areas such as research, code generation, mathematical problem solving, and content creation, though each of these uses would require thorough evaluation to ensure suitability. In research, it might help analyze complex datasets or generate hypotheses; in code generation, it could aid in drafting or optimizing scripts. For mathematical problem solving, it might apply its reasoning capabilities to structured tasks, and for content creation it could generate coherent text for specific purposes. These applications highlight the model's flexibility but underscore the need for rigorous testing to confirm effectiveness and alignment with specific goals.

- Name: Deepseek R1 14B

- Possible Applications: research, code generation, mathematical problem solving, content creation

- Important Info: These possible uses require thorough evaluation and testing before deployment.

Quantized Versions & Hardware Requirements of Deepseek R1 14B

Deepseek R1 14B in its medium-precision q4 (4-bit) version needs a GPU with roughly 16GB–32GB of VRAM (for example, a 24GB RTX 3090), making it suitable for mid-range hardware. This version trades a small amount of precision for much lower memory use, but users should check their graphics card's specifications against these requirements. At least 32GB of system RAM and adequate cooling are also recommended.

- Name: Deepseek R1 14B

- Quantized Versions: fp16, q4, q8

- Important Info: Hardware requirements depend on parameter size and quantization level.
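Since hardware requirements scale with parameter count and quantization level, a back-of-the-envelope estimate shows how the listed versions compare. This is a minimal sketch assuming a nominal 14 billion parameters and idealized bit widths (16, 8, and 4 bits per weight); real quantized formats also store scale factors, so actual files run somewhat larger, and the KV cache and runtime buffers come on top.

```python
# Back-of-the-envelope memory needed just to hold the model weights.
# ASSUMPTIONS: a nominal 14e9 parameters and idealized bit widths; real
# quantization formats also store scale factors, so files run slightly larger,
# and the KV cache plus runtime buffers are not included here.
NUM_PARAMS = 14e9

BITS_PER_WEIGHT = {
    "fp16": 16,  # half precision, no quantization
    "q8": 8,     # 8-bit quantization
    "q4": 4,     # 4-bit quantization
}


def weight_gb(num_params: float, bits: int) -> float:
    """Weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name:>4}: ~{weight_gb(NUM_PARAMS, bits):.1f} GB of weights")
```

Under these assumptions the q4 weights take about 7 GB versus about 14 GB for q8 and 28 GB for fp16, which is why the 4-bit version fits on mid-range cards while fp16 calls for considerably more memory.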

Conclusion

Deepseek R1 14B is a large language model developed by Deepseek with 14 billion parameters, released under the MIT License and designed for advanced reasoning tasks. It is distilled from DeepSeek-R1, whose training relies heavily on reinforcement learning. Its 128k context length suits it to complex, long-form applications, and its open-source release makes it available for research and experimentation.

References

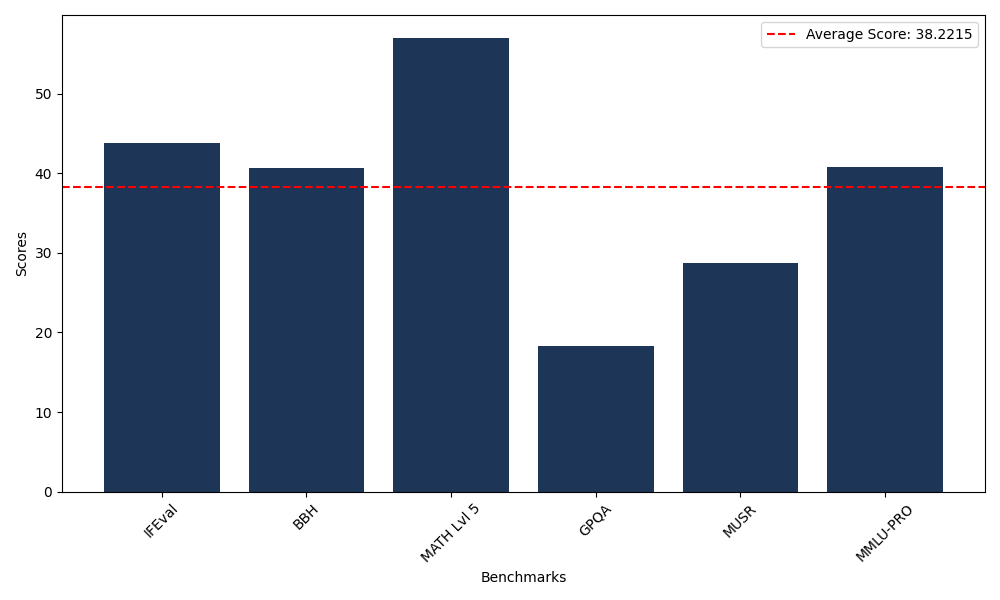

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 43.82 |
| BIG-Bench Hard (BBH) | 40.69 |
| MATH, Level 5 problems (MATH Lvl 5) | 57.02 |
| Graduate-Level Google-Proof Q&A (GPQA) | 18.34 |
| Multistep Soft Reasoning (MuSR) | 28.71 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 40.74 |
