Deepseek R1 70B - Model Details

Deepseek R1 70B is a large language model developed by DeepSeek, a company focused on advancing AI through novel training methods. With 70 billion parameters, it targets reasoning tasks and inherits its reasoning behavior from DeepSeek-R1, a model trained largely through reinforcement learning. The weights are released under the MIT License, which permits free use, modification, and redistribution for research and development. Because the 70B variant is derived from Llama-3.3-70B-Instruct, the Llama 3.3 license terms also apply to it.

Description of Deepseek R1 70B

DeepSeek-R1 is a large-scale reasoning model trained primarily with reinforcement learning (RL). Its predecessor, DeepSeek-R1-Zero, was trained with RL alone, without supervised fine-tuning (SFT) as a preliminary step, and suffered from issues such as endless repetition and poor readability. DeepSeek-R1 addresses these limitations by incorporating cold-start data before the RL stage. It achieves performance comparable to OpenAI-o1 on math, code, and reasoning tasks. Its reasoning ability can also be distilled into smaller dense models, which are fine-tuned on samples generated by DeepSeek-R1; the 70B model described here is one of these distilled variants, built on Llama-3.3-70B-Instruct. The models are open-sourced for research, with variants covering different parameter sizes and base models.

Parameters & Context Length of Deepseek R1 70B

Deepseek R1 70B has 70 billion parameters, placing it in the very large model category: this enables advanced reasoning and complex task handling but demands substantial computational resources. Its 128k-token context window counts as very long, allowing it to process long documents in a single pass. However, the key-value (KV) cache that stores attention state grows linearly with the number of tokens in context, so using the full window requires significant memory on top of the model weights. This combination suits the model to tasks such as long-form content generation or detailed analysis, though deployment may require optimized infrastructure.

- Name: Deepseek R1 70B

- Parameter Size: 70b

- Context Length: 128k

- Implications: Very large models for complex tasks, very long contexts for extended text processing, both requiring significant resources.
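To make the memory cost of the 128k window concrete, the sketch below estimates KV-cache size. It assumes the distilled model keeps the architecture of Llama-3.3-70B-Instruct (80 layers, 8 key/value heads via grouped-query attention, head dimension 128) and stores the cache in fp16; these architecture values are assumptions, not figures stated on this page.

```python
# Assumed architecture values, taken from the Llama-3.3-70B-Instruct
# configuration that the distilled 70B model is built on (not from this page).
NUM_LAYERS = 80       # transformer layers
NUM_KV_HEADS = 8      # key/value heads (grouped-query attention)
HEAD_DIM = 128        # dimension of each attention head
BYTES_PER_VALUE = 2   # fp16/bf16 cache entries


def kv_cache_bytes(num_tokens: int,
                   layers: int = NUM_LAYERS,
                   kv_heads: int = NUM_KV_HEADS,
                   head_dim: int = HEAD_DIM,
                   bytes_per_value: int = BYTES_PER_VALUE) -> int:
    """Estimate KV-cache size: one key and one value vector per token,
    per layer, per KV head."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value
    return per_token * num_tokens


for tokens in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.2f} GiB of KV cache")
```

Under these assumptions each token costs 327,680 bytes (320 KiB) of cache, so a full 131,072-token window needs about 40 GiB in addition to the model weights. Using a shorter context or a quantized cache reduces this proportionally.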

Possible Intended Uses of Deepseek R1 70B

Deepseek R1 70B may be useful for research, code generation, and mathematical problem solving, although its effectiveness in each area needs further evaluation. As a reasoning-focused model, it could help researchers analyze complex material or generate hypotheses. For code generation, it could assist with writing or optimizing code, though its performance in real-world programming scenarios needs validation. For mathematical problem solving, it could propose solutions or intermediate steps, but its accuracy on intricate calculations requires rigorous testing. These uses reflect the model's flexibility, but each should be evaluated carefully before deployment.

- research

- code generation

- mathematical problem solving
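For code-generation or math tasks, one way to try the model is through a local runtime. The sketch below assumes the model is served by Ollama under the tag `deepseek-r1:70b` on the default port 11434 and calls Ollama's `/api/generate` endpoint with streaming disabled. It also assumes the reply wraps the model's reasoning in `<think>...</think>` tags before the final answer, as R1-style models typically do; `split_reasoning` and `ask` are hypothetical helper names, not part of any library.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default Ollama endpoint
MODEL_TAG = "deepseek-r1:70b"                       # assumed model tag

# Matches a single <think>...</think> block, including newlines inside it.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Split a reply into (reasoning, answer), assuming the reasoning is
    enclosed in one <think>...</think> block. Without tags, everything
    is treated as the answer."""
    match = _THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask(prompt: str) -> tuple[str, str]:
    """Send one non-streaming request to a local Ollama server and
    return the (reasoning, answer) pair."""
    payload = json.dumps({"model": MODEL_TAG, "prompt": prompt, "stream": False})
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # With "stream": False, Ollama returns the full generated text in "response".
    return split_reasoning(body["response"])
```

With a server running, `reasoning, answer = ask("What is the sum of the first 100 positive integers?")` keeps the answer separate from the reasoning trace, which makes it easier to check the final result independently when evaluating the model's accuracy.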

Possible Applications of Deepseek R1 70B

Beyond these uses, Deepseek R1 70B could be applied in other domains that call for advanced reasoning, including software development, though each application would need thorough evaluation. Its large parameter count and long context window might benefit academic research, for example in hypothesis testing or data interpretation; programming, where it could propose solutions to coding challenges; and mathematics, where it could work through complex calculations. In every case, its accuracy and reliability should be assessed before deployment.

- research

- code generation

- mathematical problem solving

- software development

Quantized Versions & Hardware Requirements of Deepseek R1 70B

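Deepseek R1 70B's medium q4 version offers a balance between precision and memory use, but even at 4 bits per weight the 70 billion parameters occupy at least 35 GB (70 billion × 0.5 bytes) before the KV cache and runtime overhead are counted. Running it entirely on GPU therefore needs roughly 40 GB or more of combined VRAM (for example, two 24 GB GPUs or a single 48 GB card); a single 24 GB GPU can run it only by offloading some layers to system RAM, at a substantial cost in speed. Exact requirements depend on the quantization scheme, the inference engine, and the context length used. Quantization still cuts memory use sharply compared with fp16, which needs about 140 GB for the weights alone, bringing the model within reach of high-end workstation hardware. Users should check their system's memory against the model's parameter count, quantization level, and intended context length before deploying.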

- fp16, q4, q8
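The arithmetic behind these requirements can be sketched as follows. The bits-per-weight figures for q8 and q4 are assumptions chosen to roughly match llama.cpp's Q8_0 and Q4_0 block formats, which store a scale alongside each block of 32 weights; other q4 variants (such as the "medium" K-quant) use slightly different effective sizes.

```python
PARAMS = 70e9  # approximate parameter count of Deepseek R1 70B

# Approximate storage cost per weight. The q8/q4 values are assumptions:
# block-quantized formats add a per-block scale, so the effective cost
# is slightly above the nominal 8 or 4 bits.
BITS_PER_WEIGHT = {
    "fp16": 16.0,
    "q8": 8.5,
    "q4": 4.5,
}


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: ~{weight_gb(PARAMS, bits):.0f} GB for weights alone")
```

Under these assumptions the q4 weights alone come to roughly 39 GB, q8 to about 74 GB, and fp16 to 140 GB, before the KV cache and runtime buffers are added.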

Conclusion

Deepseek R1 70B is a large language model with 70 billion parameters and a 128k-token context window, built for advanced reasoning tasks by distilling the capabilities of DeepSeek-R1, a model trained largely through reinforcement learning. It is open-sourced alongside variants with other parameter sizes and base models, offering flexibility but requiring significant computational resources to deploy.

References

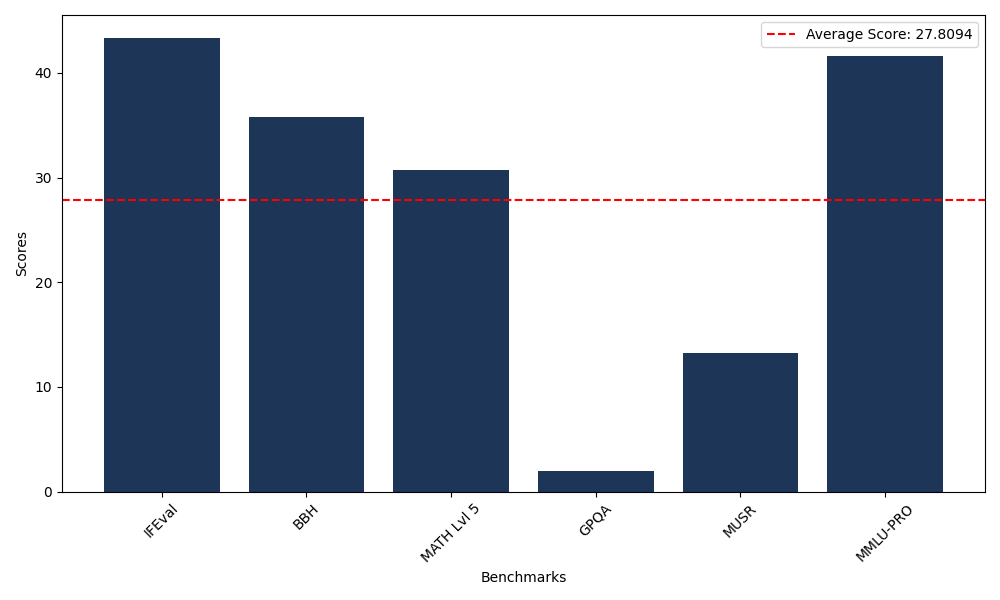

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 43.36 |
| Big-Bench Hard (BBH) | 35.82 |
| MATH Level 5 (MATH Lvl 5) | 30.74 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.01 |
| Multistep Soft Reasoning (MuSR) | 13.28 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 41.65 |
