Dolphin3 8B - Model Details

Dolphin3 8B is a large language model developed by Cognitive Computations, a community-driven initiative. With 8 billion parameters, it is designed for efficient local deployment and user-driven customization, giving users greater control over its behavior. The model is released under the Llama 3.1 Community License Agreement (LLAMA-31-CCLA). Its instruction tuning and general-purpose focus make it a versatile tool for a wide range of practical tasks.

Description of Dolphin3 8B

Dolphin 3.0 is the next generation of the Dolphin series of instruct-tuned models. It is designed to be a general-purpose local model capable of handling coding, math, agentic tasks, function calling, and other diverse use cases. It aims to replicate the versatility of models like ChatGPT, Claude, and Gemini while addressing the challenges businesses face when integrating AI into their products. By prioritizing local deployment, Dolphin 3.0 gives system owners control over customization, alignment, and data management, offering greater flexibility and security than centralized, hosted models. Its focus on user-driven adaptability makes it a practical choice for applications that require tailored AI behavior.

Parameters & Context Length of Dolphin3 8B

Dolphin3 8B has 8 billion parameters, which places it in the mid-scale category of open-source LLMs and balances performance against resource efficiency for tasks of moderate complexity. Its 4k-token context length falls in the short-to-moderate range: it suits typical interactions but is less suited to processing long documents. Compared with larger models, the 8B size keeps inference fast and computational demands low, which favors local deployment and user-controlled customization, while the 4k window covers standard use cases without excessive resource consumption.

- Name: Dolphin3 8B

- Parameter Size: 8B

- Context Length: 4k tokens

- Implications: Mid-scale performance with efficient resource use, suitable for local deployment and general tasks, but limited in handling very long texts.
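Because the 4k window must hold the system prompt, the conversation history, and the model's reply, applications typically trim older turns to stay within it. The Python sketch below is a minimal illustration, not part of the model's own tooling: it assumes "4k" means 4,096 tokens, reserves a hypothetical 512 tokens for the reply, and approximates token counts at four characters per token instead of using the model's actual tokenizer. The names `estimate_tokens` and `fit_history` are illustrative only.

```python
# Minimal sketch: keep a chat history inside a fixed context window.
# Assumptions (not from the model card): "4k" = 4096 tokens, a hypothetical
# 512-token reply budget, and a crude ~4 characters-per-token estimate.

CONTEXT_TOKENS = 4096       # assumed size of the "4k" window
RESERVED_FOR_REPLY = 512    # hypothetical budget left for the model's answer
CHARS_PER_TOKEN = 4         # rough heuristic; a real tokenizer is more accurate


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (ceiling division, min 1)."""
    return max(1, -(-len(text) // CHARS_PER_TOKEN))


def fit_history(system: str, messages: list[str]) -> list[str]:
    """Return the system prompt plus the newest messages that fit the budget.

    Older messages are dropped first; the system prompt is always kept.
    """
    budget = CONTEXT_TOKENS - RESERVED_FOR_REPLY - estimate_tokens(system)
    kept: list[str] = []
    for msg in reversed(messages):      # walk from newest to oldest
        cost = estimate_tokens(msg)
        if cost > budget:
            break                       # stop so the kept history has no gaps
        kept.append(msg)
        budget -= cost
    return [system] + list(reversed(kept))  # restore chronological order
```

Stopping at the first message that no longer fits, rather than skipping it and continuing, keeps the retained history contiguous. Replacing `estimate_tokens` with a count from the model's real tokenizer would make the budget accurate.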

Possible Intended Uses of Dolphin3 8B

Dolphin3 8B, with 8 billion parameters and a 4k context length, is designed for applications that require adaptability and local deployment. Its support for agentic tasks and function calling suggests uses in workflow automation or interactive systems, though these would need careful evaluation for specific scenarios. Its instruction-tuned design could support problem-solving and code generation in coding and math tasks, but real-world effectiveness would depend on customization. General use cases might include content creation or dialogue systems, although the context length and parameter size may impose limits. The model's emphasis on user-controlled steerability is an advantage for tailored implementations, while its scalability and performance in demanding settings remain to be explored.

- Intended Uses: coding, math, agentic tasks, function calling, general use cases

Possible Applications of Dolphin3 8B

Dolphin3 8B, with 8 billion parameters and a 4k context length, could be applied to coding, math, agentic tasks, and function calling. In coding, it might generate or refine code snippets, although the context length limits how much code it can consider at once. In math, it could solve problems or explain concepts, but its accuracy may vary and requires testing. For agentic tasks, it might automate multi-step work, though its behavior in complex workflows needs investigation. Through function calling, it could drive interactions with external systems, but this depends on careful setup of the tools it calls. Each application should be thoroughly evaluated and tested before deployment to confirm it meets specific needs.

- coding

- math

- agentic tasks

- function calling
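Function calling generally means the model emits a structured request that the host application parses and executes. The model card does not specify Dolphin3's tool-call format, so the Python sketch below assumes a hypothetical JSON shape, `{"name": ..., "arguments": {...}}`, and uses made-up example tools (`add`, `word_count`). It covers only the application side: validating the call and dispatching it to a registered function.

```python
import json
from typing import Any, Callable

# Registry of tools the application exposes (hypothetical example tools).
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def word_count(text: str) -> int:
    return len(text.split())


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call and run the matching registered function.

    Assumed format (not defined by the model card):
    {"name": "<tool name>", "arguments": {...}}
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return TOOLS[name](**args)
```

For example, `dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}')` returns `5`. Rejecting unknown tool names and malformed arguments, rather than executing whatever the model produces, is a basic safeguard when a locally deployed model drives real actions.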

Quantized Versions & Hardware Requirements of Dolphin3 8B

The medium q4 version of Dolphin3 8B requires a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of systems with mid-range graphics cards. System memory should be at least 32GB of RAM, and proper GPU cooling and a stable power supply help ensure smooth execution. By lowering weight precision, the q4 version trades some accuracy for reduced memory use and faster inference, enabling local deployment without excessive resource demands.

- Available versions: fp16, q4, q8
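The memory figures above can be sanity-checked with simple arithmetic: weight memory is roughly the parameter count times the bits per weight, plus a key/value (KV) cache that grows with context length. The Python sketch below rests on assumptions not stated on this page: exactly 8 billion weights, nominal bit widths (real q4/q8 formats also store scaling data, so actual files are somewhat larger), and a KV-cache shape taken from the Llama 3.1 8B architecture (32 layers, 8 key/value heads, head dimension 128) stored in fp16. It ignores runtime overhead such as activations and framework buffers.

```python
# Back-of-the-envelope memory estimate for an 8B-parameter model.
# Assumptions: exactly 8e9 weights; nominal bits per weight (real quantized
# formats add scale data); KV-cache shape assumed from Llama 3.1 8B.

PARAMS = 8e9
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}

N_LAYERS, N_KV_HEADS, HEAD_DIM = 32, 8, 128  # assumed architecture values
KV_BYTES_PER_VALUE = 2                        # fp16 cache entries

GIB = 1024 ** 3


def weight_bytes(precision: str) -> float:
    """Bytes needed to store the weights at the given nominal precision."""
    return PARAMS * BITS_PER_WEIGHT[precision] / 8


def kv_cache_bytes(context_tokens: int) -> int:
    """Bytes for the KV cache when the context holds `context_tokens` tokens."""
    # Factor 2 covers both the key and the value tensors in every layer.
    per_token = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * KV_BYTES_PER_VALUE
    return per_token * context_tokens


if __name__ == "__main__":
    kv = kv_cache_bytes(4096)
    for name in BITS_PER_WEIGHT:
        w = weight_bytes(name)
        print(f"{name:>4}: weights ~{w / GIB:.1f} GiB + 4k KV cache ~{kv / GIB:.2f} GiB")
```

Run as a script, this prints roughly 14.9 GiB of weights for fp16, 7.5 GiB for q8, and 3.7 GiB for q4, each plus about 0.50 GiB of KV cache for a full 4k window. Under these assumptions the q4 weights fit comfortably in 16GB of VRAM, while fp16 approaches that limit.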

Conclusion

Dolphin3 8B is a mid-scale large language model with 8 billion parameters and a 4k context length, developed by Cognitive Computations to prioritize local deployment and user-controlled customization. It targets coding, math, agentic tasks, and function calling, and is available in fp16, q4, and q8 versions, with the q4 variant requiring at least 16GB of VRAM.

References

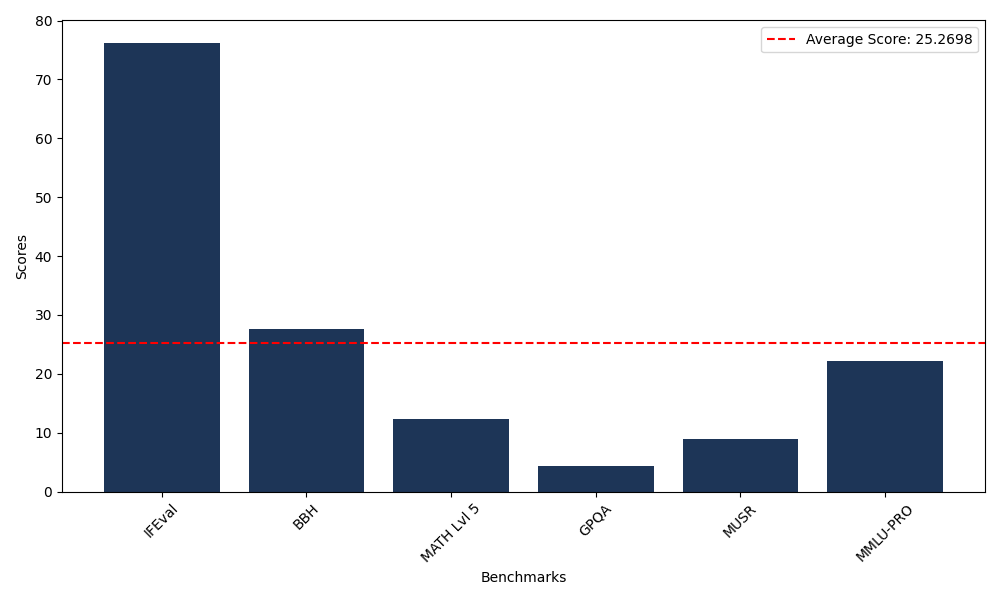

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 76.21 |
| Big-Bench Hard (BBH) | 27.63 |
| MATH benchmark, Level 5 problems (MATH Lvl 5) | 12.31 |
| Graduate-Level Google-Proof Q&A (GPQA) | 4.36 |
| Multistep Soft Reasoning (MuSR) | 8.97 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 22.13 |
