Exaone3.5 2.4B Instruct - Model Details

The Exaone3.5 2.4B Instruct is a large language model developed by LG AI Research with 2.4 billion parameters. It is released under the EXAONE AI Model License Agreement 1.1 - NC (EXAONE-AIMLA-11-NC) and is designed for bilingual (English-Korean) generative tasks. Because it is optimized for resource-constrained devices, it runs efficiently while retaining solid instruction-following and text-generation capabilities.

Description of Exaone3.5 2.4B Instruct

The EXAONE 3.5 series consists of instruction-tuned bilingual (English and Korean) generative models developed by LG AI Research, ranging from 2.4B to 32B parameters. The 2.4B model is specifically optimized for deployment on small or resource-constrained devices and supports a context length of 32,768 tokens. According to LG AI Research, it achieves state-of-the-art performance on real-world use cases and long-context understanding while remaining competitive in general domains with similarly sized models.

Parameters & Context Length of Exaone3.5 2.4B Instruct

With 2.4B parameters, the Exaone3.5 2.4B Instruct model belongs to the small-model category, which keeps it efficient enough for resource-constrained devices while remaining capable on specific tasks. Its 32k (32,768-token) context window counts as long-context, so it can handle extended texts and complex queries. Together, these properties let the model balance accessibility and functionality, making it suitable for applications that need both efficiency and extended contextual understanding.

- Parameter Size: 2.4B

- Context Length: 32k
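Because the context window is shared by the prompt and the generated output, a request only fits if the two together stay within 32,768 tokens (32k here means 2^15). The sketch below illustrates that budget check. `fits_in_context` and `max_prompt_tokens` are hypothetical helpers, and the sketch assumes the token counts come from the model's own tokenizer, which is not shown.

```python
# Context window of Exaone3.5 2.4B Instruct, as stated in this model card.
CONTEXT_LENGTH = 32_768


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Return True if the prompt plus the generation budget fits in the window.

    Hypothetical helper: token counts are assumed to come from the model's
    own tokenizer, which is not shown here.
    """
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= context_length


def max_prompt_tokens(max_new_tokens: int,
                      context_length: int = CONTEXT_LENGTH) -> int:
    """Largest prompt (in tokens) that still leaves room for max_new_tokens."""
    return max(context_length - max_new_tokens, 0)


if __name__ == "__main__":
    print(fits_in_context(30_000, 2_048))   # True  (32,048 <= 32,768)
    print(fits_in_context(31_000, 2_048))   # False (33,048 > 32,768)
    print(max_prompt_tokens(4_096))         # 28672
```

Reserving the generation budget up front, rather than truncating output afterwards, makes it explicit how much room is left for long documents in the prompt.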

Possible Intended Uses of Exaone3.5 2.4B Instruct

The Exaone3.5 2.4B Instruct model may be suited to text generation, bilingual (English-Korean) communication, and content creation. Possible uses include assisting with creative writing, generating summaries, and supporting cross-language interactions, such as translating between English and Korean or drafting educational materials for specific audiences. Its design for resource-constrained devices suggests it could be deployed where efficiency matters, but these uses remain theoretical until they are tested in real-world contexts.

- text generation

- multilingual communication

- content creation

Possible Applications of Exaone3.5 2.4B Instruct

The Exaone3.5 2.4B Instruct model could also support more specific applications. Its bilingual capabilities could assist creative writing in English and Korean, generate content for educational materials in either language, or translate between the two. It could help draft articles or scripts, and its optimization for resource-constrained devices suggests it could run inside lightweight applications that require efficient processing. These applications still need thorough evaluation to confirm they meet specific needs.

- text generation

- multilingual communication

- content creation

- educational material support

Quantized Versions & Hardware Requirements of Exaone3.5 2.4B Instruct

The q4 version of Exaone3.5 2.4B Instruct, a reasonable choice for balancing precision and performance, requires a GPU with at least 12GB VRAM and a system with 32GB RAM to run efficiently. The fp16 and q8 versions store more bits per weight than q4, so they need more memory than the q4 version.

- fp16, q4, q8
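A rough lower bound on memory for each format is the size of the weights alone: parameter count × bits per weight ÷ 8 bytes. The sketch below applies that formula to the nominal 2.4B parameters, assuming 16, 8, and 4 bits per weight for fp16, q8, and q4. Real quantized formats also store per-block scaling data, and inference needs additional memory for the KV cache, activations, and runtime, so actual requirements are higher than these figures.

```python
# Nominal parameter count taken from the model name; the exact count may differ.
NUM_PARAMS = 2.4e9

# Nominal bits per weight for each listed format. Assumption: real q4/q8
# formats also store per-block scales, so their true footprint is a bit higher.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for fmt, bits in BITS_PER_WEIGHT.items():
        print(f"{fmt}: ~{weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Under these assumptions it prints roughly 4.8 GB for fp16, 2.4 GB for q8, and 1.2 GB for q4.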

Conclusion

The Exaone3.5 2.4B Instruct is a large language model developed by LG AI Research, with 2.4B parameters, optimized for bilingual (English-Korean) generative tasks on resource-constrained devices. It is released under the EXAONE AI Model License Agreement 1.1 - NC (EXAONE-AIMLA-11-NC) and supports a 32,768-token context length, making it suitable for efficient and versatile language applications.

References

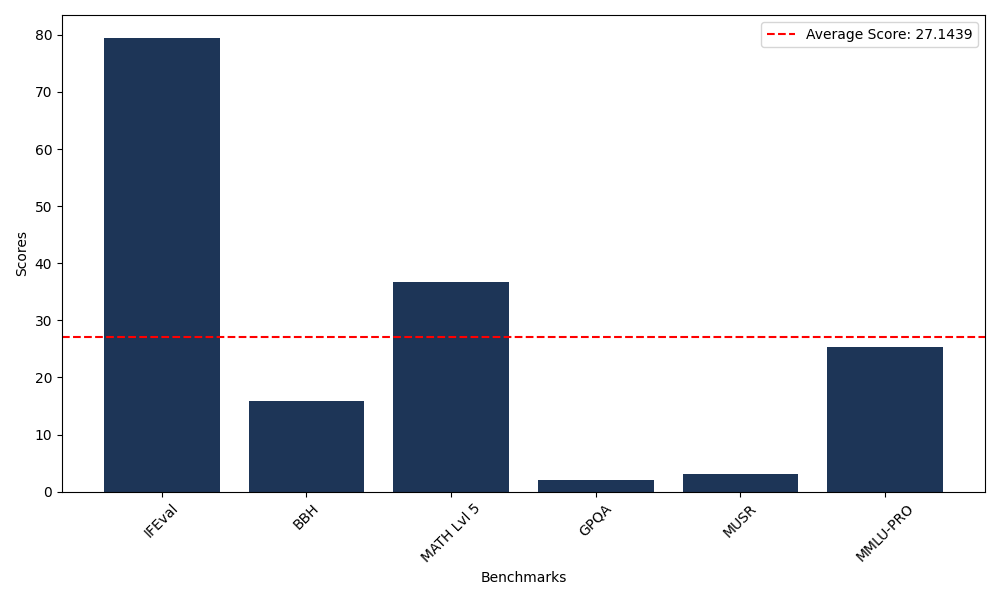

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 79.50 |
| Big-Bench Hard (BBH) | 15.95 |
| MATH, Level 5 problems (MATH Lvl 5) | 36.78 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.13 |
| Multistep Soft Reasoning (MuSR) | 3.17 |
| Massive Multitask Language Understanding, Pro version (MMLU-Pro) | 25.34 |
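One simple way to summarize the six benchmark scores in a single number is their unweighted mean. The sketch below assumes equal weighting, which may not match how a given leaderboard aggregates results. With the scores in the table, it comes to about 27.1.

```python
from statistics import mean

# Scores copied from the benchmark table in this model card.
SCORES = {
    "IFEval": 79.50,
    "BBH": 15.95,
    "MATH Lvl 5": 36.78,
    "GPQA": 2.13,
    "MuSR": 3.17,
    "MMLU-Pro": 25.34,
}


def unweighted_average(scores: dict[str, float]) -> float:
    """Plain arithmetic mean of the benchmark scores (equal weights assumed)."""
    return mean(scores.values())


if __name__ == "__main__":
    print(f"{unweighted_average(SCORES):.1f}")
```

The mean is pulled up by the strong IFEval result, so it hides the much lower GPQA and MuSR scores. Reading the per-benchmark numbers alongside it is more informative.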
