Exaone3.5 7.8B Instruct - Model Details

The Exaone3.5 7.8B Instruct is a large language model developed by LG AI Research, with 7.8 billion parameters. It is released under the EXAONE AI Model License Agreement 1.1 - NC (EXAONE-AIMLA-11-NC) and is tuned for instruction-following tasks. The model is a bilingual generative model for English and Korean, with optimizations tailored for deployment on resource-constrained devices.

Description of Exaone3.5 7.8B Instruct

EXAONE 3.5 is a collection of instruction-tuned bilingual (English and Korean) generative models ranging from 2.4B to 32B parameters. The 7.8B model supports a context length of up to 32,768 tokens, which lets it work over long inputs in a single pass. Its developers report state-of-the-art performance in real-world use cases and long-context understanding, which makes it a candidate for scenarios that require analysis of extensive text.

Parameters & Context Length of Exaone3.5 7.8B Instruct

The Exaone3.5 7.8B Instruct model has 7.8B parameters, placing it in the mid-scale category of open-source LLMs, where it balances output quality against resource use for tasks of moderate complexity. Its 32k-token context length falls into the long-context range: it can take in extended texts, but filling that window requires significant memory and compute. Together, these properties suit applications that analyze lengthy content while remaining efficient enough for broader use.

- Parameter Size: 7.8B

- Context Length: 32k tokens (32,768)
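
As a rough illustration of what the 32,768-token limit means in practice, the sketch below checks whether a prompt leaves enough room for a reply. It assumes that prompt tokens and generated tokens share the same window, which is the usual behavior for decoder-only models. The function names are hypothetical and not part of any EXAONE tooling.

```python
CONTEXT_LENGTH = 32_768  # tokens, as stated for the 7.8B model


def max_prompt_tokens(max_new_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many prompt tokens fit once room for the reply is reserved."""
    if max_new_tokens < 0 or max_new_tokens > context_length:
        raise ValueError("max_new_tokens must be between 0 and context_length")
    return context_length - max_new_tokens


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Check whether prompt plus reply stay within the context window."""
    return prompt_tokens <= max_prompt_tokens(max_new_tokens, context_length)


# Reserving 1,024 tokens for the answer leaves 31,744 for the prompt.
print(max_prompt_tokens(1024))
```

Token counts depend on the model's tokenizer, so the prompt length should be measured with that tokenizer rather than estimated from character counts.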

Possible Intended Uses of Exaone3.5 7.8B Instruct

The Exaone3.5 7.8B Instruct model, designed for English and Korean, has possible applications in text generation, translation, and content creation. Its bilingual capabilities could support drafting documents in either language, translating between the two, or assisting with creative content development. Because the model targets only English and Korean, it is unlikely to suit tasks in other languages, though the narrow focus may improve precision within those two. These uses are not validated here and need further evaluation against specific requirements and constraints before being relied on in real-world settings.

- text generation

- translation

- content creation
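
For the translation use case, a minimal sketch of how a request might be framed as chat messages is shown below. The role/content message layout is the common convention for instruction-tuned chat models. The helper name and the system prompt wording are assumptions for illustration, not a documented EXAONE prompt.

```python
SUPPORTED_LANGUAGES = {"English", "Korean"}  # the two languages the model targets


def build_translation_messages(text: str, source: str = "English",
                               target: str = "Korean") -> list[dict]:
    """Build a chat-style message list asking for a translation (hypothetical prompt)."""
    if source not in SUPPORTED_LANGUAGES or target not in SUPPORTED_LANGUAGES:
        raise ValueError("only English and Korean are supported")
    if source == target:
        raise ValueError("source and target languages must differ")
    return [
        {"role": "system",
         "content": f"You are a translator. Translate the user's text from {source} to {target}."},
        {"role": "user", "content": text},
    ]


messages = build_translation_messages("The meeting starts at nine.")
print(messages)
```

A list like this can typically be passed to a chat-template-aware tokenizer, for example Hugging Face's `tokenizer.apply_chat_template(messages, add_generation_prompt=True)`, before generation.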

Possible Applications of Exaone3.5 7.8B Instruct

The Exaone3.5 7.8B Instruct model has possible applications in text generation, translation, content creation, and dialogue systems, drawing on its bilingual capabilities in English and Korean. Examples include drafting documents in either language, translating between the two, assisting with creative content development, and powering conversational interfaces. The model's focus on two languages may limit its suitability for tasks in other languages, but it fits scenarios where language-specific optimization matters. Each application should be tested and validated against specific needs and constraints before deployment.

- text generation

- translation

- content creation

- dialogue systems

Quantized Versions & Hardware Requirements of Exaone3.5 7.8B Instruct

The q4 (4-bit) quantized version of Exaone3.5 7.8B Instruct is listed as requiring a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of mid-range graphics cards. Quantization trades some numerical precision for lower memory use and compute cost compared with higher-precision formats such as fp16. Users should confirm that their hardware meets this requirement to avoid performance bottlenecks. The available versions are:

- fp16, q4, q8
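
To show why precision matters for memory, the sketch below estimates the size of the weights alone at each listed precision. It assumes nominal bit widths of 16, 8, and 4 bits per weight. Real quantized files also store scaling metadata, and inference needs further memory for the KV cache and runtime buffers, so these figures are lower bounds rather than hardware requirements.

```python
PARAMS = 7.8e9  # parameter count stated for the model

# Nominal bits per weight for each listed version (an assumption; actual
# quantization formats carry some extra per-block metadata).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_memory_gb(PARAMS, bits):.1f} GB for weights only")
```

Under these assumptions, halving the bit width halves the weight footprint, which is the main reason quantized versions fit on smaller GPUs.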

Conclusion

The Exaone3.5 7.8B Instruct is a large language model with 7.8 billion parameters and a 32k-token context length, built for bilingual English-Korean tasks. It is released under the EXAONE AI Model License Agreement 1.1 - NC and is designed for resource-constrained devices, with a focus on real-world applications and long-context understanding.

References

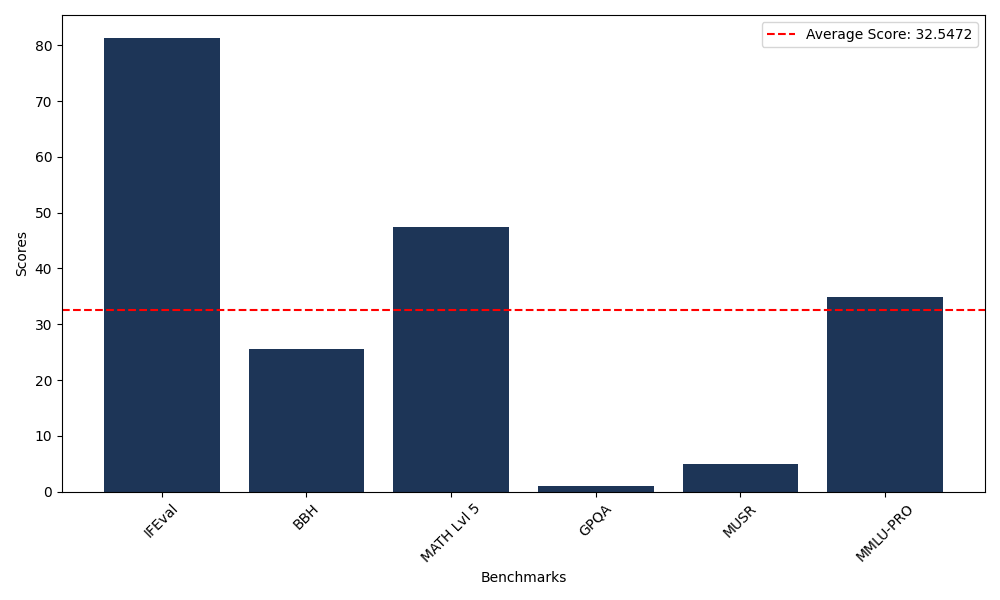

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 81.36 |
| BIG-Bench Hard (BBH) | 25.65 |
| MATH, Level 5 problems (MATH Lvl 5) | 47.51 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.01 |
| Multistep Soft Reasoning (MuSR) | 4.94 |
| Massive Multitask Language Understanding, Pro version (MMLU-PRO) | 34.81 |
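
One simple way to summarize the table is an unweighted mean of the six scores, sketched below. This treats every benchmark as equally important and assumes the scores share a common scale, which the table does not confirm.

```python
from statistics import mean

# Scores copied from the benchmark table above.
scores = {
    "IFEval": 81.36,
    "BBH": 25.65,
    "MATH Lvl 5": 47.51,
    "GPQA": 1.01,
    "MUSR": 4.94,
    "MMLU-PRO": 34.81,
}

mean_score = mean(scores.values())
best = max(scores, key=scores.get)    # benchmark with the highest score
worst = min(scores, key=scores.get)   # benchmark with the lowest score

print(f"mean: {mean_score:.2f}, highest: {best}, lowest: {worst}")
```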
