Falcon 40B - Model Details

Falcon 40B is a large language model developed by the Technology Innovation Institute, featuring 40 billion parameters and released under the Apache License 2.0. This model is part of the Falcon series, which includes the Falcon 180B, known for its state-of-the-art performance across natural language tasks and its availability as an open-source resource.

Description of Falcon 40B

Falcon-40B is a 40B-parameter causal decoder-only model developed by the Technology Innovation Institute (TII). It was trained on 1,000B tokens of RefinedWeb data enhanced with curated corpora, a mix chosen for quality and diversity. The model is released as open source under the Apache 2.0 license, which allows broad access and use. Its architecture and training approach emphasize scalability and performance across natural language tasks.

Parameters & Context Length of Falcon 40B

Falcon 40B has 40B parameters and a 2k context length, which places it among large models by parameter count and at the short end of the range for context. The parameter count supports robust performance on complex tasks but requires significant computational resources, while the 2k context length means that inputs longer than the window must be truncated or split. The model is therefore better suited to tasks that need depth on short to moderate inputs than to tasks that span very long texts.

- Name: Falcon 40B

- Parameter Size: 40B

- Context Length: 2k

- Implications: Large parameters for complex tasks, short context for limited sequence handling.
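To make the context limit concrete, the sketch below splits a long input into pieces that fit a fixed token budget while reserving room for generated output. It assumes that "2k" means a 2,048-token window and uses whitespace splitting as a stand-in for the model's real tokenizer, so the counts are illustrative only. The names `chunk_for_context` and `reserve_for_output` are hypothetical and not part of any Falcon tooling.

```python
CONTEXT_LENGTH = 2048  # assumed window size for the "2k" context length


def chunk_for_context(text, context_length=CONTEXT_LENGTH, reserve_for_output=256):
    """Split text into chunks that leave room for generated tokens.

    Whitespace splitting is a stand-in for the model's real tokenizer,
    which usually produces more tokens than words.
    """
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_length")
    tokens = text.split()
    # Step through the token list in budget-sized slices.
    return [" ".join(tokens[i:i + budget]) for i in range(0, len(tokens), budget)]


if __name__ == "__main__":
    document = "word " * 5000
    chunks = chunk_for_context(document)
    print(len(chunks), [len(c.split()) for c in chunks])
```

In practice the same budgeting would be done with the model's own tokenizer, since a word-based count tends to underestimate the number of tokens.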

Possible Intended Uses of Falcon 40B

Falcon 40B is a 40B-parameter multilingual language model intended for research on large language models and for specialization on tasks such as summarization, text generation, and chatbot development. It supports German, Swedish, English, Spanish, Portuguese, Polish, Dutch, Czech, French, Italian, and Romanian, which suggests possible applications in cross-lingual research or content creation. Because the model's capabilities may vary across tasks and languages, each possible use should be evaluated against its specific requirements, and further testing is necessary to confirm effectiveness in real-world scenarios.

- Name: Falcon 40B

- Intended Uses: research on large language models, specialization for tasks like summarization, text generation, chatbot development

- Supported Languages: German, Swedish, English, Spanish, Portuguese, Polish, Dutch, Czech, French, Italian, Romanian

- Multilingual: yes

Possible Applications of Falcon 40B

Falcon 40B could support applications such as research on large language models, cross-lingual text generation, automated summarization of multilingual content, and development of chatbots for general-purpose interactions. Its multilingual capabilities and large parameter size suggest uses in tasks that require nuanced language understanding or adaptation across languages, though these would need rigorous testing to confirm suitability. The model may also suit creative or exploratory projects, but each use case must be carefully assessed before deployment.

- Name: Falcon 40B

- Possible Applications: research on large language models, cross-lingual text generation, automated summarization of multilingual content, development of chatbots for general-purpose interactions

- Multilingual Support: yes

- Parameter Size: 40B

Quantized Versions & Hardware Requirements of Falcon 40B

The q4 version of Falcon 40B, a mid-range trade-off between precision and performance, requires a GPU with at least 32GB of VRAM, which limits it to systems with high-end graphics cards. This quantized variant uses less memory than the full-precision model, but the 40B parameter count still demands significant computational resources. Users should verify hardware compatibility before deployment, as actual requirements may vary with the specific configuration.

- Quantized Versions: fp16, q4, q5, q8

- Name: Falcon 40B

- Parameter Size: 40B

- Hardware Requirement: GPU with at least 32GB VRAM for q4 version
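The relationship between quantization level and memory can be approximated with simple arithmetic: weight storage is roughly the parameter count times the bits per weight, divided by 8 to get bytes. The sketch below applies that to the listed versions. The bits-per-weight values are nominal assumptions (real quantized formats store extra scaling data), and the result covers weights only, not activations or the attention cache, which is one reason the stated 32GB requirement for q4 exceeds the raw weight figure. `weight_memory_gb` is a hypothetical helper name.

```python
PARAMS = 40e9  # nominal parameter count ("40B")

# Nominal bits per weight; real quantized formats store extra scale data,
# so actual files are somewhat larger. These values are assumptions.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q5": 5, "q4": 4}


def weight_memory_gb(params, bits_per_weight):
    """Return approximate weight storage in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{weight_memory_gb(PARAMS, bits):.0f} GB for weights alone")
```

Under these assumptions the q4 weights come to about 20 GB and the fp16 weights to about 80 GB, so the fp16 version would not fit on a single 32GB GPU.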

Conclusion

Falcon 40B is a 40B-parameter large language model developed by the Technology Innovation Institute, released under the Apache 2.0 license, and designed for multilingual tasks with support for 11 languages. Its high parameter count and open-source availability make it suitable for research and for specialized applications such as text generation and chatbot development.

References

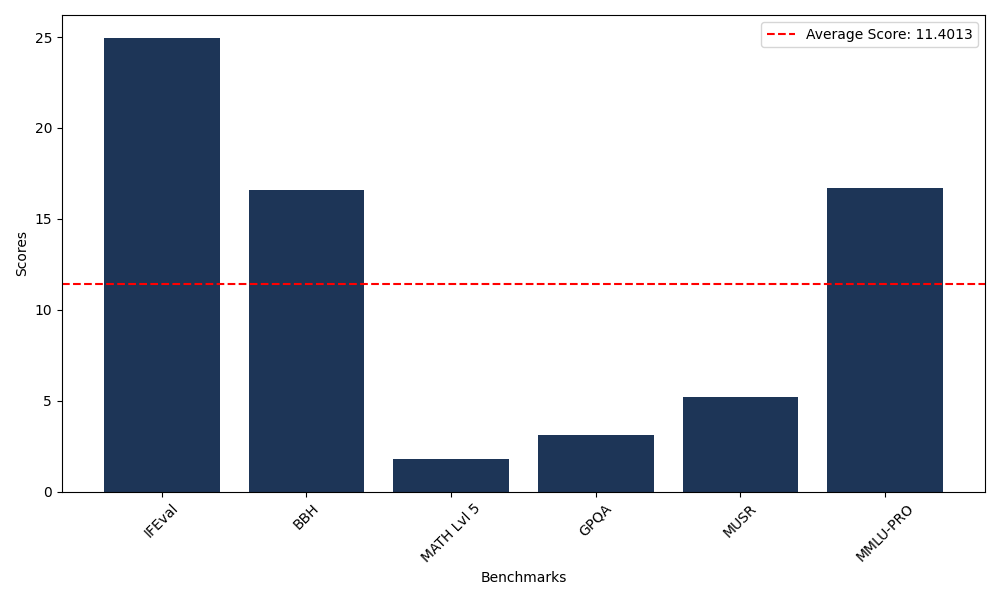

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 24.96 |
| Big Bench Hard (BBH) | 16.58 |
| Mathematical Reasoning Test (MATH Lvl 5) | 1.81 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.13 |
| Multistep Soft Reasoning (MuSR) | 5.19 |
| Massive Multitask Language Understanding (MMLU-PRO) | 16.72 |
