Falcon 40B Instruct - Model Details

Falcon 40B Instruct is a large language model developed by the Technology Innovation Institute (TII). It has 40 billion parameters and is released under the Apache License 2.0. It is tuned for instruction-following tasks and belongs to the Falcon series, which also includes Falcon 180B, a model noted for state-of-the-art performance across natural language tasks.

Description of Falcon 40B Instruct

Falcon 40B Instruct is a 40B-parameter causal decoder-only model developed by the Technology Innovation Institute. It is built on Falcon-40B and fine-tuned on a data mixture that includes Baize to improve instruction following. The model is available under the Apache 2.0 license, which permits both research and commercial use. Its architecture is optimized for inference with FlashAttention and multi-query attention, which improve efficiency and scalability for large-scale natural language processing workloads.
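To make the efficiency claim about multi-query attention concrete, the sketch below compares key/value (KV) cache sizes during generation. In standard multi-head attention every query head has its own key and value heads. Multi-query attention shares one key/value head across all query heads, so the cache that grows with sequence length shrinks by the number of heads. The layer count, head count, head dimension, and 2-byte (fp16) element size are illustrative assumptions, not values read from the released checkpoint, and FlashAttention is not modeled here.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Return the bytes needed to cache keys and values during generation.

    The leading factor of 2 accounts for storing both the K and V tensors.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


# Illustrative configuration: assumed values, not read from the released checkpoint.
N_LAYERS = 60
N_QUERY_HEADS = 128
HEAD_DIM = 64
SEQ_LEN = 2048  # assumes the 2k context length means 2,048 tokens

# Multi-head attention: one key/value head per query head.
mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Multi-query attention: a single key/value head shared by all query heads.
mqa = kv_cache_bytes(N_LAYERS, 1, HEAD_DIM, SEQ_LEN)

print(f"MHA KV cache: {mha / 2**30:.2f} GiB")
print(f"MQA KV cache: {mqa / 2**30:.3f} GiB")
print(f"Reduction factor: {mha // mqa}x")
```

With these assumed values the cache shrinks by a factor equal to the number of query heads. Grouped variants that keep a small number of key/value heads fall between the two extremes.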

Parameters & Context Length of Falcon 40B Instruct

Falcon 40B Instruct has 40 billion parameters, which places it in the large-scale category. It can handle intricate tasks, but it needs substantial computational power. Its 2k-token context window handles short to moderate-length inputs well, which suits dialogue and concise text generation, but the model is less effective on long sequences. In short, the parameter count supports nuanced understanding of language patterns, while the short context window limits long-form use.

- Parameter Size: 40B

- Context Length: 2k
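Because the window is short, longer documents have to be split before they reach the model. The sketch below assumes the input has already been tokenized and treats "2k" as 2,048 tokens. It cuts the input into overlapping windows of at most that size. `chunk_tokens`, the 128-token overlap, and the whitespace "tokenizer" in the usage block are hypothetical choices for illustration. In practice, each window should also leave room for the prompt template and the generated output.

```python
from typing import List, TypeVar

T = TypeVar("T")


def chunk_tokens(tokens: List[T], window: int = 2048, overlap: int = 128) -> List[List[T]]:
    """Split a token list into overlapping windows of at most `window` tokens."""
    if window <= 0:
        raise ValueError("window must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    step = window - overlap
    chunks: List[List[T]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # this window already reaches the end of the input
    return chunks


if __name__ == "__main__":
    # Whitespace splitting is only a rough, hypothetical stand-in for the model's tokenizer.
    words = ("lorem " * 5000).split()
    for i, chunk in enumerate(chunk_tokens(words)):
        print(i, len(chunk))
```

The overlap repeats a few tokens at each boundary so that a sentence cut by one window still appears whole in the next.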

Possible Intended Uses of Falcon 40B Instruct

Falcon 40B Instruct is designed for natural language processing tasks, with possible uses including chatbots and text generation. Its 40 billion parameters and 2k-token context window suggest it could generate coherent responses in interactive systems or produce structured content. Each of these uses still needs thorough evaluation against its specific requirements. Because the model focuses mainly on English and French, it may be less suited to broader multilingual scenarios, although it can still serve tasks in those languages. Further research is needed to confirm its effectiveness in real-world deployments.

- chatbots

- text generation

- natural language processing tasks
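For chatbot use, the short context window means older conversation turns must eventually be dropped. The sketch below keeps the newest exchanges that fit while reserving room for the reply. The "User:/Assistant:" template, the `build_prompt` helper, the 256-token reply budget, and the word-count tokenizer are all hypothetical assumptions, not an official prompt format or API for this model.

```python
from typing import Callable, List, Tuple

CONTEXT_LIMIT = 2048  # assumes "2k" means 2,048 tokens


def build_prompt(history: List[Tuple[str, str]], user_msg: str,
                 count_tokens: Callable[[str], int],
                 max_new_tokens: int = 256) -> str:
    """Assemble a chat prompt, dropping the oldest exchanges until it fits.

    The "User:/Assistant:" template is hypothetical, not an official format.
    """
    budget = CONTEXT_LIMIT - max_new_tokens  # reserve room for the reply
    turns = list(history)
    while True:
        lines = []
        for user, assistant in turns:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {user_msg}")
        lines.append("Assistant:")
        prompt = "\n".join(lines)
        if count_tokens(prompt) <= budget:
            return prompt
        if not turns:
            raise ValueError("the latest message alone exceeds the token budget")
        turns.pop(0)  # forget the oldest exchange first


if __name__ == "__main__":
    def approx_tokens(text: str) -> int:
        # Rough stand-in for a real tokenizer: count whitespace-separated words.
        return len(text.split())

    history = [("Summarize " * 1000, "Summary " * 1000), ("Hello", "Hi, how can I help?")]
    print(build_prompt(history, "What is Falcon 40B?", approx_tokens))
```

Dropping whole exchanges from the front keeps the most recent context intact. A real deployment would count tokens with the model's own tokenizer instead.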

Possible Applications of Falcon 40B Instruct

Falcon 40B Instruct has possible applications in chatbots, text generation, general natural language processing tasks, and language-specific content creation. Its 40 billion parameters and 2k-token context window suggest it could support interactive dialogue systems, automated content drafting, or task-oriented NLP workflows. These applications need careful assessment against specific needs. In particular, the model's focus on English and French may limit its suitability for other languages. How well it performs in practice depends on further testing and adaptation to real-world requirements.

- chatbots

- text generation

- natural language processing tasks

- language-specific content creation

Quantized Versions & Hardware Requirements of Falcon 40B Instruct

The medium q4 version of Falcon 40B Instruct requires a GPU with at least 32GB of VRAM and a system with 32GB of RAM to run efficiently, so it targets machines with high-end graphics cards. This quantized variant trades some precision for a much smaller memory footprint than the full-precision version while keeping reasonable computational efficiency. On such hardware it can handle complex natural language tasks, but users should verify compatibility with their specific setup.

- Available versions: fp16, q4, q5, q8
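A back-of-the-envelope estimate helps explain these hardware figures. The weights alone need roughly (parameter count × bits per weight) / 8 bytes. The sketch below assumes nominal bit widths for each version. Real quantized formats also store per-block scale factors, and inference needs extra memory for the KV cache, activations, and runtime overhead, so actual requirements are higher than these numbers.

```python
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q5": 5, "q4": 4}  # nominal widths (assumed)
N_PARAMS = 40e9  # nominal 40 billion parameters


def weight_memory_gb(n_params: float, bits: float) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name:>4}: ~{weight_memory_gb(N_PARAMS, bits):.0f} GB of weights")
```

Under these assumptions the q4 weights come to about 20 GB, which leaves headroom within a 32GB card for the cache and overhead. The fp16 weights need about 80 GB.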

Conclusion

Falcon 40B Instruct is a 40-billion-parameter large language model from the Technology Innovation Institute. It is released under the Apache License 2.0 and optimized for inference with FlashAttention and multi-query attention. As part of the Falcon series, it is tuned for instruction-following tasks and balances performance and efficiency in natural language processing.

References

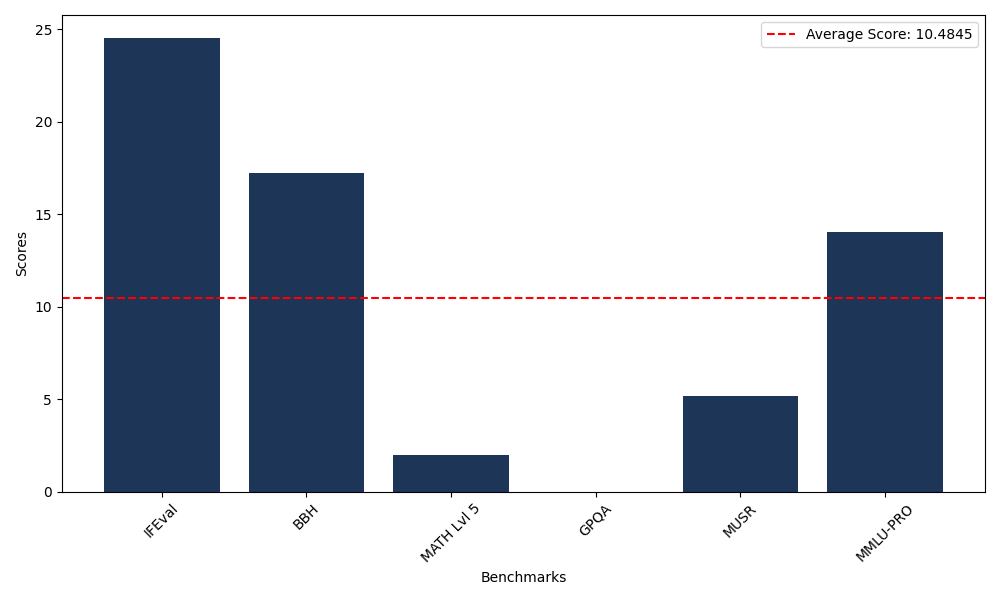

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 24.54 |
| Big-Bench Hard (BBH) | 17.22 |
| MATH, Level 5 subset (MATH Lvl 5) | 1.96 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 5.16 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 14.02 |
