Falcon 7B - Model Details

Falcon 7B is a large language model developed by the Technology Innovation Institute (TII), a research institute focused on advancing AI. With 7B parameters, it handles a broad range of natural language tasks. It is released under the Apache License 2.0, which permits open access and commercial use. Falcon 180B is a larger model in the same series; Falcon 7B is the smaller option with lighter hardware demands.

Description of Falcon 7B

Falcon 7B is a 7B-parameter causal decoder-only model developed by the Technology Innovation Institute. It was trained on 1,500B tokens of RefinedWeb enhanced with curated corpora. Its architecture is optimized for inference, using FlashAttention and multiquery attention, in which all query heads share a single key/value head. It is available under the Apache 2.0 license and is reported to outperform comparable open-source models, which makes it a candidate for research, fine-tuning, and specialized applications.
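To make the efficiency claim for multiquery attention concrete, the sketch below compares the size of the key/value cache when every query head has its own key/value head against the multiquery case, where a single key/value head is shared. The layer count, head count, and head dimension are assumptions chosen to resemble a model of this size; they are not stated in this article, and the function name `kv_cache_bytes` is hypothetical.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence.

    The leading factor 2 accounts for storing one key tensor and one
    value tensor per layer. bytes_per_value=2 corresponds to fp16/bf16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed shape, for illustration only (not taken from this article).
N_LAYERS = 32
N_QUERY_HEADS = 71
HEAD_DIM = 64
SEQ_LEN = 2048  # the 2k context length, read as 2,048 tokens

# Standard multi-head attention: one key/value head per query head.
multi_head = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, SEQ_LEN)

# Multiquery attention: all query heads share one key/value head.
multi_query = kv_cache_bytes(N_LAYERS, 1, HEAD_DIM, SEQ_LEN)

print(f"multi-head KV cache : {multi_head / 2**20:.0f} MiB")
print(f"multiquery KV cache : {multi_query / 2**20:.0f} MiB")
print(f"reduction factor    : {multi_head // multi_query}x")
```

Under these assumptions the cache shrinks by a factor equal to the number of query heads, which is the main reason multiquery attention reduces memory traffic during generation.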

Parameters & Context Length of Falcon 7B

Falcon 7B is a 7B-parameter model with a 2k-token context length. The parameter count places it in the small to mid-scale range, so it offers faster inference and lower resource demands than larger models. The 2k context is sufficient for short to moderately long texts, but longer documents must be truncated or split before the model can process them. This trade-off makes Falcon 7B a reasonable fit for research, fine-tuning, and specialized use cases where efficiency and accessibility matter more than long-context performance.

- Parameter Size: 7B

- Context Length: 2k
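One common way to work within a fixed context length is to split a long token sequence into windows that each fit. The following is a minimal sketch, assuming "2k" means 2,048 tokens and that the text has already been tokenized into a list of integer IDs; the helper name `split_into_windows` and the optional overlap are illustrative choices, not part of any Falcon tooling.

```python
from typing import List

CONTEXT_LENGTH = 2048  # assumption: "2k" is read as 2,048 tokens


def split_into_windows(token_ids: List[int],
                       window: int = CONTEXT_LENGTH,
                       overlap: int = 0) -> List[List[int]]:
    """Split token IDs into consecutive windows of at most `window` tokens.

    `overlap` tokens are repeated at the start of each following window so
    that some context carries over between windows.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")

    step = window - overlap
    windows: List[List[int]] = []
    for start in range(0, len(token_ids), step):
        windows.append(token_ids[start:start + window])
        # Stop once this window already reaches the end of the input.
        if start + window >= len(token_ids):
            break
    return windows


# Usage: a 5,000-token document becomes three windows.
document = list(range(5000))
chunks = split_into_windows(document)
print([len(chunk) for chunk in chunks])
```

Each window can then be processed separately. How to combine the per-window results depends on the task and is outside the scope of this sketch.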

Possible Intended Uses of Falcon 7B

Falcon 7B is a 7B-parameter model intended for research on large language models and as a base for specialization and fine-tuning on specific use cases such as summarization, text generation, and chatbots. It is listed as supporting German, Swedish, English, Spanish, Portuguese, Polish, Dutch, Czech, French, Italian, and Romanian, which makes it a possible tool for exploring cross-lingual tasks. Inference and fine-tuning for other NLP tasks are also possible, but each use should be evaluated against its specific goals, and the model's effectiveness in real-world settings needs to be validated through testing.

- research on large language models

- foundation for further specialization and fine-tuning for specific use cases

- inference and fine-tuning for various NLP tasks

Possible Applications of Falcon 7B

Falcon 7B is a 7B-parameter model with support for German, Swedish, English, and other languages. Possible applications include research on language model capabilities, customization for specific domains through fine-tuning, text generation, chatbot development, and inference for general NLP tasks. The model's flexibility suggests it can be adapted to a range of contexts, but each application should be evaluated for suitability and tested rigorously before deployment.

- research on large language models

- customization for specific domains through fine-tuning

- text generation and chatbot development

- inference for general NLP tasks
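As an illustration of the inference use case listed above, here is a minimal sketch using the Hugging Face `transformers` library. It assumes the model is published under the repository ID `tiiuae/falcon-7b`, that `torch` and `transformers` are installed, and that the machine has enough memory to hold the weights in bfloat16; none of this is stated in the article. The helper `cap_new_tokens` is hypothetical and only keeps the prompt plus the generated text inside the 2k context.

```python
def cap_new_tokens(prompt_tokens: int, requested: int,
                   context_length: int = 2048) -> int:
    """Limit generated tokens so prompt + output fits the context length."""
    remaining = context_length - prompt_tokens
    return max(0, min(requested, remaining))


def generate(prompt: str, max_new_tokens: int = 64,
             model_id: str = "tiiuae/falcon-7b") -> str:
    """Generate a continuation of `prompt` (downloads the model on first use)."""
    # Imported lazily so the helper above can be used without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.bfloat16
    )

    inputs = tokenizer(prompt, return_tensors="pt")
    budget = cap_new_tokens(inputs["input_ids"].shape[1], max_new_tokens)
    if budget == 0:
        raise ValueError("prompt already fills the context length")

    with torch.no_grad():
        output_ids = model.generate(
            input_ids=inputs["input_ids"],
            attention_mask=inputs["attention_mask"],
            max_new_tokens=budget,
            do_sample=False,  # greedy decoding for reproducible output
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Usage (not executed here because it downloads several gigabytes):
# print(generate("Summarize in one sentence: Falcon 7B is"))
```

Because this is a base model rather than an instruction-tuned one, prompts phrased as text to be continued tend to work better than direct instructions.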

Quantized Versions & Hardware Requirements of Falcon 7B

Falcon 7B’s q4 quantized version is listed as requiring a GPU with at least 16GB of VRAM and 32GB of system memory, which puts it within reach of consumer-grade GPUs such as the RTX 3090. Because q4 stores weights at lower precision than fp16, it uses less memory, which helps where resources are constrained. Actual performance varies with the hardware configuration, so users should also confirm that their system’s cooling and power supply can sustain the GPU under load.

- Available versions: fp16, q4, q5, q8
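The memory saving from quantization can be estimated with simple arithmetic: parameter count times bits per weight, divided by eight. The sketch below applies this to the four listed versions, assuming exactly 7 billion parameters and the nominal bit widths implied by the names (16, 4, 5, and 8). Real quantized files are somewhat larger because they also store scaling metadata, and the figures cover weights only, not the KV cache, activations, or runtime overhead.

```python
PARAMETERS = 7_000_000_000  # assumption: "7B" taken as exactly 7 billion

# Nominal bits per weight implied by each version name (assumption).
BITS_PER_WEIGHT = {"fp16": 16, "q4": 4, "q5": 5, "q8": 8}


def weight_memory_gb(parameters: int, bits: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return parameters * bits / 8 / 1e9


estimates = {
    name: weight_memory_gb(PARAMETERS, bits)
    for name, bits in BITS_PER_WEIGHT.items()
}

for name, gigabytes in estimates.items():
    print(f"{name:>4}: ~{gigabytes:.1f} GB for weights alone")
```

The gap between these weight-only estimates and a stated hardware requirement is the headroom left for everything else the runtime needs.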

Conclusion

Falcon 7B is a 7B-parameter language model developed by the Technology Innovation Institute, trained on 1,500B tokens with an architecture that uses FlashAttention and multiquery attention for efficient inference. It supports multiple languages, is released under the Apache 2.0 license, and is designed for research, fine-tuning, and specialized applications, offering a balance between performance and resource efficiency.

References

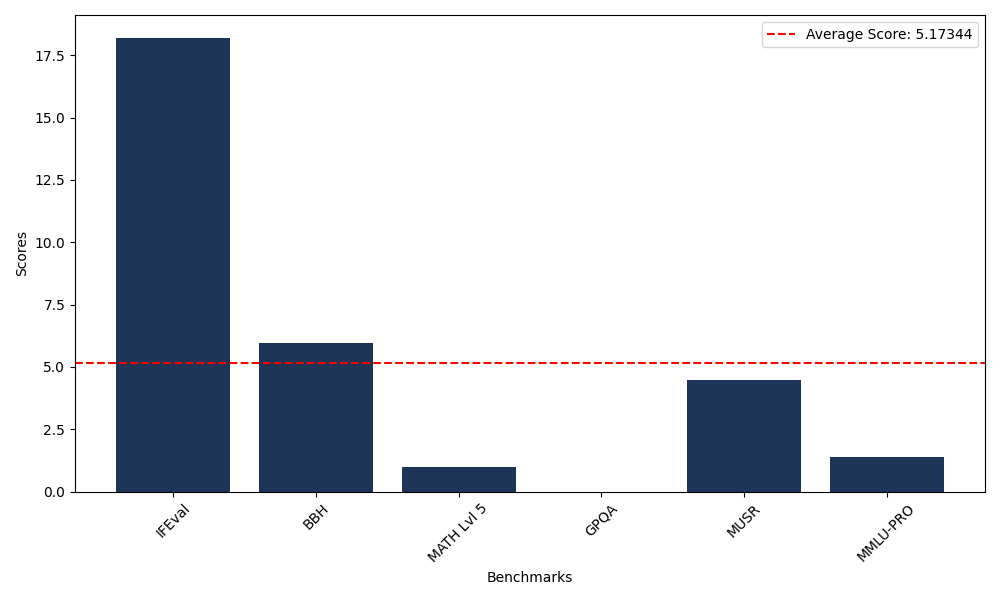

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 18.21 |
| BIG-Bench Hard (BBH) | 5.96 |
| MATH, Level 5 problems (MATH Lvl 5) | 0.98 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 4.50 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 1.39 |
