Falcon 7B Instruct - Model Details

Falcon 7B Instruct is a large language model developed by the Technology Innovation Institute (TII), a research institute known for its work in artificial intelligence. With 7B parameters, it is designed for instruction-following and general natural language processing tasks. The model is released under the Apache License 2.0, which permits open access and commercial use. It belongs to the Falcon series, which also includes larger models such as Falcon-40B and Falcon 180B, and aims to deliver strong performance in a more compact and efficient form.

Description of Falcon 7B Instruct

Falcon 7B Instruct is a 7B-parameter causal decoder-only model developed by the Technology Innovation Institute (TII). It is fine-tuned from Falcon-7B on a mixture of chat and instruct datasets to improve its performance in conversational and task-oriented scenarios. The underlying base model was trained on 1,500B tokens of RefinedWeb data, enhanced with curated corpora, to give it broad language understanding. The Falcon series also includes a larger variant, Falcon-40B-Instruct. The architecture is optimized for inference with FlashAttention and multiquery attention, which help reduce memory use and latency during generation and make the model practical for interactive applications. It is released under the Apache 2.0 license, allowing open access and commercial use.
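To see why multiquery attention matters for inference, the sketch below estimates the size of the key/value cache for a full 2k-token context. It assumes Falcon-7B's published configuration (32 layers, 71 query heads, head dimension 64) and fp16 storage; check these values against the checkpoint you actually use. The multi-head figure is a hypothetical baseline with one K/V head per query head, not a real Falcon variant.

```python
# Assumed Falcon-7B architecture values (taken from its public config;
# verify against the checkpoint you use).
N_LAYERS = 32
N_QUERY_HEADS = 71
HEAD_DIM = 64
BYTES_PER_VALUE = 2  # fp16/bf16 storage


def kv_cache_bytes(seq_len: int, n_kv_heads: int) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor 2: one cached tensor for keys and one for values, in every layer.
    return 2 * N_LAYERS * n_kv_heads * HEAD_DIM * BYTES_PER_VALUE * seq_len


def mib(n_bytes: int) -> float:
    """Convert bytes to mebibytes."""
    return n_bytes / 2**20


if __name__ == "__main__":
    seq_len = 2048  # the model's full context window
    mqa = kv_cache_bytes(seq_len, n_kv_heads=1)              # multiquery: one shared K/V head
    mha = kv_cache_bytes(seq_len, n_kv_heads=N_QUERY_HEADS)  # hypothetical multi-head baseline
    print(f"multiquery: {mib(mqa):.0f} MiB")
    print(f"multi-head: {mib(mha):.0f} MiB")
```

Under these assumptions, sharing a single K/V head shrinks the cache by a factor of 71 (about 16 MiB versus about 1.1 GiB for 2,048 tokens), which is part of what makes generation cheaper on modest GPUs.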

Parameters & Context Length of Falcon 7B Instruct

Falcon 7B Instruct has 7B parameters and a 2k (2,048-token) context window, placing it in the small-to-mid-scale category of open-source LLMs. At this size it runs quickly and with modest resources, which suits simple and moderately complex tasks. A 2k context is short: it works for brief prompts and answers, but the prompt and the generated output must share the same window, so the model cannot hold long documents or extended conversations. This makes it a good fit for applications that need quick inference without heavy computational demands, though it may struggle with complex or lengthy content.

- Name: Falcon 7B Instruct

- Parameter Size: 7B

- Context Length: 2k (2,048 tokens)

- Implications: Efficient for simple tasks, limited context for long texts
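Because the prompt and the output share the 2,048-token window, it helps to budget tokens before calling the model. This is a minimal sketch, assuming you already have token IDs from the model's tokenizer; the helper names `max_new_tokens_available` and `truncate_left` are hypothetical and not part of any library.

```python
CONTEXT_LENGTH = 2048  # Falcon 7B Instruct's context window, in tokens


def max_new_tokens_available(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Prompt and output share one window; a prompt that fills it leaves no room.
    return max(context_length - prompt_tokens, 0)


def truncate_left(token_ids: list[int], reserve_for_output: int,
                  context_length: int = CONTEXT_LENGTH) -> list[int]:
    """Keep only the most recent tokens so `reserve_for_output` tokens still fit."""
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output leaves no room for the prompt")
    # Slicing from the end drops the oldest tokens; short inputs are returned whole.
    return token_ids[-budget:]


if __name__ == "__main__":
    print(max_new_tokens_available(1800))            # room left after an 1,800-token prompt
    print(len(truncate_left(list(range(3000)), 256)))  # tokens kept when reserving 256
```

Dropping tokens from the left keeps the most recent context, which is usually what matters for the next answer; a summarization step is an alternative when older content must be preserved.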

Possible Intended Uses of Falcon 7B Instruct

Falcon 7B Instruct is a 7B-parameter model designed for chatbot development, text generation, and code generation. It supports English and French, although its training data is mostly English. With its 2k context length, it is a candidate for building conversational interfaces, generating short-form text, or assisting with small coding tasks. These uses still need validation against specific requirements, since the model's performance varies with task complexity. Its limited context length and parameter size suggest it is best suited to moderate-scale projects where efficiency and simplicity matter more than handling highly complex or long inputs.

- chatbot development

- text generation

- code generation
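For chatbot use, the model receives the conversation as plain text. The sketch below assumes a simple `User:`/`Assistant:` turn format (a common convention, not an official Falcon template) and drops the oldest exchanges until the prompt fits a token budget. The default `count_tokens` is a crude whitespace word count standing in for the real tokenizer, and `build_chat_prompt` is a hypothetical helper.

```python
from typing import Callable


def build_chat_prompt(
    system: str,
    turns: list[tuple[str, str]],
    user_message: str,
    max_prompt_tokens: int = 1536,  # 2,048-token window minus 512 reserved for the reply
    count_tokens: Callable[[str], int] = lambda s: len(s.split()),  # crude stand-in
) -> str:
    """Format a conversation as plain text, dropping the oldest turns first."""
    history = list(turns)  # copy so the caller's list is not modified
    while True:
        lines = [system]
        for user, assistant in history:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        lines.append(f"User: {user_message}")
        lines.append("Assistant:")  # the model continues the text from here
        prompt = "\n".join(lines)
        if count_tokens(prompt) <= max_prompt_tokens or not history:
            return prompt
        history.pop(0)  # forget the oldest exchange and try again


if __name__ == "__main__":
    print(build_chat_prompt("You are a helpful assistant.",
                            [("Hi", "Hello! How can I help?")],
                            "Summarize what a context window is."))
```

Swapping in the real tokenizer's token count for `count_tokens` makes the budget accurate; the trimming logic stays the same.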

Possible Applications of Falcon 7B Instruct

As a 7B-parameter model with a 2k context length, Falcon 7B Instruct has potential applications in chatbot development, text generation, and code generation. Its small footprint and permissive Apache 2.0 license make it practical for building conversational interfaces, generating text, or assisting with coding tasks, including in commercial products. Each use still needs careful evaluation against the target workload, because the short context and modest parameter count can limit performance in complex scenarios, and only testing can confirm whether the model is effective for a given task.

- chatbot development

- text generation

- code generation
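Since each application needs testing before deployment, a small evaluation loop is a reasonable starting point. In this sketch `generate` is a hypothetical callable wrapping whatever inference stack you use, each case carries its own pass/fail check, and the example prompts and stub generator are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True if the model output is acceptable


def evaluate(generate: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Run every prompt through `generate` and return the fraction that pass."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(1 for case in cases if case.check(generate(case.prompt)))
    return passed / len(cases)


if __name__ == "__main__":
    # Stub standing in for a real call to the model.
    def fake_generate(prompt: str) -> str:
        return "Paris" if "France" in prompt else "I don't know"

    cases = [
        EvalCase("What is the capital of France?", lambda out: "Paris" in out),
        EvalCase("What is the capital of Peru?", lambda out: "Lima" in out),
    ]
    print(f"pass rate: {evaluate(fake_generate, cases):.0%}")
```

Keeping the checks as plain functions lets the same loop cover chat, text, and code tasks; for code generation, a check could run the generated snippet against unit tests in a sandbox.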

Quantized Versions & Hardware Requirements of Falcon 7B Instruct

For Falcon 7B Instruct's medium q4 version, a GPU with 16GB of VRAM, a multi-core CPU, and 32GB of system memory are recommended for efficient inference. This quantized version trades a small amount of precision for lower memory use and faster performance, making it suitable for devices with moderate hardware. Actual requirements vary with workload, context length, and inference software, so check compatibility with your graphics card before deploying.

- Available versions: fp16, q4, q5, q8
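A back-of-envelope estimate of weight memory helps when choosing among these versions. The sketch assumes roughly 7 billion parameters and nominal bits per weight for each format; real quantized files carry extra scale metadata, and inference also needs memory for the KV cache and activations, so treat the results as lower bounds rather than exact requirements.

```python
PARAMS = 7e9  # approximate parameter count (assumption)

# Nominal bits per weight for each version. Real quantized files add some
# overhead for scales and metadata, so actual sizes are somewhat larger.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q5": 5, "q4": 4}


def weight_gib(fmt: str, params: float = PARAMS) -> float:
    """Approximate memory for the weights alone, in GiB."""
    return params * BITS_PER_WEIGHT[fmt] / 8 / 2**30


if __name__ == "__main__":
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>4}: ~{weight_gib(fmt):.1f} GiB")
```

Under these assumptions the weights take about 13 GiB in fp16 and about 3.3 GiB at q4, so a 16GB card leaves generous headroom for the q4 version.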

Conclusion

Falcon 7B Instruct is a 7B-parameter model developed by the Technology Innovation Institute, fine-tuned on chat/instruct datasets for instruction-following tasks, and released under the Apache 2.0 license. With FlashAttention and multiquery attention for efficient inference and a 2k context length, it suits applications that need efficient, accessible language processing on short inputs.

References

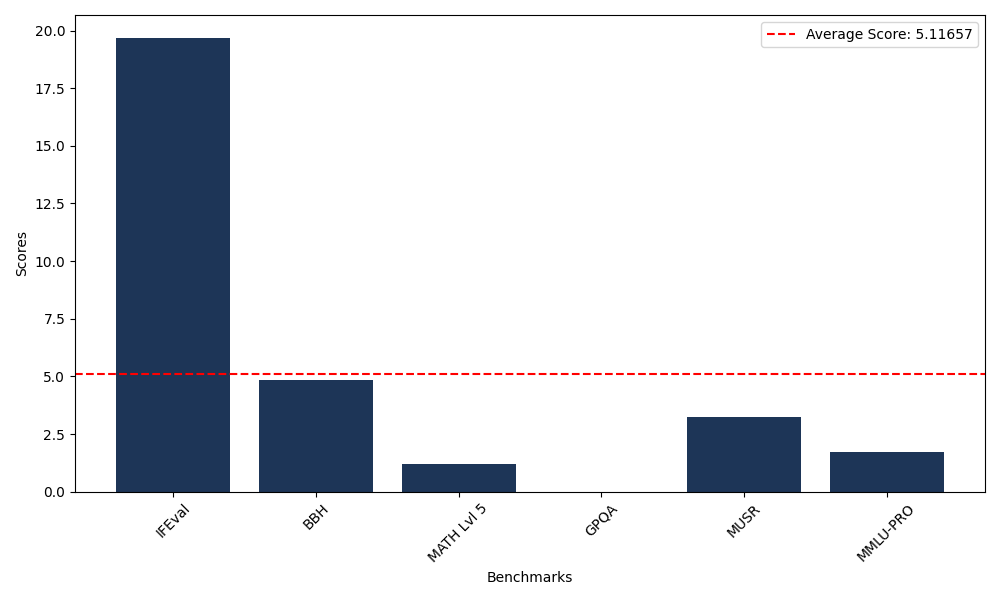

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 19.69 |
| BIG-Bench Hard (BBH) | 4.82 |
| MATH Level 5 (MATH Lvl 5) | 1.21 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 3.25 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 1.73 |
