Falcon2 11B - Model Details

Falcon2 11B is a large language model with 11B parameters, developed by the Technology Innovation Institute (TII). It is distributed under the Falcon 2 11B TII License (Falcon-2-11B-TII-License-10) and is designed for multilingual use, with vision-to-language support.

Description of Falcon2 11B

Falcon2-11B is an 11B-parameter causal decoder-only model developed by the Technology Innovation Institute. It was trained on over 5,000B tokens of RefinedWeb data enhanced with curated corpora and is intended for research and specialized applications. The model is available under the TII Falcon License 2.0, which includes an acceptable use policy to support responsible AI development and deployment.

Parameters & Context Length of Falcon2 11B

With 11B parameters, Falcon2-11B sits in the mid-scale category: large enough for moderately complex tasks while remaining cheaper to run than much larger models. Its 8K context length is moderate, so it can process longer inputs than short-context models but is not suited to extremely long sequences. Together, the parameter count and context length make the model a practical choice for research and specialized applications.

- Name: Falcon2-11B

- Parameter Size: 11B

- Context Length: 8K

- Implications: Mid-scale performance for moderate tasks, moderate context handling.
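The context-length figure above translates into a simple token budget: the prompt and the generated text together have to fit in the window. The sketch below illustrates that arithmetic only. It assumes that "8K" means 8 × 1024 = 8192 tokens, and the helper names `max_new_tokens` and `fits_in_context` are hypothetical, not part of any Falcon tooling.

```python
# Assumption: "8K" is read as 8 * 1024 tokens; check the model config for the exact value.
CONTEXT_LENGTH = 8 * 1024


def max_new_tokens(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens remain for generation after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt longer than the window leaves no room at all.
    return max(context_length - prompt_tokens, 0)


def fits_in_context(prompt_tokens: int, requested_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Check whether prompt plus requested output fits in the window."""
    return prompt_tokens + requested_new_tokens <= context_length


if __name__ == "__main__":
    print(max_new_tokens(6000))         # room left after a 6000-token prompt
    print(fits_in_context(8000, 500))   # prompt plus output would overflow
```

Token counts depend on the tokenizer, so in practice the prompt should be tokenized with the model's own tokenizer before applying a check like this.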

Possible Intended Uses of Falcon2 11B

Falcon2-11B is a multilingual large language model with 11B parameters that supports German, Swedish, English, Spanish, Portuguese, Polish, Dutch, Czech, French, Italian, and Romanian. Possible uses include research on large language models, fine-tuning for tasks such as summarization and text generation, and the development of chatbots and conversational AI systems. These uses could benefit from the model's multilingual coverage and parameter scale, but their feasibility and effectiveness still need to be assessed for each specific scenario.

- research on large language models

- fine-tuning for specific tasks like summarization and text generation

- development of chatbots and conversational AI systems

Possible Applications of Falcon2 11B

Given its 11B parameters and its support for German, Swedish, English, Spanish, Portuguese, Polish, Dutch, Czech, French, Italian, and Romanian, Falcon2-11B could be applied to research on language model capabilities, adaptation to specialized tasks such as text summarization or content generation, development of multilingual chatbots, and exploration of cross-lingual understanding. Each of these applications requires thorough evaluation against the specific goals and constraints of the project.

- research on large language models

- fine-tuning for specific tasks like summarization and text generation

- development of chatbots and conversational AI systems

- exploration of cross-lingual understanding

Quantized Versions & Hardware Requirements of Falcon2 11B

The q4 quantization of Falcon2-11B is listed as requiring a GPU with at least 20GB of VRAM (for example, an RTX 3090), with 16GB to 32GB of VRAM recommended for optimal performance, which places it on mid-range to high-end graphics cards. The q4 version is a middle option that balances precision and efficiency, but users should verify hardware compatibility before deployment.

Available versions: fp16, q2, q3, q4, q5, q6, q8
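To compare the listed versions, a back-of-the-envelope estimate of weight memory is parameters × bits per weight ÷ 8. The sketch below applies that formula to the 11B parameter count. The bits-per-weight values are nominal assumptions read from the version names; real quantized formats add per-block overhead, and the estimate covers the weights only, not the KV cache, activations, or runtime buffers, so actual VRAM requirements will be higher than these figures.

```python
PARAMS = 11e9  # 11B parameters, as stated in the model description

# Assumption: nominal bits per weight inferred from the version names.
BITS_PER_WEIGHT = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Return the memory for the weights alone, in GB (10^9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: ~{weight_memory_gb(PARAMS, bits):.1f} GB for weights")
```

Under these assumptions, fp16 weights alone come to roughly 22 GB and q4 weights to roughly 5.5 GB, which gives a lower bound to check against the VRAM figures quoted above.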

Conclusion

Falcon2-11B is an 11B-parameter large language model developed by the Technology Innovation Institute and available under the TII Falcon License 2.0. It supports multiple languages, including German, Swedish, and English, and is designed for research, fine-tuning, and multilingual applications. Its potential uses require further evaluation for specific scenarios.

References

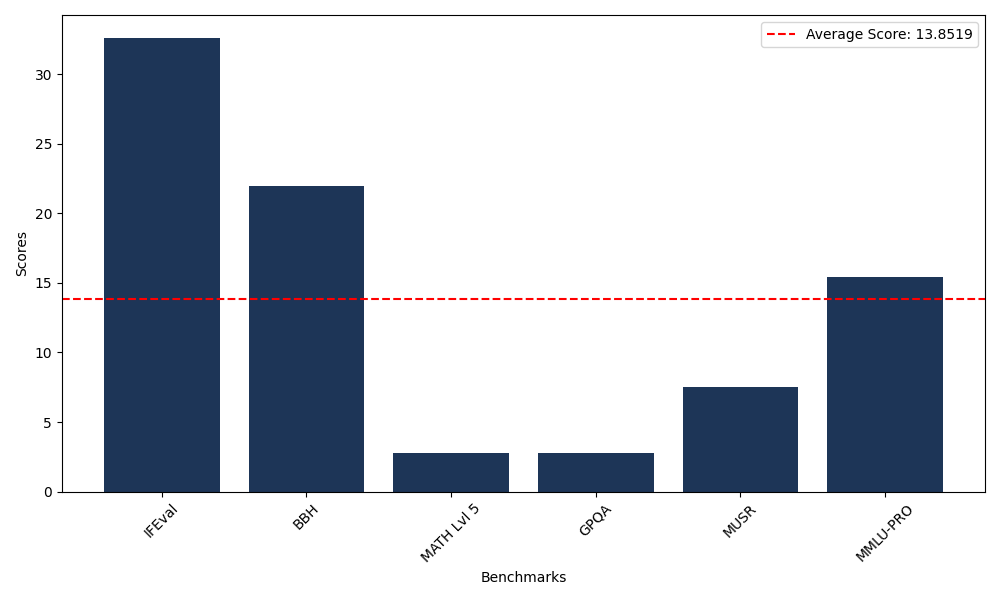

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 32.61 |
| BIG-Bench Hard (BBH) | 21.94 |
| MATH Level 5 (MATH Lvl 5) | 2.79 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.80 |
| Multistep Soft Reasoning (MuSR) | 7.53 |
| MMLU-Pro (Massive Multitask Language Understanding, Pro) | 15.44 |
