Falcon3 10B Instruct - Model Details

Falcon3 10B Instruct is a large language model with 10 billion parameters, developed by the Technology Innovation Institute (TII). It is released under the Falcon 3 TII Falcon License (Falcon-3-TII). It is a decoder-only model designed with a focus on science, math, and code capabilities, and it is tuned for instruction following, which makes it suitable for complex problem-solving and technical applications.

Description of Falcon3 10B Instruct

Falcon3 is a family of open foundation models offering pretrained and instruct LLMs with parameter sizes ranging from 1B to 10B. The family is reported to achieve state-of-the-art results, at the time of release, on reasoning, language understanding, instruction following, code, and mathematics tasks. Falcon3 10B Instruct supports four languages (English, French, Spanish, and Portuguese) and a context length of up to 32K tokens. Developed by the Technology Innovation Institute, it is designed for versatility in technical and complex problem-solving scenarios.

Parameters & Context Length of Falcon3 10B Instruct

Falcon3 10B Instruct has 10 billion parameters, which places it in the mid-scale range: large enough for moderately complex tasks while remaining cheaper to run than larger models. Its context length of up to 32K tokens lets it process long inputs, such as extended documents that call for detailed analysis, although longer contexts increase memory use and compute cost. This combination suits technical domains while leaving room for a range of other applications.

- Name: Falcon3 10B Instruct

- Parameter Size: 10B

- Context Length: 32K tokens

- Implications: Mid-scale performance for moderate tasks, long context for extended texts, resource-intensive but versatile.
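
The context limit above can be treated as a token budget shared by the prompt and the generated reply. The following is a minimal sketch of that bookkeeping, assuming the 32,000-token figure quoted in this section; the helper names `remaining_generation_budget` and `split_into_chunks` are hypothetical, and token counts would come from whichever tokenizer is actually used with the model.

```python
from typing import List, Sequence

# Context length in tokens, taken from the figure quoted in this section.
CONTEXT_LENGTH = 32_000


def remaining_generation_budget(prompt_tokens: int,
                                context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many tokens remain for the reply once the prompt is counted."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_length - prompt_tokens, 0)


def split_into_chunks(token_ids: Sequence[int],
                      max_chunk_tokens: int) -> List[List[int]]:
    """Split a token sequence into consecutive chunks of at most max_chunk_tokens."""
    if max_chunk_tokens <= 0:
        raise ValueError("max_chunk_tokens must be positive")
    return [list(token_ids[i:i + max_chunk_tokens])
            for i in range(0, len(token_ids), max_chunk_tokens)]


if __name__ == "__main__":
    # A 30,000-token prompt leaves 2,000 tokens for the reply.
    print(remaining_generation_budget(30_000))  # 2000
    # A prompt longer than the window leaves nothing; it would need chunking.
    print(remaining_generation_budget(40_000))  # 0
```

A document that exceeds the window could be split with `split_into_chunks` and processed piece by piece, at the cost of the model not seeing the whole text at once.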

Possible Intended Uses of Falcon3 10B Instruct

Falcon3 10B Instruct could be applied to text generation and summarization, where its multilingual support could help with content creation in English, French, Spanish, and Portuguese. It may also assist with code generation and debugging in software development workflows, and it could be explored for multilingual customer support. These uses are possibilities rather than validated results: each one requires thorough testing against the specific requirements before the model is relied on for it.

- text generation and summarization

- code generation and debugging

- multilingual customer support
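
As a hedged illustration of the multilingual uses listed above, the sketch below builds a chat-style message list for a summarization request in one of the four supported languages. The role/content message layout, the `build_summary_messages` helper, and the wording of the prompts are assumptions made for this example, not part of the model's documentation; the messages would still need to be sent through whatever chat interface hosts the model.

```python
from typing import Dict, List

# The four languages named in this document, keyed by ISO 639-1 code.
SUPPORTED_LANGUAGES = {
    "en": "English",
    "fr": "French",
    "es": "Spanish",
    "pt": "Portuguese",
}


def build_summary_messages(text: str, language: str = "en") -> List[Dict[str, str]]:
    """Build a chat-style message list asking for a summary in the given language.

    The system/user message layout is an assumption for illustration.
    """
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language code: {language!r}")
    name = SUPPORTED_LANGUAGES[language]
    return [
        {"role": "system", "content": f"You are a helpful assistant. Reply in {name}."},
        {"role": "user", "content": f"Summarize the following text in {name}:\n\n{text}"},
    ]


if __name__ == "__main__":
    for message in build_summary_messages("Falcon3 est une famille de modèles.", "fr"):
        print(message["role"], "->", message["content"])
```

Rejecting unlisted language codes up front keeps requests within the languages the model is stated to support.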

Quantized Versions & Hardware Requirements of Falcon3 10B Instruct

The medium q4 quantization of Falcon3 10B Instruct calls for a GPU with roughly 16 GB to 24 GB of VRAM, which puts it within reach of mid-range hardware; system memory and cooling should also be taken into account. Quantization trades some numerical precision for lower memory use, so this version suits deployments with moderate resources where reduced precision is acceptable. Test the chosen quantization on the target hardware to confirm compatibility and output quality.

- Available versions: fp16, q4, q8
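
A rough way to compare these precisions is to estimate the memory taken by the weights alone: parameter count times bits per weight, divided by eight. The sketch below does that arithmetic for 10 billion parameters. The nominal bit widths (16, 8, 4) and the `weight_memory_gb` helper are assumptions for illustration; real quantization formats store extra scaling data, and the KV cache, activations, and runtime overhead come on top, so practical VRAM requirements are higher than these figures.

```python
# Nominal bits per weight for each precision (an assumption; real formats
# such as block-quantized q4 carry additional per-block metadata).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    if precision not in BITS_PER_WEIGHT:
        raise ValueError(f"unknown precision: {precision!r}")
    bytes_total = num_params * BITS_PER_WEIGHT[precision] / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for precision in ("fp16", "q8", "q4"):
        print(f"{precision}: {weight_memory_gb(10e9, precision):.1f} GB")
    # fp16: 20.0 GB
    # q8: 10.0 GB
    # q4: 5.0 GB
```

These are lower bounds for the weights only and should not be read as full hardware requirements.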

Conclusion

Falcon3 10B Instruct is a large language model developed by the Technology Innovation Institute. It has 10 billion parameters, is released under the Falcon-3-TII license, and supports a context length of up to 32K tokens. It focuses on science, math, and code capabilities and supports four languages, making it suitable for technical and multilingual applications.

References

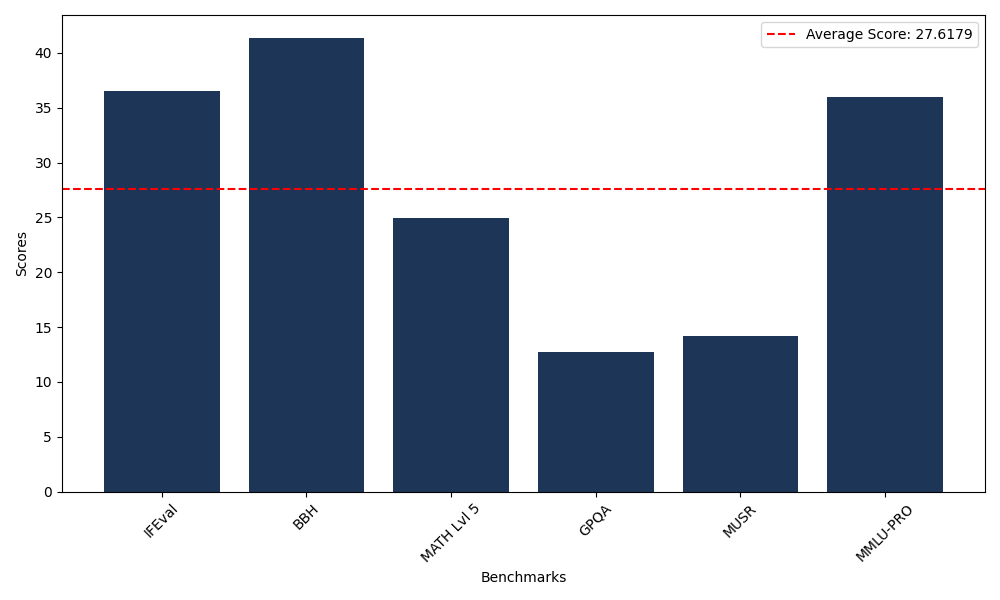

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 36.48 |
| BIG-Bench Hard (BBH) | 41.38 |
| MATH Level 5 (MATH Lvl 5) | 24.92 |
| Graduate-Level Google-Proof Q&A (GPQA) | 12.75 |
| Multistep Soft Reasoning (MuSR) | 14.17 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 36.00 |
