Falcon3 1B Instruct - Model Details

Falcon3 1B Instruct is a large language model developed by the Technology Innovation Institute (TII), with 1B parameters. It is released under the Falcon 3 TII Falcon License (Falcon-3-TII). The Falcon3 family is designed to strengthen science, math, and code capabilities in decoder-only models of up to 10 billion parameters.

Description of Falcon3 1B Instruct

Falcon3 1B Instruct is the instruction-tuned member of the Falcon3 family of pretrained and instruct large language models (LLMs), which are available in sizes from 1B to 10B parameters. Its base model, Falcon3-1B-Base, is a pruned and optimized version of a larger 3B Falcon3 model, trained on 80 gigatokens of diverse data including web content, code, STEM material, and multilingual text. The model uses a transformer-based, causal decoder-only architecture with 18 decoder blocks, Grouped Query Attention (GQA), and a 4K context length. It targets reasoning, language understanding, instruction following, code generation, and mathematics tasks, which makes it a versatile option for specialized applications.
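To make the GQA point concrete, the sketch below estimates the key/value cache that a single 4K-token sequence needs and shows how query heads share KV heads. Only the 18 decoder blocks and the 4K context come from the description above; the head counts (8 query heads, 4 KV heads), the head dimension of 256, and fp16 cache storage are illustrative assumptions, not confirmed figures for this checkpoint.

```python
# Hypothetical configuration: only N_LAYERS and CONTEXT_TOKENS come from the
# model description; head counts and HEAD_DIM are illustrative assumptions.
N_LAYERS = 18
CONTEXT_TOKENS = 4096
N_QUERY_HEADS = 8      # assumed
N_KV_HEADS = 4         # assumed (GQA: fewer KV heads than query heads)
HEAD_DIM = 256         # assumed
BYTES_PER_VALUE = 2    # fp16 cache entries


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value):
    """Size of the key/value cache for one sequence.

    Each layer stores one key and one value vector per KV head per token,
    hence the leading factor of 2.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def kv_head_for_query_head(q_head, n_query_heads, n_kv_heads):
    """In GQA, consecutive groups of query heads share a single KV head."""
    group_size = n_query_heads // n_kv_heads
    return q_head // group_size


# GQA caches N_KV_HEADS heads; standard multi-head attention would cache one per query head.
gqa = kv_cache_bytes(N_LAYERS, N_KV_HEADS, HEAD_DIM, CONTEXT_TOKENS, BYTES_PER_VALUE)
mha = kv_cache_bytes(N_LAYERS, N_QUERY_HEADS, HEAD_DIM, CONTEXT_TOKENS, BYTES_PER_VALUE)
mapping = [kv_head_for_query_head(h, N_QUERY_HEADS, N_KV_HEADS) for h in range(N_QUERY_HEADS)]

print(f"GQA KV cache: {gqa / 2**20:.0f} MiB")
print(f"MHA KV cache: {mha / 2**20:.0f} MiB")
print(mapping)
```

Under these assumptions, letting each KV head serve two query heads halves the cache relative to standard multi-head attention (288 MiB versus 576 MiB), which is the main memory saving GQA is meant to provide.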

Parameters & Context Length of Falcon3 1B Instruct

With 1B parameters, Falcon3 1B Instruct falls into the small-model category (up to 7B parameters), which favors fast, resource-efficient inference and suits simpler tasks. Its 4K-token context length places it among short-context models (up to 4K tokens): it handles short inputs well but cannot process long documents in a single pass.

- Parameter size: 1B

- Context length: 4K
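Because the context window is only 4K tokens, longer inputs have to be split before they are sent to the model. The sketch below is a hypothetical helper (`split_into_windows` is not part of any library) that works on an already-tokenized list of IDs; the 512-token generation budget and 128-token overlap are arbitrary example values.

```python
from typing import List

CONTEXT_LENGTH = 4096  # 4K context window, per the figures above


def split_into_windows(token_ids: List[int], max_new_tokens: int = 512,
                       overlap: int = 128,
                       context_length: int = CONTEXT_LENGTH) -> List[List[int]]:
    """Hypothetical helper: split a token sequence into chunks that fit the window.

    Each chunk holds at most (context_length - max_new_tokens) prompt tokens,
    leaving room for generation; consecutive chunks share `overlap` tokens so
    text cut at a boundary keeps some surrounding context.
    """
    budget = context_length - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens must be smaller than the context length")
    if not 0 <= overlap < budget:
        raise ValueError("overlap must be in [0, budget)")
    if not token_ids:
        return []
    step = budget - overlap
    windows = []
    start = 0
    while True:
        windows.append(token_ids[start:start + budget])
        if start + budget >= len(token_ids):
            break  # the last window reaches the end of the input
        start += step
    return windows


# Example: a 10,000-token input (token IDs stand in for real tokenizer output).
windows = split_into_windows(list(range(10_000)))
print([len(w) for w in windows])
```

With a 10,000-token input this yields three windows of 3,584, 3,584, and 3,088 tokens. Reserving `max_new_tokens` inside the budget matters because, in a decoder-only model, prompt and generated tokens share the same 4K window.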

Possible Intended Uses of Falcon3 1B Instruct

Falcon3 1B Instruct may be useful for general language understanding and generation, such as drafting text, answering questions, or summarizing content. It may also support code generation and mathematical problem solving, such as writing scripts or solving equations. Its multilingual support for English, French, Spanish, and Portuguese could enable translation or content creation across these languages. These uses still need further evaluation to confirm the model's effectiveness and suitability for specific scenarios.

- general language understanding and generation

- code generation and mathematical problem solving

- multilingual tasks in English, French, Spanish, and Portuguese

Possible Applications of Falcon3 1B Instruct

Falcon3 1B Instruct could be applied to general language tasks such as drafting text or answering queries, though its effectiveness in these areas requires further testing. It might support code generation and mathematical problem solving, but its limits in complexity and accuracy need investigation. Its multilingual support for English, French, Spanish, and Portuguese could enable cross-language content creation, although performance may vary across languages. It could also be useful in educational or creative workflows, but its suitability for specific tasks must be validated. Each application should be thoroughly evaluated and tested before use.

- general language understanding and generation

- code generation and mathematical problem solving

- multilingual tasks in English, French, Spanish, and Portuguese

- educational or creative workflows

Quantized Versions & Hardware Requirements of Falcon3 1B Instruct

The medium q4 version of Falcon3 1B Instruct balances precision and performance; deployment requires a GPU with at least 8GB VRAM or a multi-core CPU. This makes it suitable for systems with moderate hardware, though exact requirements vary with workload. The model is available in fp16 and in q8 and q4 quantized versions.
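A rough way to see why these versions differ in hardware needs is to compute how much memory the weights alone occupy at each precision. This sketch assumes a nominal 1B parameters and idealized bit widths; real quantized formats also store per-block scale factors, and inference additionally needs memory for the KV cache, activations, and runtime overhead.

```python
# Nominal parameter count (assumption): the exact count of the released
# checkpoint may differ, so treat the results as rough lower bounds.
NOMINAL_PARAMS = 1_000_000_000

BITS_PER_WEIGHT = {
    "fp16": 16,
    "q8": 8,
    "q4": 4,  # idealized; real 4-bit formats add per-block scale metadata
}


def weight_memory_gib(n_params: float, bits: float) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return n_params * bits / 8 / 2**30


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_memory_gib(NOMINAL_PARAMS, bits):.2f} GiB")
```

The weights come to roughly 1.86 GiB at fp16, 0.93 GiB at q8, and 0.47 GiB at q4, so a stated requirement such as 8GB VRAM leaves considerable headroom beyond the weights themselves.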

Conclusion

Falcon3 1B Instruct is a large language model developed by the Technology Innovation Institute, with 1B parameters, released under the Falcon 3 TII Falcon License. It is designed for science, math, and code tasks, using a transformer-based decoder-only architecture trained on diverse data including web, code, and multilingual content.

References

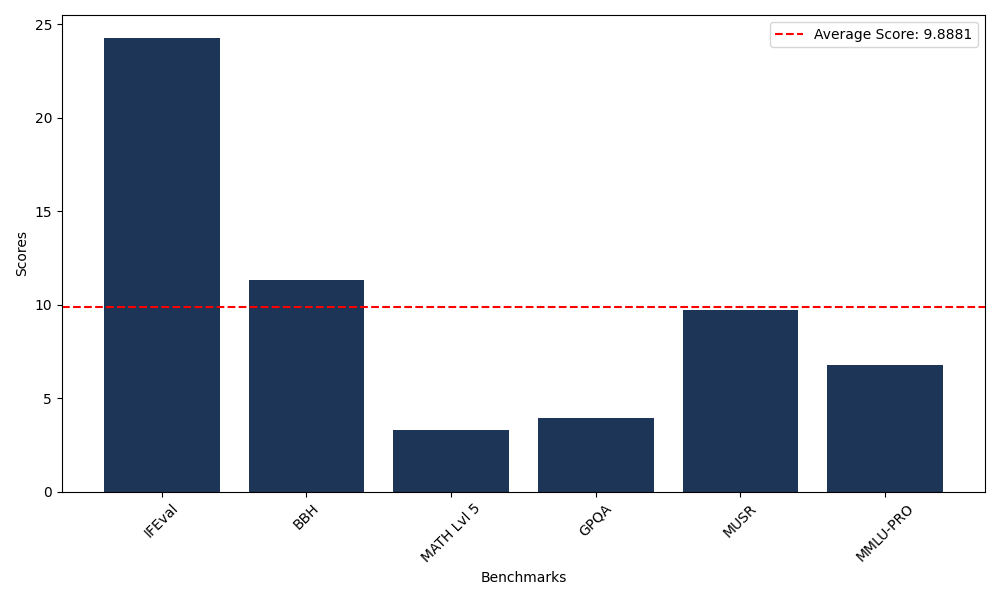

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 24.28 |
| Big-Bench Hard (BBH) | 11.34 |
| MATH, Level 5 subset (MATH Lvl 5) | 3.32 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.91 |
| Multistep Soft Reasoning (MuSR) | 9.71 |
| Massive Multitask Language Understanding, Professional (MMLU-Pro) | 6.76 |
