Falcon3 7B Instruct - Model Details

Falcon3 7B Instruct is a large language model developed by the Technology Innovation Institute (TII), a research institute based in Abu Dhabi. With 7B parameters, this decoder-only model is designed to strengthen capabilities in science, math, and code. It is released under the Falcon 3 TII Falcon License (Falcon-3-TII), which aims to keep the model accessible while setting guidelines for responsible use. Its focus on these domains makes it well suited to technical and analytical tasks.

Description of Falcon3 7B Instruct

Falcon3-7B-Base is part of the Falcon3 family of open foundation models, which includes pretrained and instruct LLMs ranging from 1B to 10B parameters. At the time of release, it achieved state-of-the-art results on reasoning, language understanding, instruction following, code, and mathematics tasks. It supports four languages (English, French, Spanish, and Portuguese) and a context length of up to 32K tokens, which suits long documents and extended interactions. However, it is a raw pretrained model that should be further fine-tuned (for example, with supervised fine-tuning or RLHF) for most practical use cases.

Parameters & Context Length of Falcon3 7B Instruct

Falcon3-7B-Base has 7B parameters, placing it in the small-to-mid-scale range of open-source LLMs and allowing fast, efficient inference for tasks that do not demand extreme complexity. Its 32K-token context window lets it keep long texts or conversations in view within a single prompt, which helps with detailed analysis of long documents; filling that window, however, substantially increases memory use and compute. The model balances accessibility with capability, handling diverse applications while remaining manageable for many users.

- Parameter Size: 7B

- Context Length: 32K
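To make the memory cost of a long context concrete, the sketch below estimates the key/value (KV) cache for a given number of tokens. It assumes architecture values reported on the Falcon3-7B model card (28 decoder layers, 4 key/value heads under grouped-query attention, head dimension 256), an exact window of 32,768 tokens for "32K", and 16-bit cache entries; all of these are assumptions to check against the model's actual configuration, and runtimes may store the cache at other precisions.

```python
# Rough KV-cache size estimate for a decoder-only transformer.
# ASSUMPTION: the config values below come from the Falcon3-7B model card
# and should be checked against the model's actual config.json.
NUM_LAYERS = 28        # decoder blocks (assumed)
NUM_KV_HEADS = 4       # key/value heads under grouped-query attention (assumed)
HEAD_DIM = 256         # per-head dimension (assumed)
MAX_CONTEXT = 32_768   # "32K" tokens (assumed exact value)


def kv_cache_bytes(seq_len: int, bytes_per_value: int = 2, batch_size: int = 1) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens.

    Every layer stores one key vector and one value vector per KV head per
    token, hence the leading factor of 2. bytes_per_value=2 means fp16/bf16.
    """
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * seq_len * bytes_per_value * batch_size


if __name__ == "__main__":
    for tokens in (4_096, 16_384, MAX_CONTEXT):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>6} tokens -> {gib:.2f} GiB of 16-bit KV cache")
```

Under these assumptions each token costs 112 KiB of cache, so a full 32K window needs about 3.5 GiB on top of the model weights, and the figure grows linearly with sequence length and batch size.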

Possible Intended Uses of Falcon3 7B Instruct

Falcon3-7B-Base is a versatile model with 7B parameters and a 32K context length, designed for reasoning, language understanding, instruction following, code generation, and mathematics. Its support for English, French, Spanish, and Portuguese makes it a candidate for cross-lingual applications. Possible uses include assisting with complex problem-solving, generating code snippets, analyzing text, logical deduction, and translation between its supported languages, but each would require thorough testing against specific goals. Educational uses, such as reasoning exercises or mathematical problem-solving, remain speculative and need validation. The model may also help with creative writing or technical documentation, though results will depend on its training data and the requirements of the task.

- reasoning tasks

- language understanding

- instruction following

- code generation

- mathematics tasks

Possible Applications of Falcon3 7B Instruct

With 7B parameters and a 32K context length, Falcon3-7B-Base could support applications such as educational tools, multilingual content creation, code assistance, and logical reasoning tasks. Its support for English, French, Spanish, and Portuguese suggests it could serve cross-lingual projects or collaborative workflows. It might also generate structured documentation, analyze textual patterns, or aid problem-solving exercises, and its instruction-following and mathematical reasoning abilities may add further value. Each application must be thoroughly evaluated and tested before deployment to confirm that it meets specific requirements.

Quantized Versions & Hardware Requirements of Falcon3 7B Instruct

The medium q4 version of Falcon3-7B-Base requires a GPU with at least 16GB of VRAM and 32GB of system memory to run efficiently. This quantization balances precision against memory use and speed, allowing deployment on standard consumer-grade hardware. Requirements vary with the application and runtime, so users should verify compatibility with their own setup.

- fp16, q4, q8
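As a rough cross-check on the hardware figures above, weight memory scales with the number of bits stored per parameter. The sketch below assumes a round 7 billion parameters and nominal widths of 16, 8, and 4 bits for fp16, q8, and q4; real quantized formats typically add per-block scale factors and may keep some tensors at higher precision, so actual files are somewhat larger, and the KV cache and runtime buffers come on top.

```python
# Back-of-the-envelope weight memory for the listed precisions.
# ASSUMPTIONS: a round 7e9 parameters and nominal bit widths; real quantized
# formats add per-block scales and metadata, so files are somewhat larger.
PARAMS = 7_000_000_000

NOMINAL_BITS = {
    "fp16": 16,
    "q8": 8,
    "q4": 4,
}


def weight_gib(params: int, bits_per_param: float) -> float:
    """Memory for the weights alone, in GiB (no KV cache or runtime overhead)."""
    return params * bits_per_param / 8 / 2**30


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gib(PARAMS, bits):.1f} GiB of weights")
```

On these assumptions the q4 weights need roughly a quarter of the fp16 footprint, which leaves headroom within 16GB of VRAM for the KV cache at longer context lengths.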

Conclusion

Falcon3-7B-Base is a 7B-parameter model with a 32K context length, part of the Falcon3 family, designed for reasoning, language understanding, instruction following, code generation, and mathematics tasks. It supports English, French, Spanish, and Portuguese, making it a versatile tool for multilingual applications, though its effectiveness in specific use cases requires thorough evaluation.

References

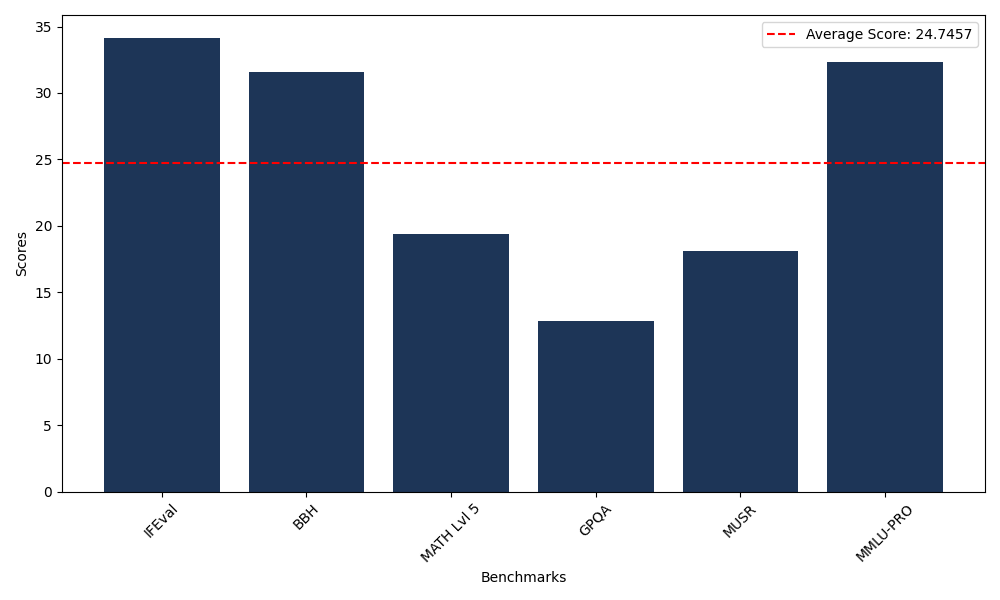

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 34.16 |
| Big-Bench Hard (BBH) | 31.56 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 19.41 |
| Graduate-Level Google-Proof Q&A (GPQA) | 12.86 |
| Multistep Soft Reasoning (MUSR) | 18.14 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 32.34 |
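One simple way to summarize the six scores above is an unweighted mean, which treats every benchmark as equally important; that weighting is a choice made for this sketch, not something the table itself specifies. The snippet averages the values exactly as listed and reports the strongest and weakest benchmarks.

```python
# Unweighted summary of the benchmark scores listed in the table above.
SCORES = {
    "IFEval": 34.16,
    "BBH": 31.56,
    "MATH Lvl 5": 19.41,
    "GPQA": 12.86,
    "MUSR": 18.14,
    "MMLU-PRO": 32.34,
}


def summarize(scores: dict[str, float]) -> tuple[float, str, str]:
    """Return (unweighted mean, best benchmark name, worst benchmark name)."""
    average = sum(scores.values()) / len(scores)
    best = max(scores, key=scores.get)
    worst = min(scores, key=scores.get)
    return average, best, worst


if __name__ == "__main__":
    average, best, worst = summarize(SCORES)
    print(f"Average over {len(SCORES)} benchmarks: {average:.2f}")
    print(f"Highest: {best} ({SCORES[best]}), lowest: {worst} ({SCORES[worst]})")
```

A single average hides the spread visible in the table, so it is best read alongside the per-benchmark scores rather than in place of them.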
