Gemma 2B - Model Details

Gemma 2B is a large language model developed by Google with 2B parameters. It is released under the Gemma Terms of Use (Gemma-Terms-of-Use) license and was designed with a strong emphasis on safety and responsible AI.

Description of Gemma 2B

Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models. These are text-to-text, decoder-only large language models for English, released with open weights in both pre-trained and instruction-tuned variants. They suit tasks such as question answering, summarization, and reasoning, which makes them versatile for a wide range of text generation needs. Their relatively small size allows deployment in resource-constrained environments such as laptops, desktops, or personal cloud infrastructure, which helps democratize access to cutting-edge AI and fosters broader innovation.

Parameters & Context Length of Gemma 2B

Gemma 2B is a 2B-parameter model with an 8k-token context window, which places it in the small to mid-scale range. This size allows efficient inference on resource-constrained devices while still handling moderate-length tasks. The 8k context window accommodates extended inputs, but very long documents have to be split or truncated to fit. Together, these characteristics make Gemma 2B suitable for applications that need a balance between capability and accessibility.

- Parameter size: 2B

- Context length: 8k
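Because the prompt and the generated text share the same window, a generation request has to be clamped so that the two together fit. The minimal Python sketch below shows that bookkeeping, assuming "8k" means 8,192 tokens. `CONTEXT_LENGTH` and `max_new_tokens` are hypothetical names, and the token counts are assumed to come from the model's own tokenizer, not from word or character counts.

```python
# Assumption: "8k" is read as 8,192 tokens; adjust if your deployment differs.
CONTEXT_LENGTH = 8192


def max_new_tokens(prompt_tokens: int, requested: int,
                   context_length: int = CONTEXT_LENGTH) -> int:
    """Hypothetical helper: clamp the number of tokens to generate so that
    prompt + output fits inside the context window."""
    if prompt_tokens < 0 or requested < 0:
        raise ValueError("token counts must be non-negative")
    if prompt_tokens >= context_length:
        raise ValueError(
            f"a {prompt_tokens}-token prompt leaves no room in a "
            f"{context_length}-token window; split or truncate the input"
        )
    # Whatever the prompt does not use is the most that can be generated.
    return min(requested, context_length - prompt_tokens)


print(max_new_tokens(prompt_tokens=8000, requested=512))
print(max_new_tokens(prompt_tokens=1000, requested=512))
```

For example, an 8,000-token prompt leaves room for at most 192 new tokens. Inputs longer than the window have to be split into chunks, for instance by summarizing them piece by piece.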

Possible Intended Uses of Gemma 2B

Gemma 2B could be used for a range of applications. In content creation and communication, it might generate creative formats such as poems, scripts, or marketing copy. It could also power chatbots and conversational AI for customer service or interactive tools. Text summarization is another possible use, condensing long documents or research papers. In research and education, it could serve as a platform for natural language processing (NLP) research, supporting experimentation with new techniques and algorithm development. Language learning tools might use it for grammar correction or writing practice, and knowledge exploration could involve generating summaries of, or answering questions about, large text collections. Each of these uses should be evaluated before the model is relied on for a specific task.

- Content creation and communication
  - Text generation: creative formats such as poems, scripts, code, marketing copy, and email drafts
  - Chatbots and conversational AI: power conversational interfaces for customer service, virtual assistants, or interactive applications
  - Text summarization: generate concise summaries of a text corpus, research papers, or reports

- Research and education
  - Natural language processing (NLP) research: a foundation for experimenting with NLP techniques, developing algorithms, and advancing the field
  - Language learning tools: support interactive language-learning experiences, aiding grammar correction or providing writing practice
  - Knowledge exploration: assist researchers in exploring large bodies of text by generating summaries or answering questions about specific topics
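With the instruction-tuned variants, chat, summarization, and question-answering requests are all expressed as conversation turns. The sketch below assumes the turn markup published for instruction-tuned Gemma (`<start_of_turn>` and `<end_of_turn>`, with the roles `user` and `model`). `build_gemma_prompt` and `summarization_prompt` are hypothetical helpers; in practice, the tokenizer's own chat template should be treated as authoritative. The beginning-of-sequence token is omitted on the assumption that the tokenizer adds it.

```python
from typing import List, Tuple


def build_gemma_prompt(turns: List[Tuple[str, str]]) -> str:
    """Hypothetical helper: format alternating user/model turns in the
    assumed instruction-tuned Gemma layout and open a model turn."""
    if not turns or len(turns) % 2 == 0:
        raise ValueError("conversation must start and end with a user turn")
    parts = []
    for i, (role, text) in enumerate(turns):
        # Roles must alternate user, model, user, ...; there is no system role.
        expected = "user" if i % 2 == 0 else "model"
        if role != expected:
            raise ValueError(f"turn {i}: expected role {expected!r}, got {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text.strip()}<end_of_turn>\n")
    # An open model turn cues generation of the model's reply.
    parts.append("<start_of_turn>model\n")
    return "".join(parts)


def summarization_prompt(document: str, max_sentences: int = 3) -> str:
    """Hypothetical helper: wrap a summarization request in a single user turn."""
    instruction = (
        f"Summarize the following text in at most {max_sentences} sentences.\n\n"
        f"{document}"
    )
    return build_gemma_prompt([("user", instruction)])


print(summarization_prompt("Gemma is a family of lightweight open models from Google."))
```

Because this format has no separate system role, task instructions are folded into the first user turn. Multi-turn chatbot histories use the same helper, with earlier model replies passed back as `model` turns.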

Possible Applications of Gemma 2B

Gemma 2B could be applied to content creation and communication, such as drafting scripts or marketing copy; to chatbots and conversational AI for customer service or interactive tools; to text summarization of long documents or research papers; and to research and education as a platform for experimenting with natural language processing techniques. Each application requires careful evaluation against its specific needs and ethical considerations.

- Content creation and communication

- Chatbots and conversational AI

- Text summarization

- Research and education

Quantized Versions & Hardware Requirements of Gemma 2B

The medium q4 version of the model balances precision and performance. For models with up to 3B parameters, it requires roughly 8 GB to 16 GB of VRAM, which makes it suitable for devices with moderate GPU capabilities. Systems with at least 8 GB of VRAM can run it, though more VRAM is recommended for larger parameter counts. Because hardware requirements scale with model size, users should check their GPU's VRAM and system memory before choosing a version.

- fp16, q2, q3, q32, q4, q5, q6, q8
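A rough lower bound on memory is the storage for the weights alone: parameters × bits per weight ÷ 8 bytes. The sketch below applies that formula to the listed versions, assuming a nominal 2 × 10^9 parameters and nominal bit widths (fp16 = 16 bits, q4 = 4 bits, and so on). Real quantized files also store scaling data, and inference adds the KV cache, activations, and runtime overhead, so actual usage is higher than these figures. `q32` is left out because its bit width is not stated here, and `weight_memory_gib` is a hypothetical helper.

```python
# Assumption: nominal bits per weight; real formats add per-block scale data.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gib(num_params: float, quant: str) -> float:
    """Hypothetical helper: approximate memory for the weights alone,
    in GiB (2**30 bytes). Excludes KV cache, activations, and overhead."""
    bits = NOMINAL_BITS[quant]
    return num_params * bits / 8 / 2**30


# Assumption: a nominal 2e9 parameters; the exact count may differ somewhat.
for quant in NOMINAL_BITS:
    print(f"{quant:>4}: {weight_memory_gib(2e9, quant):.2f} GiB")
```

For 2 × 10^9 parameters, this gives about 3.73 GiB at fp16 and about 0.93 GiB at q4. The fourfold gap between the two follows directly from the ratio of their bit widths.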

Conclusion

Gemma 2B is a 2B-parameter large language model developed by Google, designed with a focus on safety and responsible AI and released under the Gemma Terms of Use (Gemma-Terms-of-Use) license. It is a text-to-text, decoder-only model, is available in several quantized versions to match different hardware, and is optimized for resource-efficient deployment in environments such as laptops or personal cloud infrastructure.

References

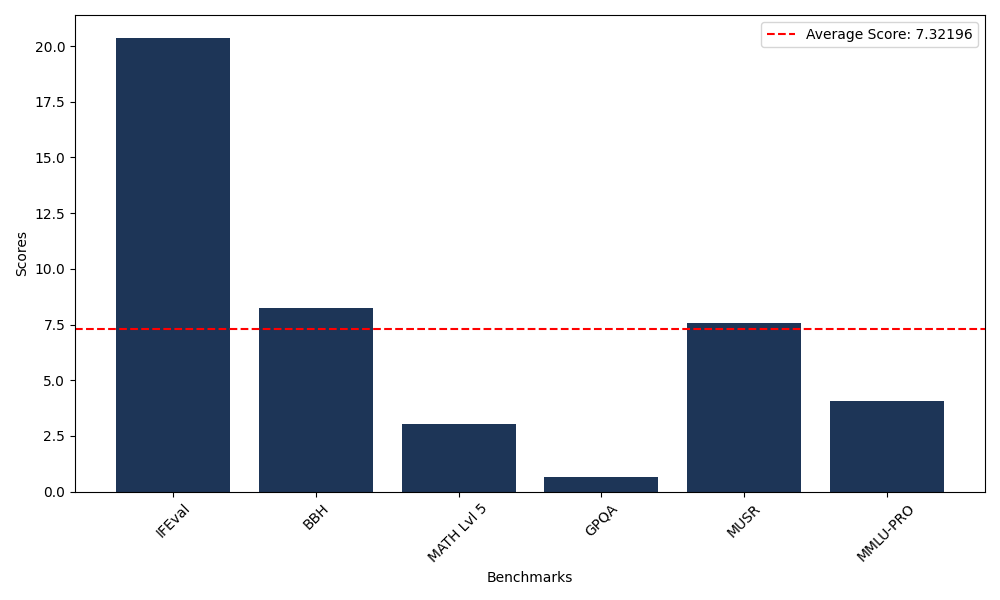

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 20.38 |
| BIG-Bench Hard (BBH) | 8.25 |
| MATH, Level 5 problems (MATH Lvl 5) | 3.02 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.67 |
| Multistep Soft Reasoning (MuSR) | 7.56 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 4.06 |
