Gemma2 9B - Model Details

Gemma2 9B is a large language model developed by Google, with 9 billion parameters, released under the Gemma Terms of Use. It targets strong language understanding at a size that remains practical to run, which makes it suitable for a wide range of applications. Its licensing terms emphasize responsible use and accessibility.

Description of Gemma2 9B

Gemma is a family of lightweight, state-of-the-art open models developed by Google, built from the same research and technology used to create the Gemini models. These text-to-text, decoder-only large language models are available in English, with open weights for both pre-trained and instruction-tuned variants. Gemma is well suited to tasks such as question answering, summarization, and reasoning, and its relatively small size allows deployment in environments with limited resources, such as laptops, desktops, or personal cloud infrastructure. This accessibility makes state-of-the-art language models available to a broader audience.

Parameters & Context Length of Gemma2 9B

Gemma2 9B is a mid-scale large language model with 9 billion parameters, which places it among models that offer balanced performance on moderately complex tasks while staying resource-efficient. Its 4k token context length is enough for short to moderately long texts but not for very long documents. Compared with larger models, the parameter count means faster inference and lower compute demands, so the model can be deployed on standard hardware. The context length supports tasks like summarization and question answering, but longer inputs may need to be split into chunks.

- Parameter Size: 9B

- Context Length: 4k
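
The chunking mentioned above can be sketched as follows. This is a minimal illustration, not the model's own tooling: the function name `chunk_text`, the 1,024 tokens reserved for the prompt and the reply, and the ratio of roughly 1.3 tokens per word are all assumptions. A real pipeline would count tokens with the model's tokenizer instead of estimating from words.

```python
def chunk_text(text, context_tokens=4096, reserved_tokens=1024, tokens_per_word=1.3):
    """Split text into word-based chunks that fit an approximate token budget.

    context_tokens:  context window of the model (4k for this document).
    reserved_tokens: assumed space kept free for instructions and the reply.
    tokens_per_word: assumed average; a rough proxy, not a tokenizer count.
    """
    budget = context_tokens - reserved_tokens
    if budget <= 0:
        raise ValueError("reserved_tokens must be smaller than context_tokens")

    # Convert the token budget into a word budget using the assumed ratio.
    words_per_chunk = max(1, int(budget / tokens_per_word))

    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


if __name__ == "__main__":
    document = " ".join(["word"] * 5000)
    chunks = chunk_text(document)
    print(f"{len(chunks)} chunks, first chunk has {len(chunks[0].split())} words")
```

Each chunk can then be summarized or queried separately and the partial results combined. Splitting on word boundaries is the simplest choice; splitting on paragraph or sentence boundaries usually preserves more context per chunk.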

Possible Intended Uses of Gemma2 9B

Gemma2 9B is a versatile large language model designed for text generation, natural language processing, and conversational tasks, with possible applications in content creation, chatbots, and research. Its 9B parameter size and 4k context length suit uses such as generating creative writing, assisting with text summarization, or supporting language learning tools. It could also be used to explore knowledge through dialogue or to improve communication workflows. Each of these uses should be evaluated against the specific need before adoption. The model’s design permits deployment where resource efficiency is critical, but the actual benefit depends on the context and the implementation.

- content creation and communication

- text generation

- chatbots and conversational AI

- text summarization

- natural language processing (NLP) research

- language learning tools

- knowledge exploration

Possible Applications of Gemma2 9B

With 9 billion parameters and a 4k token context length, Gemma2 9B is a candidate for applications such as content creation, chatbots, natural language processing research, and knowledge exploration. Concrete examples include generating creative writing, powering conversational AI systems, and analyzing text for academic or exploratory purposes. Language learning tools and summarization are further options, though each scenario needs validation against its specific goals. The model’s design permits deployment in resource-efficient settings, but the actual benefit depends on the context and the implementation.

- content creation and communication

- chatbots and conversational AI

- natural language processing (NLP) research

- knowledge exploration

Each application must be thoroughly evaluated and tested before use.

Quantized Versions & Hardware Requirements of Gemma2 9B

The q4 version of Gemma2 9B is a medium-precision quantization that balances precision and performance. It requires a GPU with at least 16GB of VRAM to run efficiently, which puts it within reach of systems with moderate hardware. Quantization reduces memory demands while keeping the model usable for tasks like text generation and conversational AI.

- fp16, q2, q3, q4, q5, q6, q8
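
To see how quantization reduces memory demands, the following sketch estimates the memory taken by the weights alone at each listed precision. The bit widths in `NOMINAL_BITS` are nominal assumptions: real quantized formats store extra scaling data, so their effective bits per weight are somewhat higher. The figures also exclude the KV cache, activations, and runtime overhead, so they are a lower bound and not a hardware requirement.

```python
# Nominal bits per weight for each listed version (an assumption for illustration;
# actual quantized files use slightly more bits per weight).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gib(num_params, bits_per_weight):
    """Return the approximate size of the weights in GiB (weights only)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    num_params = 9e9  # 9 billion parameters, as stated for Gemma2 9B
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{weight_memory_gib(num_params, bits):.1f} GiB")
```

The estimate scales linearly with bit width, so halving the precision roughly halves the weight footprint.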

Conclusion

Gemma2 9B is a mid-scale large language model with 9 billion parameters and a 4k token context length, offering a balance between performance and resource efficiency. It is designed for tasks like text generation and conversational AI, making it suitable for deployment in environments with limited computational resources.

References

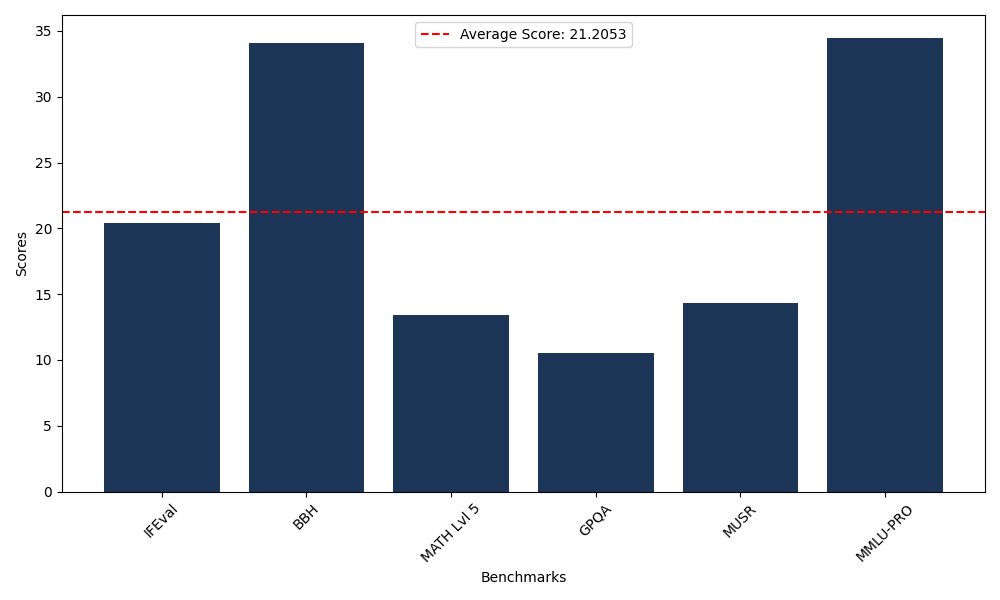

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 20.40 |
| BIG-Bench Hard (BBH) | 34.10 |
| MATH, Level 5 problems (MATH Lvl 5) | 13.44 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.51 |
| Multistep Soft Reasoning (MuSR) | 14.30 |
| Massive Multitask Language Understanding, Pro version (MMLU-Pro) | 34.48 |
