Granite3 Dense 8B Instruct - Model Details

Granite3 Dense 8B Instruct is an open-source large language model from IBM's Granite family. It has 8 billion parameters and is released under the Apache License 2.0, which permits both research and commercial use. It is the instruction-tuned variant of a base model trained on about 12 trillion tokens, and it targets efficient, general-purpose text tasks.

Description of Granite3 Dense 8B Instruct

The instruct model is fine-tuned from Granite-3.0-8B-Base, a decoder-only language model trained from scratch with a two-stage strategy. The first stage used 10 trillion tokens and the second used 2 trillion tokens. The data came from web, code, academic, book, and multilingual sources. The model is optimized for text-to-text generation tasks such as summarization, classification, extraction, and question answering. Its architecture is a dense transformer that uses grouped-query attention (GQA), rotary position embeddings (RoPE), and SwiGLU feed-forward layers. The model is available under the Apache 2.0 license and can be fine-tuned for languages beyond those in its initial training data.
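To illustrate one of the architectural components named above, here is a minimal pure-Python sketch of rotary position embeddings (RoPE). It assumes the common base of 10,000 and an interleaved pairing of dimensions. It is not taken from Granite's code; IBM's implementation may use a different base or pair dimension i with i + d/2 instead. The point is the key property: after rotation, the query-key dot product depends on the offset between two positions, not on their absolute values.

```python
import math

def apply_rope(vec, position, base=10000.0):
    """Rotate each pair (vec[2k], vec[2k+1]) by position * base**(-2k/d)."""
    if len(vec) % 2:
        raise ValueError("RoPE needs an even dimension")
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower frequency for later pairs
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # 2-D rotation of the pair
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]
# Same relative offset (2) at different absolute positions gives the same score.
print(dot(apply_rope(q, 5), apply_rope(k, 3)), dot(apply_rope(q, 12), apply_rope(k, 10)))
```

Because position enters only as a rotation angle, attention scores encode relative distance without a learned position table.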

Parameters & Context Length of Granite3 Dense 8B Instruct

With 8 billion parameters, Granite3 Dense 8B Instruct is a mid-scale model. It balances output quality against compute and memory cost, so it can handle moderately complex tasks without excessive computational demands. Its 4,096-token context window is short. That window must hold the prompt and the generated output together. This makes the model well suited to concise inputs and outputs, but long documents must be split into chunks or truncated before processing.

- Parameter Size: 8B

- Context Length: 4K (4,096 tokens)
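Because the 4,096-token limit above covers the prompt and the model's reply, long inputs have to be processed in windows. The sketch below splits a list of token IDs into overlapping chunks that leave room for instructions and output. It makes several assumptions:

- `chunk_tokens` is a hypothetical helper.
- The reservations of 256 prompt tokens and 512 output tokens, and the 128-token overlap, are illustrative defaults.
- Tokenization itself is not shown; in practice the IDs would come from the model's own tokenizer.

```python
def chunk_tokens(tokens, context_len=4096, prompt_overhead=256,
                 max_new_tokens=512, overlap=128):
    """Hypothetical helper: split tokens into windows that fit the context budget."""
    # Tokens left for document text after reserving space for instructions and output.
    budget = context_len - prompt_overhead - max_new_tokens
    if budget <= 0:
        raise ValueError("prompt and output reservations exceed the context window")
    if not 0 <= overlap < budget:
        raise ValueError("overlap must be non-negative and smaller than the chunk size")
    step = budget - overlap  # consecutive chunks share `overlap` tokens
    chunks = []
    start = 0
    while start < len(tokens):
        chunks.append(tokens[start:start + budget])
        if start + budget >= len(tokens):
            break
        start += step
    return chunks

# Stand-in for a 10,000-token document.
chunks = chunk_tokens(list(range(10_000)))
print(len(chunks), [len(c) for c in chunks])
```

With these defaults, each chunk holds at most 3,328 document tokens. The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of some repeated processing.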

Possible Intended Uses of Granite3 Dense 8B Instruct

Granite3 Dense 8B Instruct is a general-purpose model. Its likely uses include text summarization, text classification, information extraction, and question answering, and it can serve as a starting point for specialized models. The languages it supports include Japanese, English, Italian, Dutch, French, Korean, Chinese, Portuguese, Czech, Arabic, German, and Spanish, which makes it a candidate for cross-lingual tasks. These uses have not been validated for every context, so each should be tested on representative data before the model is relied on in a real-world deployment.

- text summarization

- text classification

- information extraction

- question-answering

- creation of specialized models for specific application scenarios
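For a task such as text classification, much of the practical work is in phrasing the instruction and mapping the model's free-form reply back to a fixed label set. The sketch below illustrates both steps under several assumptions:

- `build_classification_prompt` and `parse_label` are hypothetical helpers.
- The prompt wording is illustrative, not Granite's official chat template.
- The call to the model itself is omitted because it depends on the runtime in use.

```python
def build_classification_prompt(text, labels):
    """Hypothetical zero-shot classification instruction (wording is illustrative)."""
    options = ", ".join(labels)
    return (
        f"Classify the following text into exactly one of these categories: {options}.\n"
        "Answer with the category name only.\n\n"
        f"Text: {text}\nCategory:"
    )

def parse_label(reply, labels):
    """Map a free-form reply onto one allowed label; return None if unclear."""
    cleaned = reply.strip().strip(".").lower()
    exact = [label for label in labels if label.lower() == cleaned]
    if exact:
        return exact[0]
    # Fall back to substring matching, but only accept a single unambiguous hit.
    found = [label for label in labels if label.lower() in cleaned]
    return found[0] if len(found) == 1 else None

labels = ["billing", "technical", "other"]
print(build_classification_prompt("My invoice was charged twice.", labels))
print(parse_label(" Billing.\n", labels))
```

Returning `None` for ambiguous or unrecognized replies lets the caller retry or route the item for review instead of silently accepting a wrong label.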

Possible Applications of Granite3 Dense 8B Instruct

These applications fit settings that need general-purpose language understanding and generation, including multilingual settings in languages such as Japanese, English, and Spanish. The model can also be customized for narrower domains. As with the intended uses above, each application should be evaluated against its specific requirements and tested before deployment.

- text summarization

- text classification

- information extraction

- question-answering
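For information extraction, a common pattern is to ask the model to answer in JSON and then validate the reply, since the output format is not guaranteed. The sketch below rests on these assumptions:

- `parse_extraction` is a hypothetical helper.
- The field names in the example are illustrative.
- The prompt is assumed to have requested a single JSON object.

```python
import json

def parse_extraction(reply, required_fields):
    """Hypothetical helper: pull a JSON object out of a reply and check its fields."""
    # Models sometimes wrap JSON in extra prose, so take the outermost braces.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(reply[start:end + 1])  # JSONDecodeError subclasses ValueError
    missing = [field for field in required_fields if field not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data

reply = 'Here is the result:\n{"name": "Ada", "date": "2024-10-21"}'
print(parse_extraction(reply, ["name", "date"]))
```

Raising on malformed or incomplete output makes failures explicit, which matters when extracted fields feed downstream systems.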

Quantized Versions & Hardware Requirements of Granite3 Dense 8B Instruct

The medium q4 version of Granite3 Dense 8B Instruct is recommended to run on a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of mid-range graphics cards. This quantization trades a small amount of precision for lower memory use and faster inference. A host system with 32GB of RAM and adequate cooling is also recommended. Verify your GPU's VRAM and power supply before deploying.
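As a rough check on the 16GB VRAM figure above: inference memory is dominated by the quantized weights plus the key-value (KV) cache, with activations and runtime overhead on top. The sketch below estimates the first two terms under these assumptions:

- The layer count, KV-head count, and head dimension are hypothetical placeholders; verify them against the model's published configuration.
- 4.5 bits per weight approximates a q4 format that stores per-block scales alongside the 4-bit values.
- The nominal 8 billion parameter count is used.

```python
GIB = 1024 ** 3

def weight_bytes(n_params, bits_per_weight):
    """Approximate storage for model weights at a given precision."""
    return n_params * bits_per_weight / 8

def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Keys and values (factor 2) cached for every layer, KV head, and position."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

# Hypothetical configuration for illustration; check the model's config.json.
weights = weight_bytes(8e9, 4.5)
kv = kv_cache_bytes(n_layers=40, n_kv_heads=8, head_dim=128, seq_len=4096)
print(f"weights ~{weights / GIB:.1f} GiB, full-context KV cache ~{kv / GIB:.2f} GiB")
```

Under these assumptions, the weights come to roughly 4 to 5 GiB and a full 4,096-token KV cache to well under 1 GiB. The 16GB guidance therefore leaves headroom for runtime overhead, batching, and other workloads. Grouped-query attention helps keep the cache term small, because only the KV heads, not every query head, are cached.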

Available quantizations: fp16, q2, q3, q4, q5, q6, q8.
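The q-labels above indicate roughly how many bits are stored per weight. The sketch below illustrates the underlying idea, symmetric block-wise quantization: each block of weights is stored as small signed integers plus one floating-point scale. Real quantized file formats differ in block size, in how scales are encoded, and in whether offsets are used. This is an illustration of the technique, not the exact format of these files.

```python
def quantize_block(values, bits=4):
    """Symmetric quantization of one block to signed integers plus one scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    amax = max(abs(v) for v in values)
    scale = amax / qmax if amax > 0 else 1.0
    # Round to the nearest grid point and clamp to the signed integer range.
    q = [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize_block(q, scale):
    """Recover approximate float weights from integers and the block scale."""
    return [x * scale for x in q]

block = [0.1, -0.5, 0.33, 0.7, -0.02, 0.0]
q, scale = quantize_block(block)
print(q, scale, dequantize_block(q, scale))
```

Fewer bits mean a coarser grid. With `bits=2`, the integers range only from -2 to 1, so reconstruction error grows. This is why lower-bit versions such as q2 and q3 typically give up more quality for their smaller size than q6 or q8.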

Conclusion

Granite3 Dense 8B Instruct is a mid-scale, open-source large language model with 8 billion parameters and a 4,096-token context length, designed for text-to-text generation tasks. It supports multiple languages and is released under the Apache 2.0 license, offering flexibility for diverse applications.

References

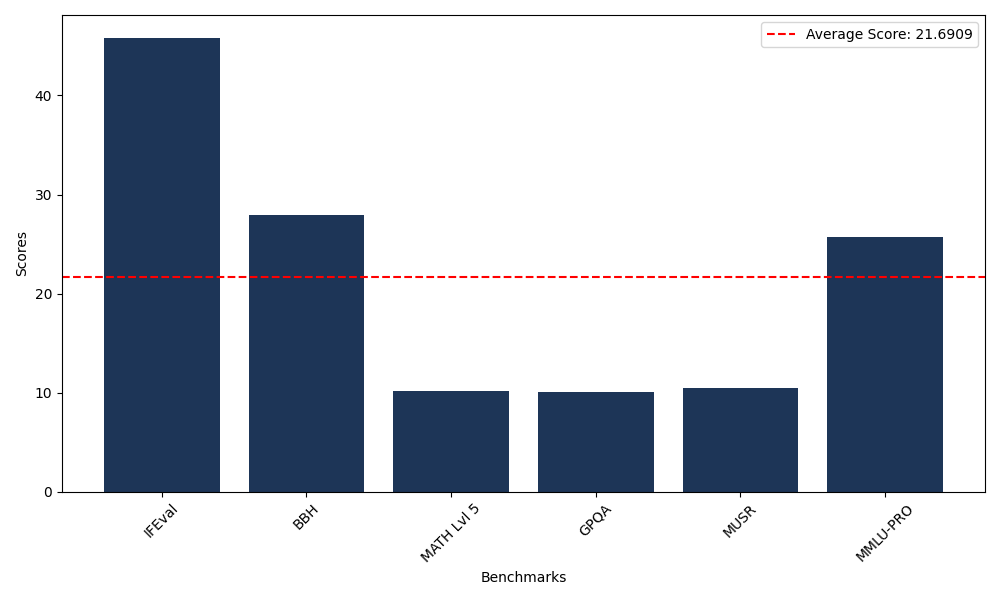

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 45.83 |
| BIG-Bench Hard (BBH) | 27.97 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 10.12 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.07 |
| Multistep Soft Reasoning (MuSR) | 10.45 |
| MMLU-Pro, a harder variant of Massive Multitask Language Understanding (MMLU-PRO) | 25.70 |