Granite3.1 Dense 8B Instruct - Model Details

Granite3.1 Dense 8B Instruct is a large language model from IBM's Granite model family. With 8B parameters, it is designed for efficient, scalable performance. The model is released under the Apache License 2.0, which permits flexible use and modification. It was trained on over 12 trillion tokens and is intended to handle complex tasks and produce high-quality responses.

Description of Granite3.1 Dense 8B Instruct

Granite3.1 Dense 8B Instruct is fine-tuned from Granite-3.1-8B-Base. The base model extends its predecessor's context length from 4K to 128K tokens through a progressive training strategy that increases the supported context step by step while adjusting the RoPE theta (base frequency). This long-context pre-training stage used approximately 500B tokens. The model is a decoder-only dense transformer that uses grouped-query attention (GQA), rotary position embeddings (RoPE), SwiGLU activations in the MLP, RMSNorm, and shared input/output embeddings. It was trained in three stages on a mix of open-source and proprietary data, with the final stage adding synthetic long-context data. Training was carried out on IBM's Blue Vela supercomputing cluster, which is equipped with NVIDIA H100 GPUs.
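Why adjusting RoPE theta helps with longer contexts can be illustrated with a short sketch. RoPE rotates each pair of query/key dimensions at a frequency of theta^(-2i/d). Raising theta slows the slowest rotations, so positions far apart remain distinguishable. The head dimension of 128 and the theta values below are illustrative assumptions, not IBM's documented training schedule.

```python
import math


def rope_inv_freq(head_dim: int, theta: float) -> list[float]:
    """Per-pair rotation frequencies used by rotary position embeddings (RoPE)."""
    # Dimension pair i rotates by (position * theta ** (-2i / head_dim)) radians.
    return [theta ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def longest_wavelength(head_dim: int, theta: float) -> float:
    """Positions needed for the slowest-rotating pair to complete one full turn."""
    return 2 * math.pi / min(rope_inv_freq(head_dim, theta))


# Illustrative values only: head_dim=128 and these theta values are assumptions,
# not the schedule IBM used for Granite.
for theta in (1e4, 1e5, 1e6, 1e7):
    wl = longest_wavelength(128, theta)
    print(f"theta={theta:>12,.0f}  longest wavelength ~ {wl:,.0f} positions")
```

Each tenfold increase in theta stretches the longest wavelength by almost the same factor. This is the intuition behind raising theta in stages as the training context grows.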

Parameters & Context Length of Granite3.1 Dense 8B Instruct

Granite3.1 Dense 8B Instruct has 8B parameters, which places it in the mid-scale range of open-source LLMs and balances performance against resource efficiency for moderately complex tasks. Its 128K-token context window is in the very long range. It can take in entire long documents, but filling that window requires substantial memory and compute. Together, these properties let the model work with long, intricate inputs at a moderate parameter count.

- Parameter Size: 8B

- Context Length: 128K tokens
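The memory cost of the 128K window mentioned above can be estimated from the size of the key/value (KV) cache. The sketch below assumes 40 layers, 8 KV heads (GQA), a head dimension of 128, and fp16 cache values. These architecture numbers are assumptions and should be checked against the model's config.json. `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """KV cache size: one K and one V vector per layer, per KV head, per token."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# Assumed architecture values (verify against the model's config.json).
LAYERS, KV_HEADS, HEAD_DIM = 40, 8, 128

for ctx in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(ctx, LAYERS, KV_HEADS, HEAD_DIM) / 2**30
    print(f"{ctx:>7} tokens -> {gib:5.2f} GiB of fp16 KV cache")
```

Under these assumptions, a full 131,072-token context needs 20 GiB of fp16 cache in addition to the weights. GQA keeps this manageable. If each of 32 query heads (also assumed) had its own K/V head, the cache would be four times larger.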

Possible Intended Uses of Granite3.1 Dense 8B Instruct

The model may be useful for summarization, text classification, extraction, question answering, and long-context processing. It supports multiple languages, including Chinese, Italian, Korean, Spanish, and others, which suggests applications in cross-lingual projects and content adaptation. Its long context window may also make it suitable for analyzing complex documents or sustaining extended dialogues. None of these uses has been validated for a specific scenario. The model's design and training data can affect how well it performs in a given domain, so each use should be tested before deployment.

- summarization

- text classification

- extraction

- question-answering

- long-context tasks
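For the summarization and long-context uses listed above, a document that exceeds the context window must be split first. The sketch below assumes roughly 4 characters per token, a common heuristic for English text that does not describe Granite's tokenizer, and a nominal 128,000-token budget. `chunk_for_context` is a hypothetical helper. For exact counts, use the model's own tokenizer.

```python
def chunk_for_context(text: str, context_tokens: int = 128_000,
                      reserve_tokens: int = 4_000,
                      chars_per_token: float = 4.0) -> list[str]:
    """Split text into pieces that should fit the context window.

    reserve_tokens leaves room for the instructions and the model's answer.
    The character budget is a rough estimate, not an exact token count.
    """
    budget_chars = int((context_tokens - reserve_tokens) * chars_per_token)
    if budget_chars <= 0:
        raise ValueError("reserve_tokens must be smaller than context_tokens")
    chunks, start = [], 0
    while start < len(text):
        end = min(start + budget_chars, len(text))
        if end < len(text):
            # Prefer to break at the last paragraph boundary inside the window.
            cut = text.rfind("\n\n", start, end)
            if cut > start:
                end = cut
        chunks.append(text[start:end])
        start = end
    return chunks


if __name__ == "__main__":
    sample = "Section text.\n\n" * 50_000
    parts = chunk_for_context(sample)
    print(len(parts), [len(p) for p in parts])
```

Joining the chunks reproduces the original text exactly. Each chunk can then be summarized on its own, and the partial summaries can be combined in a final pass.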

Possible Applications of Granite3.1 Dense 8B Instruct

Potential applications include summarizing lengthy documents, where the 128K context window lets the model consider an entire document at once. Others include classifying text in multiple languages, answering complex questions at a parameter size that keeps resource demands moderate, and extracting structured data from long texts. Each application should be evaluated and tested against its specific requirements before deployment.

- summarization

- text classification

- question-answering

- data extraction
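For multilingual text classification, one option is to serve the model locally and send it a chat request. The sketch below assumes the model runs under Ollama with the tag `granite3.1-dense:8b`. This page states neither, so treat both as assumptions. The request body follows the shape of Ollama's `/api/chat` endpoint. The code only builds the JSON, so it runs without a server. `build_classification_request` is a hypothetical helper.

```python
import json


def build_classification_request(text: str, labels: list[str],
                                 model: str = "granite3.1-dense:8b") -> str:
    """Build a JSON body for a chat-style classification prompt (no network call)."""
    system = ("Classify the user's text into exactly one of these labels: "
              + ", ".join(labels) + ". Reply with the label only.")
    payload = {
        "model": model,  # assumed local model tag; adjust to your setup
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": text},
        ],
        "stream": False,  # ask for a single response instead of streamed chunks
    }
    # ensure_ascii=False keeps non-English text (e.g. accented characters) readable.
    return json.dumps(payload, ensure_ascii=False)


if __name__ == "__main__":
    print(build_classification_request("El envío llegó dañado.",
                                       ["billing", "shipping", "technical"]))
```

Constraining the reply to one label from a fixed list makes the output easy to parse and compare across languages. The labels should still be checked against a labeled test set before the setup is relied on.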

Quantized Versions & Hardware Requirements of Granite3.1 Dense 8B Instruct

The q4 (4-bit) version is a middle ground between precision and memory use. It targets GPUs with 12GB–24GB of VRAM, with at least 16GB recommended for smooth operation, and systems with 32GB or more of RAM. Actual requirements depend on the workload, especially the context length used, so test on the target hardware before committing to a configuration.

- fp16, q2, q3, q4, q5, q6, q8
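A rough estimate of weight memory for each version listed above is the parameter count multiplied by the bits per weight. The sketch below uses nominal bit widths and a nominal 8 billion parameters. Both are simplifications: real quantized files (for example GGUF k-quants) also store per-block scales, so they are somewhat larger. `weight_memory_gb` is a hypothetical helper.

```python
# Nominal bits per weight. Real quantized formats add per-block scale data,
# so treat these figures as lower bounds.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


PARAMS = 8e9  # nominal "8B"; the exact parameter count differs slightly

for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_memory_gb(PARAMS, bits):4.1f} GB of weights")
```

By this estimate, the q4 weights take roughly 4 GB. The rest of a 16GB VRAM budget goes to the KV cache, activations, and runtime overhead, and the KV cache grows with the context length actually used.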

Conclusion

Granite3.1 Dense 8B Instruct is a mid-scale language model with 8B parameters and a 128K-token context window, built for efficient performance and multilingual use. Its architecture supports a range of applications, particularly those that need moderate resource usage and long-text handling.

References

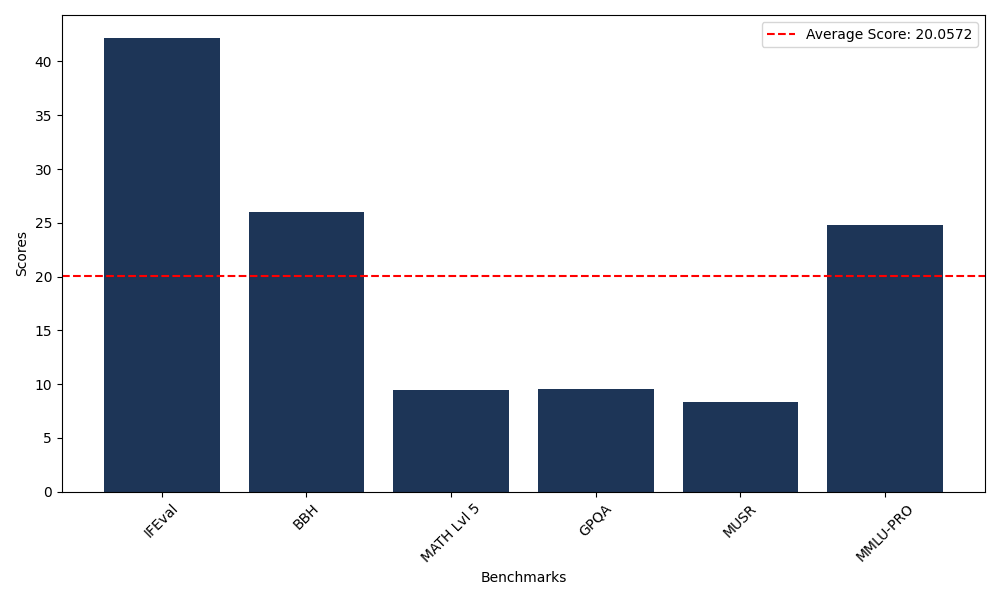

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 42.21 |
| Big-Bench Hard (BBH) | 26.02 |
| MATH Level 5 (MATH Lvl 5) | 9.44 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.51 |
| Multistep Soft Reasoning (MuSR) | 8.36 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 24.80 |
