Granite3.1 Moe 1B Instruct - Model Details

Granite3.1 Moe 1B Instruct is a large language model developed by IBM as part of its Granite model family. With 1B parameters, it is designed for efficient, scalable inference. The model is released under the Apache License 2.0 (Apache-2.0), which permits broad usage and modification. It targets long-context tasks and supports multiple languages, making it suitable for a range of applications.

Description of Granite3.1 Moe 1B Instruct

The description published for this model is that of Granite-3.1-8B-Base, which extends the context length of its predecessor from 4K to 128K through a progressive training strategy, enabling long-context tasks. It is trained on a mix of open-source and proprietary data across three stages. Granite-3.1-8B-Base employs a decoder-only dense transformer architecture with GQA, RoPE, and SwiGLU-based MLP; Granite3.1 Moe 1B Instruct, as its name indicates, is a mixture-of-experts (MoE) variant, so the "dense" label does not carry over to it. The model supports 12 primary languages and is optimized for text-to-text generation tasks such as summarization, classification, and question-answering.

Parameters & Context Length of Granite3.1 Moe 1B Instruct

Granite3.1 Moe 1B Instruct has 1B parameters, which keeps inference cheap but suits it to simpler tasks. Its 128K context length lets it process long texts, though memory and compute use grow with the amount of context actually filled.

- Granite3.1 Moe 1B Instruct: 1B parameters (small model, efficient for simple tasks), 128K context length (long context, suited to long texts but resource-intensive).

Possible Intended Uses of Granite3.1 Moe 1B Instruct

Granite3.1 Moe 1B Instruct is a multilingual model with potential applications in summarization of long documents, text classification and extraction, and question-answering that draws on its long-context support. It handles Chinese, Italian, Korean, Spanish, French, Portuguese, Czech, English, Dutch, Arabic, Japanese, and German, which makes it a candidate for cross-lingual projects. These uses are still under exploration and need validation in each specific scenario. The model's design suggests it could suit workloads that require efficient processing of extended text, but thorough testing is necessary before deployment.

- summarization of long documents

- text classification and extraction tasks

- question-answering with long-context support
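As a rough illustration of the long-document use case, the sketch below checks whether a document plausibly fits in the 128K-token window before building a chat-style summarization request. The 4-characters-per-token ratio, the reserved output budget, the prompt wording, and the function names are all assumptions made for illustration; they are not properties of the model or of any particular runtime, and a real tokenizer count should replace the estimate.

```python
CONTEXT_TOKENS = 128 * 1024   # 128K context length stated for the model
CHARS_PER_TOKEN = 4           # assumption: crude average, varies by language
RESERVED_FOR_OUTPUT = 1024    # assumption: tokens kept free for the summary

SYSTEM_PROMPT = "Summarize the user's document in five sentences."


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def build_summary_messages(document: str) -> list[dict[str, str]]:
    """Return chat messages for summarization, or raise if the text is too long."""
    budget = CONTEXT_TOKENS - RESERVED_FOR_OUTPUT
    needed = estimate_tokens(SYSTEM_PROMPT) + estimate_tokens(document)
    if needed > budget:
        raise ValueError(f"input needs ~{needed} tokens, budget is {budget}")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": document},
    ]
```

The returned list follows the common role/content chat layout, so it can be passed to whichever client is used to serve the model. Documents that exceed the budget would need to be chunked or truncated first.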

Possible Applications of Granite3.1 Moe 1B Instruct

Possible applications of Granite3.1 Moe 1B Instruct include summarizing long documents, classifying and extracting text, and answering questions over long contexts. Its multilingual support, covering Chinese, Italian, Korean, and others, makes it a candidate for cross-lingual projects. These applications are still under exploration, and each must be evaluated and tested for the specific task before use.

- summarization of long documents

- text classification and extraction tasks

- question-answering with long-context support

Quantized Versions & Hardware Requirements of Granite3.1 Moe 1B Instruct

With q4 quantization, Granite3.1 Moe 1B Instruct offers a balance between precision and performance; the recommended setup is a GPU with at least 8GB VRAM. It can also run on systems with a multi-core CPU and sufficient system memory, though specific requirements vary with workload. Users should check their hardware for compatibility: more aggressive quantization (fewer bits per weight, such as q2 or q3) lowers memory use and may reduce precision, while q8 and fp16 preserve more precision at a higher memory cost.

- fp16, q2, q3, q4, q5, q6, q8
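To make the trade-off between the listed versions concrete, the following sketch estimates weight storage as parameters × bits per weight ÷ 8. It assumes the nominal 1B parameter count from the text and treats each label as its nominal bit width; real quantized files carry extra overhead for scales and metadata, so actual sizes will be somewhat larger. The function name is illustrative.

```python
PARAMS = 1_000_000_000  # nominal "1B" from the text; the exact count may differ

# Assumption: nominal bits per weight implied by each label. Real quantized
# formats store additional scales and metadata on top of this.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(label: str, params: int = PARAMS) -> float:
    """Approximate weight storage in GiB for one quantization label."""
    return params * BITS_PER_WEIGHT[label] / 8 / 2**30


for label in BITS_PER_WEIGHT:
    print(f"{label:>4}: ~{weight_gib(label):.2f} GiB")
```

These figures cover weights only. The KV cache for long contexts and runtime buffers come on top, so total memory use depends heavily on how much of the 128K window is filled.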

Conclusion

Granite3.1 Moe 1B Instruct is a 1B-parameter model with a 128K context length, designed for long-context tasks and multilingual use across 12 languages, and released under the Apache License 2.0. It is optimized for text-to-text generation, including summarization, classification, and question-answering; its potential applications require further evaluation.

References

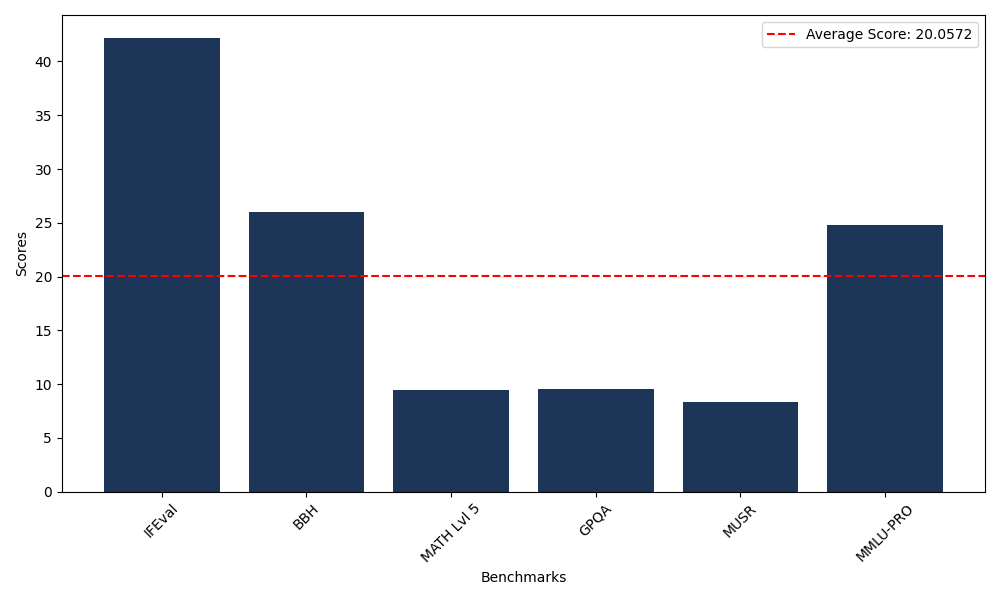

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 42.21 |
| BIG-Bench Hard (BBH) | 26.02 |
| MATH Level 5 (MATH Lvl 5) | 9.44 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.51 |
| Multistep Soft Reasoning (MuSR) | 8.36 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 24.80 |
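For a single summary figure, the six scores above can be averaged. The snippet below computes an unweighted mean; treating the benchmarks as equally weighted and on a comparable scale is an assumption here, not something the table states.

```python
# Scores copied from the benchmark table above.
scores = {
    "IFEval": 42.21,
    "BBH": 26.02,
    "MATH Lvl 5": 9.44,
    "GPQA": 9.51,
    "MuSR": 8.36,
    "MMLU-Pro": 24.80,
}

# Unweighted mean across the six benchmarks.
average = sum(scores.values()) / len(scores)
print(f"mean score: {average:.2f}")  # prints "mean score: 20.06"
```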
