InternLM2 1.8B

InternLM2 1.8B is a large language model developed by the InternLM team at Shanghai AI Laboratory. With 1.8 billion parameters, it is designed to deliver strong performance on complex reasoning tasks. The model is released under the Apache License 2.0, which permits flexible use and modification. Its primary focus is on reasoning, which makes it a candidate for applications that require multi-step analysis.

Description of InternLM2 1.8B

InternLM2-1.8B is the 1.8-billion-parameter version of the second-generation InternLM series and is designed to handle ultra-long contexts of up to 200,000 characters. Compared with earlier iterations, it shows significant improvements in reasoning, mathematics, and coding. Three open-source versions are available: a foundation model, a chat model refined through supervised fine-tuning (SFT), and a further aligned chat model with stronger instruction following and function calling. This range makes the model adaptable to diverse applications.

Parameters & Context Length of InternLM2 1.8B

InternLM2-1.8B has 1.8 billion parameters, which places it in the small-to-mid-scale range of open-source LLMs; models of this size typically prioritize efficiency and are best suited to simpler tasks. Its 195,000-token context length falls into the very-long-context category: the model can process extensive texts, but filling that window requires significant memory and compute. Together, these properties let the model trade off performance against resource demands while still handling long inputs.

- Parameter Size: 1.8B

- Context Length: 195k tokens
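Much of the memory cost of a long window comes from the key-value (KV) cache: for every token, each layer stores one key vector and one value vector per KV head. The sketch below applies that standard formula. The architecture values it uses (24 layers, 8 KV heads, head dimension 128) are illustrative assumptions, not figures from this document, so check them against the model's published configuration before relying on the numbers.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size in bytes for a single sequence.

    The leading factor of 2 accounts for storing both keys and values;
    bytes_per_elem=2 corresponds to an fp16/bf16 cache.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem


# ASSUMED architecture values, for illustration only; verify them against
# the model's actual config before drawing conclusions.
ASSUMED_LAYERS = 24
ASSUMED_KV_HEADS = 8
ASSUMED_HEAD_DIM = 128

for context in (4_096, 32_768, 195_000):
    size = kv_cache_bytes(ASSUMED_LAYERS, ASSUMED_KV_HEADS, ASSUMED_HEAD_DIM, context)
    print(f"{context:>7} tokens -> {size / 2**30:.2f} GiB")
```

Under these assumed values, each token costs 96 KiB of fp16 cache, so a full 195,000-token window needs roughly 18 GiB for the cache alone, on top of the model weights.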

Possible Intended Uses of InternLM2 1.8B

InternLM2-1.8B is a versatile large language model with potential applications in chatbot development, code generation, and general question answering. Its design suggests it could support interactive conversations, help write or debug code, and answer a wide range of queries. These uses would still require thorough testing and adaptation to specific scenarios, because the model's performance in real-world settings has not been fully explored. The model might also extend to other tasks, but further research would be needed to confirm its effectiveness there.

- chatbot

- code generation

- general question answering
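For chatbot use, a conversation has to be serialized into the prompt format the chat model was trained on. The sketch below assumes the ChatML-style `<|im_start|>` / `<|im_end|>` role markers that InternLM2 chat models are generally reported to use; treat the exact markers as an assumption and confirm them against the tokenizer's chat template. The function name `build_chat_prompt` is hypothetical.

```python
from typing import Dict, List

# ASSUMPTION: ChatML-style role markers; confirm against the model's
# tokenizer chat template before use.
IM_START = "<|im_start|>"
IM_END = "<|im_end|>"
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_chat_prompt(messages: List[Dict[str, str]]) -> str:
    """Serialize role/content messages into a ChatML-style prompt string.

    The prompt ends with an open assistant turn, so the model's next
    tokens are its reply.
    """
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"{IM_START}{role}\n{message['content']}{IM_END}\n")
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_chat_prompt([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain what a context window is."},
    ])
    print(prompt)
```

Keeping the formatting in one function makes it easy to swap in the tokenizer's own template later if the assumed markers turn out to differ.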

Possible Applications of InternLM2 1.8B

InternLM2-1.8B could also be applied to educational support, content creation, technical documentation, and interactive learning tools. Examples include generating educational materials, drafting creative content, and explaining technical topics, as well as other tasks that involve structured reasoning or language-based problem solving. Whether the model suits any of these areas depends on the specific implementation, so each use case would need rigorous testing and validation before deployment.

- educational support

- content creation

- technical documentation

- interactive learning tools

Quantized Versions & Hardware Requirements of InternLM2 1.8B

The medium q4 version of InternLM2-1.8B is optimized for a balance between precision and performance and requires a GPU with at least 8GB of VRAM for efficient operation. This configuration suits systems with moderate resources, although more VRAM may be needed for complex tasks or long inputs, since the key-value cache grows with context length. At least 32GB of system memory and adequate cooling are also recommended for stable performance.

- fp16, q2, q3, q4, q5, q6, q8
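The quantization levels listed above mainly change how many bits each weight occupies. A back-of-the-envelope estimate of weight storage is parameters × bits ÷ 8. The sketch below applies it using nominal bit widths, which is an assumption: real quantized files add per-block scale factors and often keep some tensors at higher precision, so actual sizes are somewhat larger, and runtime memory also includes the KV cache and activations.

```python
PARAMS = 1.8e9  # parameter count stated for InternLM2-1.8B

# Nominal bits per weight for each listed format. ASSUMPTION: this ignores
# quantization metadata such as block scales, so real files are larger.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gigabytes(num_params: float, bits_per_weight: float) -> float:
    """Return approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gigabytes(PARAMS, bits):.2f} GB")
```

By this estimate the fp16 weights take about 3.6 GB and the q4 weights about 0.9 GB before overhead, so on an 8GB GPU most of the remaining memory is available for the KV cache at longer contexts.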

Conclusion

InternLM2-1.8B is a large language model with 1.8 billion parameters and a 195,000-token context length, offering strong reasoning and coding capabilities through its open-source foundation, chat, and further aligned versions. Its design emphasizes efficiency and versatility, making it suitable for a range of applications while requiring careful evaluation for specific use cases.

References

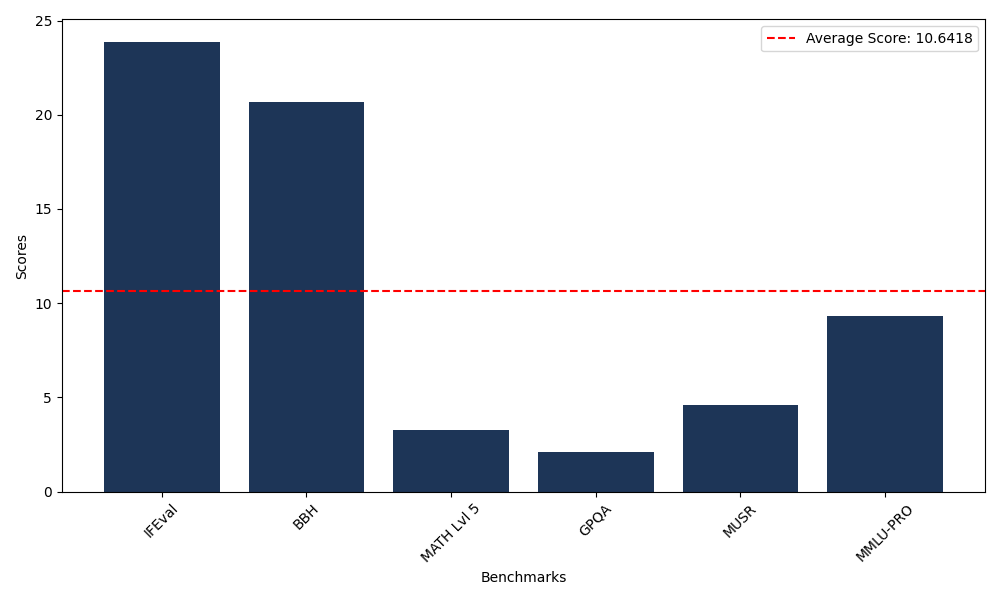

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 23.87 |
| Big Bench Hard (BBH) | 20.67 |
| MATH, Level 5 subset (MATH Lvl 5) | 3.25 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.13 |
| Multistep Soft Reasoning (MuSR) | 4.61 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 9.33 |
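To summarize the benchmark table in a single number, the six scores can be averaged. The sketch below computes an unweighted mean and identifies the strongest and weakest results; whether this matches any leaderboard's own "average" column is an assumption, since the source and normalization of these scores are not stated here.

```python
from statistics import mean

# Scores copied from the benchmark table.
SCORES = {
    "IFEval": 23.87,
    "BBH": 20.67,
    "MATH Lvl 5": 3.25,
    "GPQA": 2.13,
    "MuSR": 4.61,
    "MMLU-PRO": 9.33,
}

average = mean(SCORES.values())
best = max(SCORES, key=SCORES.get)
worst = min(SCORES, key=SCORES.get)
print(f"Unweighted mean: {average:.2f}")
print(f"Highest: {best} ({SCORES[best]}), lowest: {worst} ({SCORES[worst]})")
```

With these values the unweighted mean comes to about 10.64, and the gap between IFEval and GPQA shows how unevenly the model performs across task types.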