Internlm2 7B - Model Details

Internlm2 7B is a large language model developed by InternLM, featuring 7B parameters and released under the Apache License 2.0 (Apache-2.0). Its design emphasizes strong reasoning capabilities.

Description of Internlm2 7B

Internlm2 7B is the 7 billion parameter version of the second generation of the InternLM series, which also includes a 1.8 billion parameter version. The series supports ultra-long contexts of up to 200,000 characters and shows significant improvements in reasoning, mathematics, and coding over previous generations. Releases in the series include a foundation model, a chat model produced by supervised fine-tuning, and a chat model further aligned with online RLHF.

Parameters & Context Length of Internlm2 7B

Internlm2 7B has 7B parameters, which places it in the small-to-mid range of open-source LLMs and keeps its computational requirements moderate. Its 195k context length falls into the very long context category: the model can process extensive text sequences, but filling that window demands substantial memory and processing power. This combination suits applications that need in-depth analysis of long texts while keeping resource use in check.

- Parameter Size: 7B

- Context Length: 195k
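To make the memory cost of the long context window concrete, the sketch below estimates the key/value (KV) cache a transformer keeps for every token in context. It assumes illustrative architecture values (32 layers, 8 key/value heads under grouped-query attention, head dimension 128, fp16 storage); these are not stated on this page and should be checked against the model's published configuration before relying on the numbers.

```python
# Rough KV-cache size estimate for a long context window.
# ASSUMPTION: the default architecture values below are illustrative for a
# 7B InternLM2-style model; verify them against the model's config.json.


def kv_cache_bytes(num_tokens: int,
                   num_layers: int = 32,
                   num_kv_heads: int = 8,
                   head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for num_tokens tokens.

    Every layer stores one key vector and one value vector (the factor 2)
    per KV head per token. bytes_per_value=2 corresponds to fp16/bf16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


if __name__ == "__main__":
    per_token = kv_cache_bytes(1)
    full_window = kv_cache_bytes(195_000)
    print(f"per token:   {per_token / 1024:.0f} KiB")
    print(f"195k tokens: {full_window / 1024**3:.1f} GiB")
```

Under these assumptions each token costs 128 KiB, so a fully used 195k-token window needs roughly 23.8 GiB for the cache alone, on top of the model weights.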

Possible Intended Uses of Internlm2 7B

Internlm2 7B is a general-purpose large language model that may be used for text generation, code generation, and chatbot applications, for example drafting documents and other content, generating code snippets or otherwise assisting with programming tasks, and building conversational interfaces. Each of these uses still needs thorough evaluation against the target application's requirements and constraints to confirm that the model is effective in real-world scenarios.

- text generation

- code generation

- chatbot applications
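For chatbot applications, a multi-turn conversation is usually serialized into a single prompt string before generation. The sketch below assumes the chat variants use a ChatML-style template with `<|im_start|>` and `<|im_end|>` markers; the authoritative format is the chat template shipped with the model's tokenizer, which may also prepend a beginning-of-sequence token. The function name `build_chat_prompt` is hypothetical.

```python
# Minimal sketch of ChatML-style prompt rendering for multi-turn chat.
# ASSUMPTION: <|im_start|>/<|im_end|> markers; check the tokenizer's own
# chat template for the exact format the model was trained on.
from typing import Dict, List

# Each message: {"role": "system" | "user" | "assistant", "content": "..."}
Message = Dict[str, str]


def build_chat_prompt(messages: List[Message], add_generation_prompt: bool = True) -> str:
    """Render a conversation into one prompt string (hypothetical helper)."""
    parts = []
    for msg in messages:
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues from this point.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize this contract in three bullet points."},
    ]
    print(build_chat_prompt(history))
```

In practice, rendering through the tokenizer's bundled template (for example `apply_chat_template` in Hugging Face Transformers) avoids drifting from the format the model was trained on.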

Possible Applications of Internlm2 7B

Possible applications of Internlm2 7B include text generation, code generation, and chatbot development, such as creating dynamic content, assisting with programming tasks, or enhancing conversational interfaces. They may also extend to structured data processing and interactive dialogue systems where adaptability and efficiency are priorities. As with the intended uses, each application must be validated against its specific needs before adoption.

- text generation

- code generation

- chatbot applications

Quantized Versions & Hardware Requirements of Internlm2 7B

Running Internlm2 7B at the medium q4 quantization level, which balances precision and efficiency, calls for a GPU with at least 12 GB of VRAM and 32 GB of system memory for optimal performance. This makes it suitable for systems with moderate hardware, although long contexts or other demanding workloads may require more VRAM.

Quantized versions: fp16, q2, q3, q4, q5, q6, q8
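A rough, weight-only memory estimate for each listed version follows from parameters × bits per weight ÷ 8. The sketch assumes the nominal 7 billion parameter count and nominal bit widths (for example 4 bits for q4); real quantized files carry extra per-block scale data, and inference also needs memory for the KV cache and runtime buffers, so actual requirements are higher.

```python
# Approximate weight-only memory for each listed quantization level.
# ASSUMPTIONS: nominal 7e9 parameters and nominal bits per weight; real
# quantized formats add per-block overhead, and inference needs extra
# memory for the KV cache and activations.

PARAMS = 7_000_000_000

NOMINAL_BITS = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_gib(params: int, bits_per_weight: float) -> float:
    """Weight storage in GiB: params * bits / 8 bytes, divided by 2**30."""
    return params * bits_per_weight / 8 / 1024**3


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: {weight_gib(PARAMS, bits):5.1f} GiB")
```

Under these assumptions, q4 weights come to about 3.3 GiB versus about 13 GiB at fp16, which suggests that much of the recommended 12 GB of VRAM serves as headroom for context and runtime overhead.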

Conclusion

Internlm2 7B is a large language model developed by InternLM, featuring 7B parameters and released under the Apache License 2.0. It supports a 195k token context length, making it suitable for tasks requiring extensive text processing while balancing performance and resource efficiency.

References

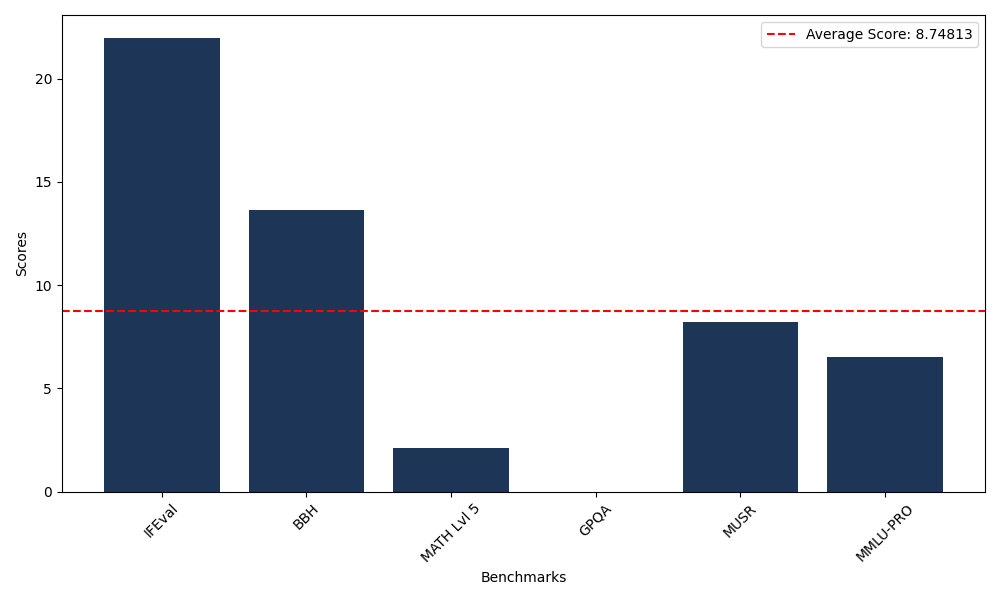

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 21.98 |
| BIG-Bench Hard (BBH) | 13.63 |
| MATH, Level 5 problems (MATH Lvl 5) | 2.11 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 8.23 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 6.54 |
