Llama Pro 8B - Model Details

Llama Pro 8B, developed by Tencent's ARC Lab (Tencent PCG), is a large language model with roughly 8 billion parameters (8.3 billion in full). It is released under the Llama 2 Community License Agreement (LLAMA-2-CLA) and is designed to integrate general language understanding with specialized knowledge in programming and mathematics.

Description of Llama Pro 8B

LLaMA-Pro is a progressive version of the LLaMA model family, expanded with additional Transformer blocks. It starts from LLaMA2-7B, grows to 8.3 billion parameters through the added blocks, and is then further trained on 80 billion tokens of code and math corpora. The aim is to add domain-specific knowledge in programming and mathematics while keeping general language understanding, which targets the model at technical tasks.
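The step from a 7B base to an 8.3 billion parameter model can be checked with a rough parameter count. The sketch below is illustrative only. The dimensions (hidden size 4096, feed-forward size 11008, vocabulary 32000, 32 blocks) and the expansion to 40 blocks are assumptions taken from the published LLaMA 2 and LLaMA-Pro descriptions, not from this page, and `llama_param_count` is a hypothetical helper name.

```python
def llama_param_count(num_blocks, hidden=4096, intermediate=11008, vocab=32000):
    """Approximate parameter count of a LLaMA-style decoder (assumed dimensions)."""
    attention = 4 * hidden * hidden      # query, key, value, and output projections
    mlp = 3 * hidden * intermediate      # gate, up, and down projections
    norms = 2 * hidden                   # two RMSNorm weight vectors per block
    per_block = attention + mlp + norms
    embeddings = 2 * vocab * hidden      # input embedding plus untied output head
    final_norm = hidden
    return num_blocks * per_block + embeddings + final_norm


base = llama_param_count(32)       # assumed block count of LLaMA2-7B
expanded = llama_param_count(40)   # assumed: 8 extra blocks after expansion
print(f"base: {base / 1e9:.2f}B, expanded: {expanded / 1e9:.2f}B")
```

Under these assumptions the expanded count lands close to the 8.3 billion figure quoted above, which is why the model is labeled "8B" even though it starts from a 7B base.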

Parameters & Context Length of Llama Pro 8B

With 8B parameters, Llama Pro 8B sits in the mid-scale category, offering a balance between performance and resource efficiency for tasks of moderate complexity. This size keeps the model deployable on consumer-grade hardware, particularly when quantized. Its 4k-token context length suits short to moderate-length inputs but limits its ability to handle very long texts, because the prompt and the generated output must fit in the same window.

- Parameter Size: 8B

- Context Length: 4k
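Because prompt and output share one window, it helps to budget tokens before generating. The following is a minimal sketch, assuming "4k" means 4,096 tokens (the LLaMA 2 default) and that the prompt has already been tokenized. `max_new_tokens` is a hypothetical helper, not part of any library.

```python
CONTEXT_LENGTH = 4096  # assumption: "4k" means 4,096 tokens


def max_new_tokens(prompt_tokens, context_length=CONTEXT_LENGTH):
    """Return how many tokens can still be generated after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt that already fills or exceeds the window leaves no room to generate.
    return max(context_length - prompt_tokens, 0)


print(max_new_tokens(3000))  # room left after a 3,000-token prompt
print(max_new_tokens(5000))  # prompt longer than the window
```

A prompt that exceeds the window has to be truncated or split before it reaches the model.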

Possible Intended Uses of Llama Pro 8B

Llama Pro 8B is designed to support natural language processing, programming, mathematics, and general language tasks. Because it integrates natural and programming languages, it may be useful where cross-domain understanding matters, such as code generation, problem-solving, or hybrid text analysis. Its architecture and training data might also support experimentation in multilingual or domain-specific reasoning. None of these uses has been validated here, and each would need thorough investigation before being relied on in a specific context.

- NLP tasks

- programming

- mathematics

- general language tasks

- integration of natural and programming languages

Possible Applications of Llama Pro 8B

Possible applications of Llama Pro 8B include code generation, mathematical reasoning, multilingual natural language processing, and hybrid text analysis, for example translating between natural and programming languages or solving complex mathematical problems. The model's design suggests it could adapt to technical and cross-domain scenarios, but each application would require thorough evaluation against its specific requirements before deployment.

- code generation

- mathematical reasoning

- multilingual natural language processing

- hybrid text analysis

Quantized Versions & Hardware Requirements of Llama Pro 8B

The medium-precision q4 version of Llama Pro 8B is listed as requiring a GPU with at least 16GB of VRAM and 32GB of system memory for efficient operation. q4 quantization stores each weight in roughly 4 bits instead of the 16 bits used by fp16, which cuts weight memory to about a quarter at some cost in precision. This makes the model practical on mid-range hardware while keeping inference speeds reasonable.

Available versions: fp16, q2, q3, q4, q5, q6, q8
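To compare the versions above, weight memory can be estimated as parameter count times bits per weight. This is a rough sketch under stated assumptions. It uses nominal bit widths (real quantization formats add a small per-block overhead), takes the 8.3 billion parameter figure from the description, and ignores the KV cache and activations. `NOMINAL_BITS` and `weight_memory_gib` are hypothetical names.

```python
# Nominal bits per weight for each listed version (assumption: no format overhead).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

NUM_PARAMS = 8.3e9  # parameter count quoted in the model description


def weight_memory_gib(num_params, bits_per_weight):
    """Estimate the memory needed for the weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: {weight_memory_gib(NUM_PARAMS, bits):6.2f} GiB")
```

The estimate covers weights only, so actual requirements are higher once the KV cache, activations, and runtime overhead are included.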

Conclusion

Llama Pro 8B is a large language model with roughly 8 billion parameters and a 4k-token context length, designed to integrate general language understanding with specialized knowledge in programming and mathematics. Its architecture targets cross-domain tasks like code generation and hybrid text analysis, and its quantized versions allow a trade-off between precision and hardware requirements. Suitability for any particular technical application still needs to be confirmed by evaluation.

References

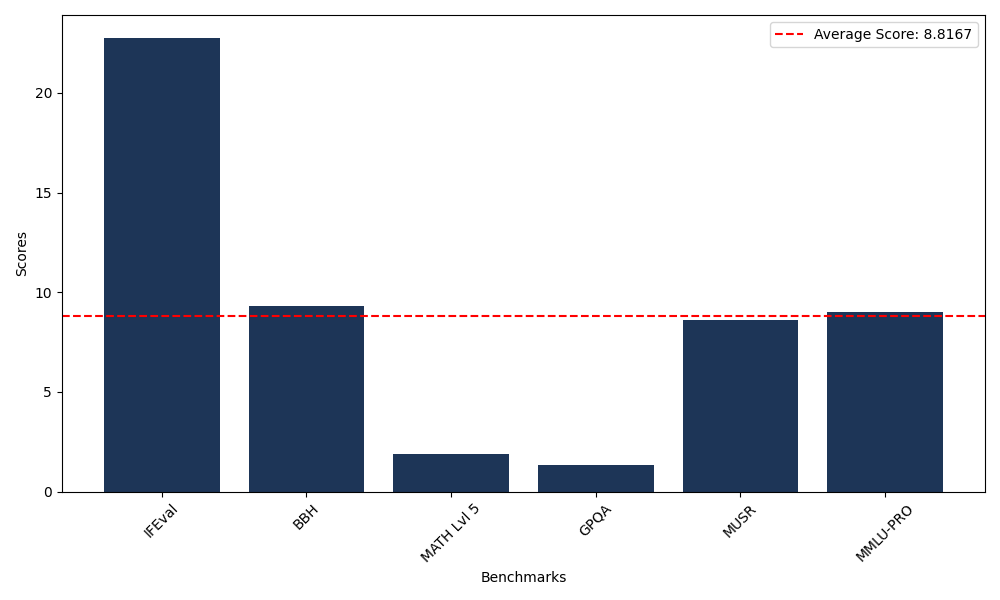

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 22.77 |
| BIG-Bench Hard (BBH) | 9.29 |
| MATH, Level 5 problems (MATH Lvl 5) | 1.89 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.34 |
| Multistep Soft Reasoning (MuSR) | 8.59 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 9.01 |
