Llama Pro 8B Instruct - Model Details

Llama Pro 8B Instruct is a large language model with 8B parameters, developed by ARC Lab, Tencent PCG. It is released under the Llama 2 Community License Agreement (LLAMA-2-CLA) and is designed for instruction-following tasks. The model specializes in combining general language understanding with domain-specific knowledge in programming and mathematics.

Description of Llama Pro 8B Instruct

LLaMA-PRO-Instruct is a large language model with 8.3 billion parameters developed by the Tencent ARC team. It builds on the LLaMA2-7B foundation and uses a block expansion technique to add capacity. The model specializes in programming and mathematical reasoning while remaining versatile on general language tasks. It is trained on a diverse dataset of over 80 billion tokens that includes extensive coding and mathematical content.
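The description above only names "block expansion". As we understand the LLaMA Pro approach, new Transformer blocks are interleaved among the original LLaMA2-7B blocks, each new block is initialized so that it starts as an identity mapping (its output projection is zeroed, so the residual connection passes the input through unchanged), and then only the new blocks are trained on the additional code and math data. The toy sketch below assumes that layout (32 original blocks, one new block after every 4, for 40 in total) and the standard Llama 2 7B dimensions (`d_model=4096`, `d_ff=11008`). The function names are hypothetical; this is an illustration of the idea, not the authors' implementation.

```python
from typing import List


def expansion_plan(num_blocks: int = 32, num_groups: int = 8) -> List[str]:
    """Interleave one new block after each group of original blocks (assumed layout)."""
    if num_blocks % num_groups != 0:
        raise ValueError("num_blocks must be divisible by num_groups")
    group_size = num_blocks // num_groups
    plan: List[str] = []
    for i in range(num_blocks):
        plan.append(f"orig_{i}")  # original LLaMA2-7B block (kept frozen in this scheme)
        if (i + 1) % group_size == 0:
            plan.append(f"new_{i // group_size}")  # added block, trained on the new data
    return plan


def residual_block(x: List[float], w_in: List[List[float]], w_out: List[List[float]]) -> List[float]:
    """Toy residual block: x + W_out @ relu(W_in @ x)."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in w_in]
    delta = [sum(w * h for w, h in zip(row, hidden)) for row in w_out]
    return [v + d for v, d in zip(x, delta)]


def llama_block_params(d_model: int = 4096, d_ff: int = 11008) -> int:
    """Parameters in one Llama 2 decoder block (no biases)."""
    attention = 4 * d_model * d_model  # q, k, v, o projections
    mlp = 3 * d_model * d_ff  # gate, up, down projections
    norms = 2 * d_model  # two RMSNorm weight vectors
    return attention + mlp + norms


plan = expansion_plan()
x = [1.0, -2.0, 0.5]
w_in = [[0.3, 0.1, -0.2], [0.5, -0.4, 0.9]]
w_out_zero = [[0.0, 0.0] for _ in x]  # zero-initialized output projection
added = 8 * llama_block_params()

print(len(plan))  # 40
print(residual_block(x, w_in, w_out_zero) == x)  # True: the new block starts as an identity
print(added)  # 1619066880, i.e. about 1.6B extra parameters
```

Under these assumptions, eight added blocks contribute roughly 1.6B parameters on top of the roughly 6.7B in LLaMA2-7B, which is consistent with the 8.3 billion figure quoted above.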

Parameters & Context Length of Llama Pro 8B Instruct

Llama Pro 8B Instruct has 8B parameters, placing it in the mid-scale category of open-source LLMs and offering a balance between performance and resource efficiency for tasks of moderate complexity. Its 4k context length falls into the short-context range, so it suits concise tasks but is less effective for extended text processing. The parameter count supports versatile general language understanding alongside programming and mathematical reasoning, though the context window limits its ability to handle very long documents or long multi-step tasks.

- Parameter Size: 8B (mid-scale, balanced performance for moderate complexity)

- Context Length: 4k (short context, ideal for brief tasks but limited for long texts)
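Because prompt and output share the same 4k window, a client has to budget tokens between them and split longer inputs. The sketch below assumes the window is exactly 4,096 tokens (the Llama 2 size) and that the text has already been converted to integer token IDs by some tokenizer (not shown). Both helper names are hypothetical, and overlapping chunking is a generic workaround, not a feature of the model.

```python
from typing import List

CONTEXT_LIMIT = 4096  # assumed exact size of the "4k" window (Llama 2 uses 4,096 tokens)


def max_new_tokens(prompt_tokens: int, requested: int, limit: int = CONTEXT_LIMIT) -> int:
    """Clamp the generation length so prompt + output fit inside the window."""
    if prompt_tokens >= limit:
        raise ValueError("prompt alone fills the context window; shorten or chunk it")
    return min(requested, limit - prompt_tokens)


def chunk_tokens(tokens: List[int], chunk_size: int, overlap: int) -> List[List[int]]:
    """Split a long token sequence into overlapping windows of at most chunk_size tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks: List[List[int]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last window already reaches the end of the sequence
    return chunks
```

For example, a 3,000-token prompt leaves room for at most 1,096 generated tokens, so `max_new_tokens(3000, 2000)` returns 1096.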

Possible Intended Uses of Llama Pro 8B Instruct

Llama Pro 8B Instruct is a versatile model aimed at complex NLP challenges, programming, mathematical reasoning, and general language processing. Its 8B parameters and specialized training make it a candidate for tasks that need domain knowledge in coding and math, though its effectiveness in these areas still needs further evaluation. It may also serve natural language understanding applications, but its 4k context length may limit its usefulness with long or highly detailed texts. While its design suggests value in combining general language skills with technical domains, any such application must be thoroughly tested before deployment.

- complex NLP challenges

- programming

- mathematical reasoning

- general language processing
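One way to try these uses locally is through a model runner that exposes an HTTP API. The sketch below assumes the model is served by Ollama on its default port and uses Ollama's `/api/generate` endpoint; the tag `llama-pro:instruct` is an assumption, so check the name your installation actually lists. Only the Python standard library is used.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint
MODEL_TAG = "llama-pro:instruct"  # assumed tag; confirm with `ollama list`


def build_request(prompt: str, num_ctx: int = 4096) -> dict:
    """Build a non-streaming generate request that keeps the 4k context window."""
    return {
        "model": MODEL_TAG,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {"num_ctx": num_ctx, "temperature": 0},  # deterministic-leaning output
    }


def generate(prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to the local server and return the generated text."""
    data = json.dumps(build_request(prompt)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, a programming prompt such as `generate("Write a Python function that returns the n-th Fibonacci number.")` or a math prompt can be sent the same way; the server applies the model's own prompt template.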

Possible Applications of Llama Pro 8B Instruct

With 8B parameters and a 4k context length, Llama Pro 8B Instruct could be applied to complex NLP challenges, programming tasks, mathematical reasoning, and general language processing. Candidate applications include generating code snippets, solving mathematical problems, and assisting with language translation, though each requires further investigation to confirm its effectiveness. Its training on programming and math suggests value in educational or technical settings, but its limitations with long texts and highly specialized scenarios must be assessed carefully. Any deployment should be preceded by rigorous testing and validation against the specific requirements of the use case.

- code snippet generation

- mathematical problem solving

- language translation assistance

- educational and technical support
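The testing and validation recommended above can start with something as small as an exact-match check on questions with known answers. The harness below is a hypothetical sketch: `generate` is any callable that maps a prompt to model output (for example a wrapper around a local server), `fake_generate` is a stand-in so the code runs without a model, and comparing only the last number in the output is a deliberately simple scoring assumption.

```python
import re
from typing import Callable, List, Optional, Tuple


def last_number(text: str) -> Optional[str]:
    """Return the last integer or decimal that appears in the text, if any."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return matches[-1] if matches else None


def exact_match_rate(generate: Callable[[str], str], cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases where the last number in the model output equals the expected answer."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(1 for prompt, expected in cases if last_number(generate(prompt)) == expected)
    return hits / len(cases)


# Stand-in for a real model call (hypothetical); swap in your own client.
def fake_generate(prompt: str) -> str:
    return "The answer is 4."


cases = [("What is 2 + 2?", "4"), ("What is 3 * 3?", "9")]
print(exact_match_rate(fake_generate, cases))  # 0.5
```

Real evaluations would need many more cases and task-appropriate scoring (for example, running generated code against unit tests), but the structure stays the same.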

Quantized Versions & Hardware Requirements of Llama Pro 8B Instruct

The medium q4 version of Llama Pro 8B Instruct is listed as needing a GPU with roughly 12–24 GB of VRAM, with at least 16 GB recommended, which makes it suitable for systems with mid-range graphics cards. This quantization trades some precision for efficiency, and actual performance will depend on the hardware. Users should check their GPU's VRAM and system memory before deploying.

- Available quantizations: fp16, q2, q3, q4, q5, q6, q8
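To see roughly how much of those VRAM figures goes to the weights versus the attention cache, a back-of-the-envelope estimate helps. The sketch assumes 8.3 billion parameters (from the description), nominal bits per weight for each quantization level (real quantized files store extra scale data and are somewhat larger), and, for the KV cache, 40 layers with a 4,096-wide key/value projection stored in 16-bit precision. These architecture numbers are assumptions, and runtime overhead is ignored.

```python
PARAMS = 8.3e9  # parameter count quoted in the description

# Nominal bits per weight (assumption); actual formats add per-block scale data.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(quant: str, params: float = PARAMS) -> float:
    """Approximate weight memory in GB (1e9 bytes) for a quantization level."""
    return params * BITS_PER_WEIGHT[quant] / 8 / 1e9


def kv_cache_gb(n_ctx: int = 4096, n_layers: int = 40, kv_dim: int = 4096,
                bytes_per_value: int = 2) -> float:
    """Approximate KV-cache size: one key and one value vector per token per layer."""
    return 2 * n_layers * n_ctx * kv_dim * bytes_per_value / 1e9


for quant in BITS_PER_WEIGHT:
    print(f"{quant:>4}: ~{weight_gb(quant):.1f} GB weights + ~{kv_cache_gb():.1f} GB KV cache at 4k")
```

The estimate is a lower bound; the recommended VRAM also leaves headroom for activations, runtime buffers, and other processes sharing the GPU.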

Conclusion

Llama Pro 8B Instruct is a large language model with 8B parameters, developed by ARC Lab, Tencent PCG, for programming, mathematical reasoning, and general language processing. It is released under the Llama 2 Community License Agreement (LLAMA-2-CLA) and has a 4k context length, making it suitable for tasks that require domain-specific knowledge while balancing performance and resource efficiency.

References

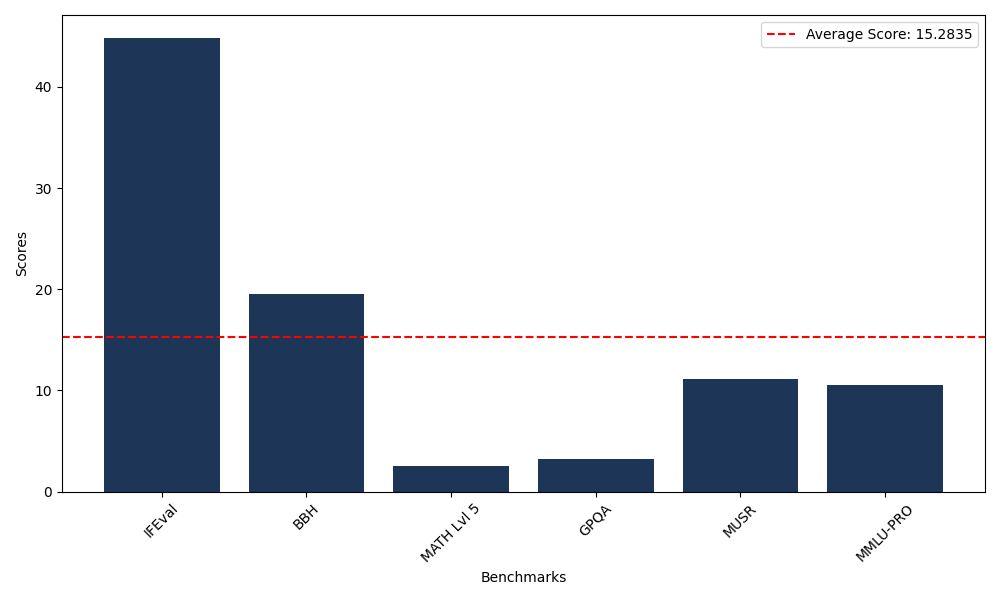

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 44.86 |
| BIG-Bench Hard (BBH) | 19.49 |
| MATH Level 5 (MATH Lvl 5) | 2.49 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.24 |
| Multistep Soft Reasoning (MuSR) | 11.11 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 10.51 |
