Llama3 70B - Model Details

Llama3 70B, developed by Meta, is a large language model with 70 billion (70B) parameters. It is released under the Meta Llama 3 Community License Agreement (META-LLAMA-3-CCLA) and is designed to improve performance and efficiency on industry benchmarks.

Description of Llama3 70B

Meta Llama 3 is a family of large language models developed by Meta, available as pretrained and instruction-tuned variants in 8B and 70B parameter sizes. All variants use an auto-regressive transformer architecture. The instruction-tuned models are optimized for dialogue use cases and are aligned with supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to improve helpfulness and safety. The models were trained on over 15 trillion tokens of publicly available data with a context length of 8k tokens, and they support commercial and research applications, including code generation and natural language tasks.
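To make "auto-regressive" concrete, here is a minimal toy sketch of the generation loop: each new token is predicted from all tokens produced so far and then appended to the sequence. The names `generate` and `toy_next_token` and the end-of-sequence id are hypothetical placeholders for illustration; they are not part of any Llama 3 API, and the toy predictor stands in for the real model.

```python
from typing import Callable, List


def generate(
    prompt: List[int],
    next_token: Callable[[List[int]], int],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    """Greedy auto-regressive loop: predict one token, append it, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # prediction conditioned on everything so far
        tokens.append(tok)
        if tok == eos_id:  # stop once the end-of-sequence token appears
            break
    return tokens


def toy_next_token(context: List[int]) -> int:
    """Hypothetical stand-in for a model: returns the last token plus one, modulo 5."""
    return (context[-1] + 1) % 5


if __name__ == "__main__":
    # Token id 4 plays the role of end-of-sequence in this toy setup.
    print(generate([1], toy_next_token, max_new_tokens=10, eos_id=4))
```

In a real deployment the `next_token` step would be a forward pass through the transformer followed by a sampling or argmax step; the loop structure is the part this sketch is meant to show.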

Parameters & Context Length of Llama3 70B

With 70B parameters, Llama3 70B falls into the very large model category: it is capable of advanced reasoning and multitasking on complex tasks, but it requires substantial computational infrastructure to deploy. Its 8k context length is moderate. It is enough for detailed interactions and longer documents, but it is a limit for very long sequences and is shorter than the context windows of some other models.

- Parameter Size: 70B

- Context Length: 8k
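As a rough illustration of what the 8k limit means in practice, the sketch below budgets tokens between the prompt and the generated output. It assumes that "8k" means 8192 tokens and that prompt and output share the same window; the function names are hypothetical, and token counts would come from the model's tokenizer, which is not shown here.

```python
from typing import List

CONTEXT_LENGTH = 8192  # assumption: "8k" read as 8 * 1024 tokens


def max_new_tokens_allowed(prompt_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Tokens left for generation once the prompt has been placed in the window."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_length - prompt_tokens, 0)


def truncate_to_fit(
    token_ids: List[int],
    reserve_for_output: int,
    context_length: int = CONTEXT_LENGTH,
) -> List[int]:
    """Keep only the most recent tokens so that reserve_for_output tokens still fit."""
    budget = context_length - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output must be smaller than context_length")
    return token_ids[-budget:]


if __name__ == "__main__":
    print(max_new_tokens_allowed(6000))                      # room left after a 6000-token prompt
    print(len(truncate_to_fit(list(range(10000)), 512)))     # an over-long input cut down to fit
```

Dropping the oldest tokens is only one possible policy; summarizing or chunking the input are alternatives when the beginning of the text matters.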

Possible Intended Uses of Llama3 70B

With 70B parameters and an 8k context length, Llama3 70B is a versatile model with a range of possible uses. Possible commercial uses include automation, content creation, and customer interaction. Possible research uses include exploring language patterns, improving model efficiency, and advancing natural language processing techniques. Assistant-like chat could support task automation or conversational interfaces, and natural language generation could cover drafting text, generating code, or creating summaries. In every case, suitability depends on the specific context and requirements, so each use should be evaluated and validated before deployment.

- commercial use

- research use

- assistant-like chat

- natural language generation tasks

Possible Applications of Llama3 70B

Llama3 70B may be suitable for applications such as commercial content creation or automation, research into language patterns or model efficiency, assistant-like chat for task automation or conversational interfaces, and natural language generation such as drafting text or generating code. Its effectiveness in these areas has not been established here, so each application must be thoroughly evaluated and tested before use.

- commercial use

- research use

- assistant-like chat

- natural language generation tasks

Quantized Versions & Hardware Requirements of Llama3 70B

The q4 quantized version of Llama3 70B requires multiple GPUs with at least 48GB of VRAM in total, along with 32GB or more of system memory. This configuration trades some precision for a smaller memory footprint and is suitable for medium-scale tasks. An adequate power supply and good GPU cooling are also important.

- fp16, q2, q3, q4, q5, q6, q8
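The relationship between quantization level and memory can be approximated with simple arithmetic: weight memory is roughly the parameter count times bits per weight, divided by 8. The sketch below applies this to the listed versions. It assumes the nominal bit widths implied by the names (for example 4 bits for q4), a round 70 billion parameters, and an illustrative 20% overhead for the KV cache and activations. Real quantized files use slightly more bits per weight, so treat these figures as lower bounds and not as measured requirements.

```python
PARAMS = 70e9  # assumption: a round 70 billion parameters

# Nominal bits per weight implied by each version name (an approximation).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(bits: float, params: float = PARAMS) -> float:
    """Approximate weight memory in GB (10^9 bytes): params * bits / 8."""
    return params * bits / 8 / 1e9


def fits(bits: float, vram_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Check whether weights plus an assumed runtime overhead fit in vram_gb."""
    return weight_gb(bits) * (1 + overhead_fraction) <= vram_gb


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gb(bits):.1f} GB weights, fits in 48 GB: {fits(bits, 48)}")
```

Under these assumptions the q4 weights alone come to about 35 GB, which is consistent with the 48GB total VRAM figure above once runtime overhead is included, while fp16 weights (about 140 GB) need considerably more hardware.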

Conclusion

Llama3 70B, developed by Meta, is a large language model with 70B parameters and an 8k context length, released under the Meta Llama 3 Community License Agreement. It uses an auto-regressive transformer architecture, with SFT and RLHF applied to the instruction-tuned variant, and was trained on over 15 trillion tokens for tasks such as code generation and natural language processing.

References

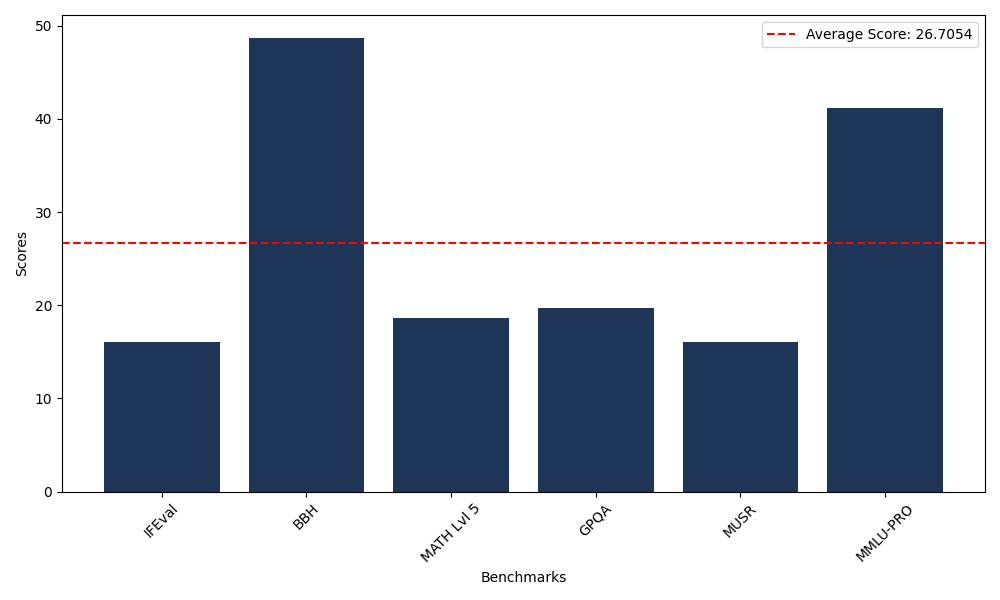

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 16.03 |
| BIG-Bench Hard (BBH) | 48.71 |
| MATH benchmark, Level 5 (MATH Lvl 5) | 18.58 |
| Graduate-Level Google-Proof Q&A (GPQA) | 19.69 |
| Multistep Soft Reasoning (MuSR) | 16.01 |
| Massive Multitask Language Understanding (MMLU-PRO) | 41.21 |
