Llama3 8B Instruct - Model Details

Llama3 8B Instruct is a large language model developed by Meta, with 8 billion parameters. It is released under the Meta Llama 3 Community License Agreement (META-LLAMA-3-CCLA), which permits use subject to the license's conditions. The model is tuned for instruction-following tasks and is positioned as improving performance and efficiency on common industry benchmarks.

Description of Llama3 8B Instruct

Meta Llama 3 is a family of large language models (LLMs) developed by Meta, available in 8B and 70B parameter sizes. The family includes pretrained variants and instruction-tuned variants, the latter optimized for dialogue use cases. The models use an optimized transformer architecture with Grouped-Query Attention (GQA), in which several query heads share each key/value head, for improved inference scalability. Llama 3 is trained on a mix of publicly available online data, with knowledge cutoffs of March 2023 for the 8B version and December 2023 for the 70B version. The instruction-tuned models are aligned for helpfulness and safety using supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF).
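The inference benefit of GQA can be illustrated with a rough key/value cache estimate. This is a minimal sketch, not Meta's implementation: the layer count, head counts, head dimension, 16-bit cache values, and the 8,192-token sequence length are assumptions not stated in the text above, and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed configuration for an 8B-class model (not taken from the text above).
LAYERS, QUERY_HEADS, KV_HEADS, HEAD_DIM = 32, 32, 8, 128
SEQ_LEN = 8192

# With GQA, only the key/value heads are cached.
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)
# Without GQA (standard multi-head attention), every query head has its own K/V head.
mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)

print(f"GQA cache: {gqa / 2**30:.1f} GiB, MHA cache: {mha / 2**30:.1f} GiB")
```

Under these assumptions the cache shrinks by the ratio of query heads to key/value heads, which is where the scalability gain comes from when serving long or many concurrent sequences.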

Parameters & Context Length of Llama3 8B Instruct

Llama3 8B Instruct has 8 billion parameters, placing it in the mid-scale category: capable enough for tasks of moderate complexity while remaining resource-efficient. Compared with larger models, it offers faster inference and lower computational demands, which suits applications where efficiency matters. Its 8k-token context length supports multi-turn interactions and moderate-length inputs, but inputs longer than the window must be truncated or split.

- Parameter Size: 8B

- Context Length: 8k tokens
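One practical consequence of a fixed context window is that long chat histories have to be trimmed before each request. The sketch below assumes "8k" means 8,192 tokens and uses a whitespace word count as a crude stand-in for a real tokenizer; `rough_token_count` and `trim_history` are hypothetical helper names, not part of any library.

```python
CONTEXT_LENGTH = 8192  # assumed meaning of the "8k" figure above


def rough_token_count(text):
    # Hypothetical stand-in: a real tokenizer will give different counts.
    return len(text.split())


def trim_history(messages, max_new_tokens, context_length=CONTEXT_LENGTH,
                 count=rough_token_count):
    """Drop the oldest messages until prompt plus reply budget fits the window."""
    budget = context_length - max_new_tokens
    kept = []
    used = 0
    # Walk from newest to oldest so the most recent turns survive.
    for message in reversed(messages):
        cost = count(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()
    return kept
```

In a real application the `count` argument would be replaced with the model's own tokenizer, since word counts typically underestimate token counts.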

Possible Intended Uses of Llama3 8B Instruct

Llama3 8B Instruct is intended for commercial and research use, where its efficiency may support tasks such as data analysis or content creation. Its dialogue optimization makes it a candidate for assistant-like chat applications and other interactive systems. It may also suit natural language generation tasks such as text summarization or creative writing. In each case, suitability should be confirmed by testing against the specific requirements of the application.

- commercial and research use

- assistant-like chat applications

- natural language generation tasks
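For assistant-like chat use, the instruction-tuned model expects conversations to be wrapped in special header and end-of-turn tokens. The sketch below follows the prompt layout Meta has published for Llama 3 Instruct as best understood here; `format_llama3_prompt` is a hypothetical helper, and most inference runtimes apply an equivalent template automatically, so the exact string should be checked against the runtime in use.

```python
def format_llama3_prompt(messages):
    """Render a list of {"role", "content"} dicts as a single prompt string."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn: a role header, a blank line, the content, then an end-of-turn token.
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # Open an assistant turn so the model continues with its reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


prompt = format_llama3_prompt([
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Summarize the Llama 3 license in one sentence."},
])
print(prompt)
```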

Possible Applications of Llama3 8B Instruct

In practice, Llama3 8B Instruct could be applied to commercial and research work such as data analysis or content creation, to assistant-like chat applications that benefit from its dialogue optimization, and to natural language generation tasks such as creative writing or text summarization. Uses in other domains may also emerge, but each should be evaluated thoroughly against its specific needs before deployment.

- commercial and research use

- assistant-like chat applications

- natural language generation tasks

Quantized Versions & Hardware Requirements of Llama3 8B Instruct

With the medium q4 quantization, Llama3 8B Instruct is listed as needing a GPU with at least 16GB of VRAM and at least 32GB of system memory to run efficiently, which puts it within reach of moderately equipped machines. This version trades some precision for lower memory use and faster inference; users should verify compatibility with their own setup.

- Available precisions and quantizations: fp16, q2, q3, q4, q5, q6, q8
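A back-of-the-envelope estimate of weight memory for each listed version is parameters times bits per weight, divided by 8. The sketch below assumes each label maps to a nominal bit width (for example, q4 as 4 bits); real quantized files also store scaling factors and metadata, and inference needs additional memory for activations and the key/value cache, so actual requirements are higher than these figures.

```python
PARAMS = 8_000_000_000  # "8 billion parameters" from the text above

# Assumed nominal bits per weight for each label (an approximation).
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gigabytes(params, bits):
    """Approximate weight storage in GB (10^9 bytes), ignoring format overhead."""
    return params * bits / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_gigabytes(PARAMS, bits):.1f} GB of weights")
```

These numbers are a lower bound on memory use, which is one reason hardware recommendations leave headroom above the raw weight size.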

Conclusion

Llama3 8B Instruct is a large language model developed by Meta, with 8 billion parameters and an 8k-token context length, released under the Meta Llama 3 Community License Agreement (META-LLAMA-3-CCLA). It is tuned for instruction following and efficient inference, making it a candidate for commercial and research use, assistant-like chat applications, and natural language generation tasks.

References

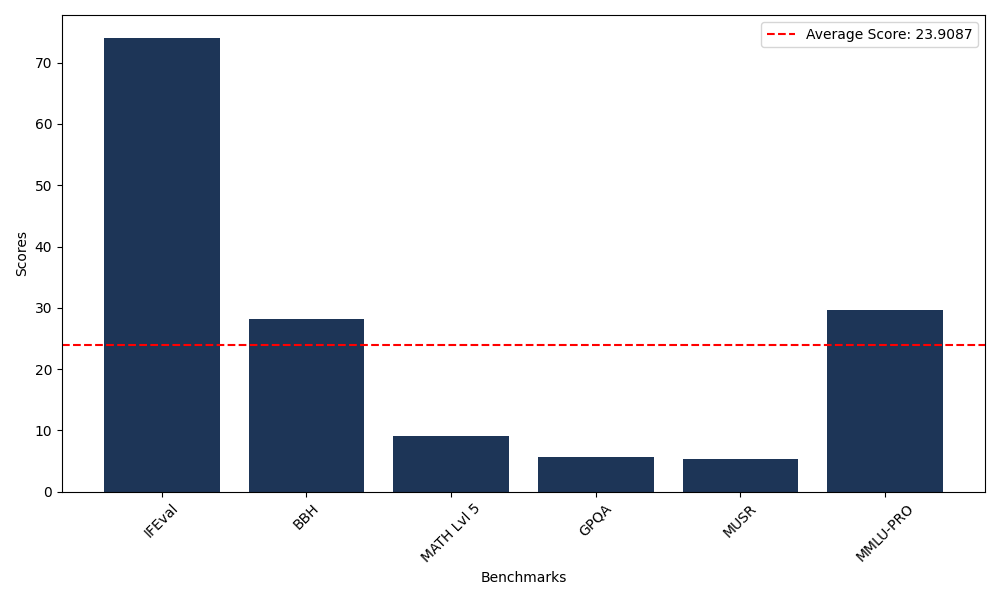

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 74.08 |
| BIG-Bench Hard (BBH) | 28.24 |
| MATH Level 5 (MATH Lvl 5) | 8.69 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.23 |
| Multistep Soft Reasoning (MuSR) | 1.60 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 29.60 |
| Instruction-Following Evaluation (IFEval) | 47.82 |
| BIG-Bench Hard (BBH) | 26.80 |
| MATH Level 5 (MATH Lvl 5) | 9.14 |
| Graduate-Level Google-Proof Q&A (GPQA) | 5.70 |
| Multistep Soft Reasoning (MuSR) | 5.40 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 28.79 |
