Llama3.2 1B

Llama3.2 1B is a large language model developed by Meta with 1B parameters. It is released under the Llama 3.2 Community License Agreement (LLAMA-32-COMMUNITY) and is subject to the Llama 3.2 Acceptable Use Policy (Llama-32-AUP). The model is designed for multilingual dialogue, with capabilities in agentic retrieval and summarization.

Description of Llama3.2 1B

Llama 3.2 is a collection of multilingual large language models (LLMs) in 1B and 3B parameter sizes, designed for multilingual dialogue, agentic retrieval, and summarization tasks. According to Meta, the models outperform many open-source and closed chat models on common industry benchmarks. They were trained on up to 9 trillion tokens of publicly available data and have a knowledge cutoff of December 2023.

Parameters & Context Length of Llama3.2 1B

With 1B parameters, Llama3.2 1B falls in the small model category, so it runs quickly and efficiently on tasks of moderate complexity. Its 128k-token context window is very long. It lets the model process extensive texts, but filling that window demands substantial memory and compute, largely because the attention key/value cache grows with every token held in context. Together, these properties make the model suitable for multilingual dialogue and summarization while balancing efficiency and capability.

- Parameter Size: 1B

- Context Length: 128k
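The cost of a long context can be estimated with simple arithmetic. Every token kept in context stores one key vector and one value vector per layer. The sketch below assumes architecture values of 16 layers, 8 key/value heads, and a head dimension of 64. We believe these match the published Llama 3.2 1B configuration, but they are not stated in this document, so treat them as placeholders to check against the model's `config.json`. The function name `kv_cache_bytes` is hypothetical.

```python
# Rough KV-cache size estimate for one sequence in a decoder-only transformer.
# ASSUMPTION: the architecture values below are believed (not verified here) to
# match the Llama 3.2 1B config; check them against the model's config.json.
NUM_LAYERS = 16      # assumed number of transformer layers
NUM_KV_HEADS = 8     # assumed key/value heads (grouped-query attention)
HEAD_DIM = 64        # assumed per-head dimension
BYTES_PER_VALUE = 2  # fp16/bf16 cache entries


def kv_cache_bytes(num_tokens: int,
                   num_layers: int = NUM_LAYERS,
                   num_kv_heads: int = NUM_KV_HEADS,
                   head_dim: int = HEAD_DIM,
                   bytes_per_value: int = BYTES_PER_VALUE) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens of one sequence."""
    # Factor 2: one key tensor and one value tensor per layer.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return num_tokens * per_token


if __name__ == "__main__":
    # 128k context = 131,072 tokens.
    for tokens in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>7} tokens -> {gib:.2f} GiB of KV cache")
```

Under those assumed values, each token costs 32 KiB of fp16 cache. A single sequence that fills the 128k window (131,072 tokens) therefore needs about 4 GiB for keys and values alone, on top of the model weights.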

Possible Intended Uses of Llama3.2 1B

Llama3.2 1B is a multilingual large language model intended for assistant-like chat applications, knowledge retrieval and summarization, and mobile AI-powered writing assistants. It supports English, Italian, French, Portuguese, Thai, Hindi, German, and Spanish, which makes it a candidate for cross-lingual tasks. Related uses such as conversational interfaces, content analysis, information extraction, text generation, or creative writing support may also be feasible. Each of these needs investigation to confirm that it fits the specific requirements, because limitations can surface depending on the context.

- assistant-like chat applications

- knowledge retrieval and summarization

- mobile AI-powered writing assistants
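For assistant-like chat, the conversation must be serialized into the prompt format that the instruction-tuned model was trained on. The sketch below assumes the Llama 3 family's special-token layout (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) as we understand it, and it covers only system, user, and assistant turns. The helper names `header` and `build_prompt` are hypothetical. A real deployment should rely on the chat template shipped with the model's tokenizer, which may also insert default system text.

```python
# Sketch of a Llama 3-style chat prompt layout.
# ASSUMPTION: token strings reflect our understanding of the Llama 3 format;
# in practice, let the model tokenizer's chat template build this string.
from typing import Dict, List

BEGIN = "<|begin_of_text|>"
EOT = "<|eot_id|>"


def header(role: str) -> str:
    """Wrap a role name in the header special tokens."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_prompt(messages: List[Dict[str, str]]) -> str:
    """Render {'role', 'content'} messages into a single prompt string.

    The result ends with an open assistant header, so the model writes the reply next.
    """
    parts = [BEGIN]
    for msg in messages:
        if msg["role"] not in ("system", "user", "assistant"):
            raise ValueError(f"unsupported role: {msg['role']!r}")
        # Each turn: role header, trimmed content, end-of-turn token.
        parts.append(header(msg["role"]) + msg["content"].strip() + EOT)
    parts.append(header("assistant"))
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_prompt([
        {"role": "system", "content": "You are a concise multilingual assistant."},
        {"role": "user", "content": "Résume ce paragraphe en une phrase."},
    ])
    print(prompt)
```

The prompt ends with an open assistant header rather than a completed turn. This leaves the model positioned to generate the assistant's reply, and generation stops when the model emits its own end-of-turn token.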

Possible Applications of Llama3.2 1B

Beyond its intended uses, the model's support for English, Italian, French, Portuguese, Thai, Hindi, German, and Spanish suggests applications in cross-lingual dialogue systems and multilingual content processing. Conversational interfaces, dialogue management, information extraction, content analysis, text generation, and creative writing assistance are further candidates, but their suitability depends on the implementation and the target context. Each application must be thoroughly evaluated and tested before deployment.

- assistant-like chat applications

- knowledge retrieval and summarization

- mobile AI-powered writing assistants

Quantized Versions & Hardware Requirements of Llama3.2 1B

With q4 quantization, Llama3.2 1B offers a balanced trade-off between precision and performance. The recommended hardware is a GPU with at least 8GB VRAM, which puts the model within reach of mid-range graphics cards. Actual requirements vary with context length, batch size, and the inference runtime. The model is available in fp16 and in q2, q3, q4, q5, q6, and q8 quantized versions.
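The weight footprint of each listed version follows directly from the number of bits per weight. This sketch assumes exactly 10^9 parameters (the nominal 1B; the actual count differs somewhat) and nominal bit widths. Real quantization formats add per-block scale data, and the KV cache and runtime buffers require memory on top of the weights. The names `BITS_PER_WEIGHT` and `weight_gb` are illustrative.

```python
# Back-of-the-envelope weight memory for each listed precision.
# ASSUMPTIONS: exactly 1e9 parameters (nominal "1B") and nominal bits per weight;
# quantization metadata, KV cache, and runtime buffers are ignored.
NOMINAL_PARAMS = 1_000_000_000

BITS_PER_WEIGHT = {
    "fp16": 16,
    "q8": 8,
    "q6": 6,
    "q5": 5,
    "q4": 4,
    "q3": 3,
    "q2": 2,
}


def weight_gb(bits: int, params: int = NOMINAL_PARAMS) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name:>4}: ~{weight_gb(bits):.2f} GB of weights")
```

Under these assumptions, fp16 weights occupy about 2 GB and q4 weights about 0.5 GB. An 8GB card therefore leaves ample headroom for the KV cache and runtime overhead; the weights alone do not account for that figure.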

Conclusion

Llama3.2 1B is a multilingual large language model optimized for dialogue, agentic retrieval, and summarization, with 1B parameters and a 128k-token context length. It is released under the Llama 3.2 Community License Agreement and is subject to the Llama 3.2 Acceptable Use Policy. It was trained on up to 9 trillion tokens of publicly available data and has a knowledge cutoff of December 2023.

References

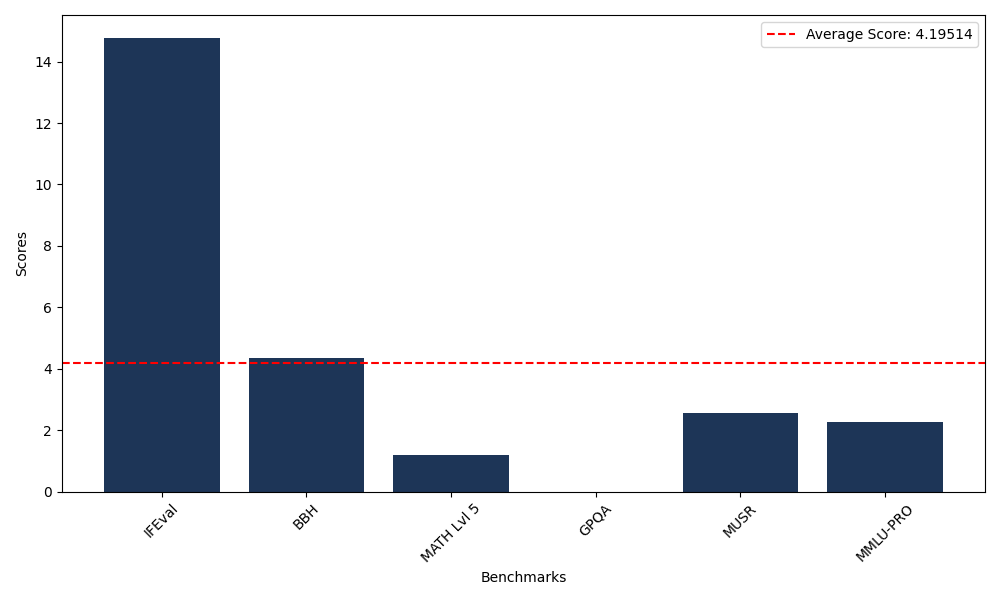

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 14.78 |
| Big Bench Hard (BBH) | 4.37 |
| Competition Mathematics, Level 5 (MATH Lvl 5) | 1.21 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.00 |
| Multistep Soft Reasoning (MuSR) | 2.56 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 2.26 |