Llama3.2 1B Instruct - Model Details

Llama3.2 1B Instruct is a large language model developed by Meta Llama Enterprise with 1B parameters. It is designed for multilingual dialogue use cases, including agentic retrieval and summarization. The model is available under the Llama 3.2 Acceptable Use Policy (Llama-32-AUP) and the Llama 3.2 Community License Agreement (LLAMA-32-COMMUNITY).

Description of Llama3.2 1B Instruct

The Llama 3.2 collection of multilingual large language models (LLMs) includes pretrained and instruction-tuned generative models in 1B and 3B sizes. Optimized for multilingual dialogue use cases, including agentic retrieval and summarization tasks, these models outperform many open-source and closed chat models on common industry benchmarks. Developed by Meta, they use an optimized transformer architecture, and the instruction-tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align with human preferences.

Parameters & Context Length of Llama3.2 1B Instruct

The Llama3.2 1B Instruct model has 1B parameters, which places it among small-scale open-source LLMs; models of this size are typically fast and resource-efficient but best suited to simpler tasks. Its 128k-token context window is very long: it lets the model process extensive text sequences, but memory use grows with the number of tokens held in context, so filling the whole window demands substantial resources. This combination suits the model to multilingual dialogue tasks where both efficiency and long-context understanding matter.

- Parameter size: 1b

- Context length: 128k
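For inference, much of the memory cost of a long context comes from the key/value (KV) cache, which grows linearly with the number of tokens held in context. The sketch below estimates that cache under stated assumptions: 16 layers, 8 key/value heads (grouped-query attention), a head dimension of 64, and fp16 cache entries. These values are taken from the publicly released Llama 3.2 1B configuration and should be checked against the config file actually deployed; `kv_cache_bytes` is a hypothetical helper name.

```python
# Rough KV-cache size estimate for a decoder-only transformer with
# grouped-query attention (GQA). The config values below are ASSUMPTIONS
# based on the public Llama 3.2 1B config; verify them before relying on this.

NUM_LAYERS = 16       # assumed number of transformer blocks
NUM_KV_HEADS = 8      # assumed number of key/value heads (GQA)
HEAD_DIM = 64         # assumed: 2048 hidden size / 32 query heads
BYTES_PER_VALUE = 2   # fp16/bf16 cache entries


def kv_cache_bytes(num_tokens: int,
                   layers: int = NUM_LAYERS,
                   kv_heads: int = NUM_KV_HEADS,
                   head_dim: int = HEAD_DIM,
                   bytes_per_value: int = BYTES_PER_VALUE) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens."""
    # Factor 2: one key tensor and one value tensor in every layer.
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value
    return per_token * num_tokens


if __name__ == "__main__":
    for ctx in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(ctx) / 2**30
        print(f"{ctx:>7} tokens -> {gib:.2f} GiB of KV cache")
```

Under these assumptions each token costs 32 KiB of cache, so a full 131,072-token (128k) window needs about 4 GiB on top of the model weights; runtimes that quantize the KV cache would need less.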

Possible Intended Uses of Llama3.2 1B Instruct

The Llama3.2 1B Instruct model may be useful wherever multilingual support and efficient processing are needed. Its 1B parameter size and 128k context length suit it to assistant-like chat, where it could respond conversationally in multiple languages. It might also serve agentic applications such as knowledge retrieval and summarization, analyzing and condensing long texts. Its compact size and multilingual capabilities could support mobile AI-powered writing assistants for drafting or editing content, and it could rewrite queries and prompts to help users get better results. In on-device use cases with limited compute, it might power lightweight tools for translation or text generation. Each of these uses needs thorough evaluation against specific requirements and constraints.

- assistant-like chat

- agentic applications like knowledge retrieval and summarization

- mobile AI-powered writing assistants

- query and prompt rewriting

- on-device use-cases with limited compute resources
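For assistant-like chat and query rewriting, requests reach the instruct model as a sequence of role-tagged messages. The following is a simplified sketch of how such messages can be rendered into the header-based chat format used by the Llama 3 family; `build_llama3_prompt` is a hypothetical helper. The official Llama 3.2 template may insert additional system text (such as knowledge-cutoff and date lines), so in practice the tokenizer's own chat template (for example `apply_chat_template` in Hugging Face Transformers) is the safer choice.

```python
# Simplified sketch of the Llama 3-style chat format (ASSUMPTION: the
# official Llama 3.2 template may add extra system text not shown here).

from typing import Dict, List


def build_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages and open an assistant turn."""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: role header, blank line, trimmed content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Leave the assistant header open so generation produces the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


# Example: a query-rewriting request.
messages = [
    {"role": "system",
     "content": "Rewrite the user's search query to be specific and concise."},
    {"role": "user", "content": "cheap flights europe summer"},
]
prompt = build_llama3_prompt(messages)
print(prompt)
```

The rewriting instruction lives in the system message, and the prompt ends with an open assistant header so that the model's output begins directly with the rewritten query.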

Possible Applications of Llama3.2 1B Instruct

The Llama3.2 1B Instruct model may find applications where multilingual support and efficient processing are key. In assistant-like chat systems, its handling of diverse languages could improve user interactions. In agentic applications such as knowledge retrieval and summarization, it could help with tasks that require concise information extraction. Its compact size could make it a fit for mobile AI-powered writing assistants used for on-the-go content creation, and it might support tools that rewrite queries and prompts to refine user input. These applications require careful evaluation to ensure they meet specific needs and constraints.

- assistant-like chat

- agentic applications like knowledge retrieval and summarization

- mobile AI-powered writing assistants

- query and prompt rewriting

Quantized Versions & Hardware Requirements of Llama3.2 1B Instruct

The medium q4 quantized version of Llama3.2 1B Instruct requires 4GB–8GB of VRAM, so it runs on GPUs with at least 8GB of VRAM. This quantization balances precision and performance, allowing efficient execution on moderate hardware. System memory should be at least 32GB, and adequate cooling is recommended for sustained workloads. Other considerations include a multi-core CPU and a power supply that can support the GPU.

- fp16, q2, q3, q4, q5, q6, q8
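The quantization levels listed above trade precision for memory. A rough, nominal estimate of weight storage is parameters × bits per weight ÷ 8. The sketch below assumes about 1.23 billion parameters and exactly N bits per weight for each `qN` level; real quantized formats also store per-block scaling factors, so actual file sizes and VRAM use are somewhat higher, and the KV cache and runtime overhead come on top. `weight_gb` is a hypothetical helper.

```python
# Nominal weight-memory estimate per quantization level.
# ASSUMPTIONS: ~1.23e9 parameters and exactly N bits per weight for "qN".
# Real formats add per-block scales, so true sizes are somewhat larger.

PARAMS = 1.23e9  # assumed parameter count

BITS_PER_WEIGHT = {
    "fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2,
}


def weight_gb(level: str, params: float = PARAMS) -> float:
    """Approximate weight storage in GB (10**9 bytes) for a quant level."""
    return params * BITS_PER_WEIGHT[level] / 8 / 1e9


if __name__ == "__main__":
    for level in BITS_PER_WEIGHT:
        print(f"{level:>4}: ~{weight_gb(level):.2f} GB")
```

By this estimate the q4 weights take roughly 0.6 GB, so most of a 4GB–8GB VRAM budget would remain available for the KV cache, runtime overhead, and headroom.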

Conclusion

The Llama3.2 1B Instruct model, developed by Meta Llama Enterprise, has 1B parameters and is optimized for multilingual dialogue use cases such as agentic retrieval and summarization. It supports a 128k context length and is available under the Llama 3.2 Acceptable Use Policy and the Llama 3.2 Community License Agreement, making it suitable for a range of applications that require efficient, flexible language processing.

References

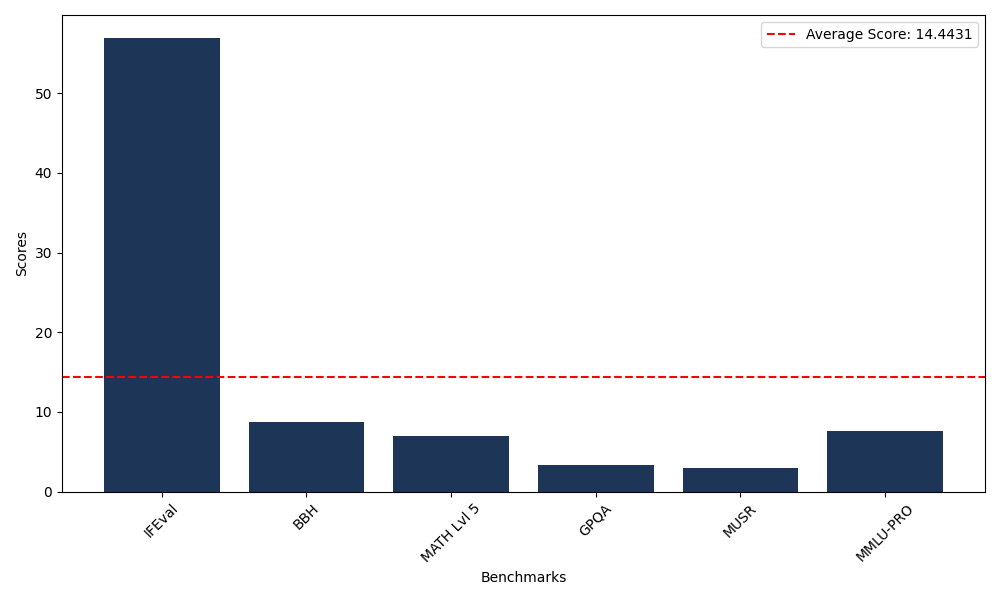

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 56.98 |
| Big-Bench Hard (BBH) | 8.74 |
| MATH dataset, Level 5 problems (MATH Lvl 5) | 7.02 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.36 |
| Multistep Soft Reasoning (MuSR) | 2.97 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 7.58 |
