Llama3.2 3B Instruct - Model Details

Llama3.2 3B Instruct is a 3B-parameter large language model developed by Meta Llama Enterprise, designed for multilingual dialogue use cases involving agentic retrieval and summarization. It is released under the Llama 3.2 Acceptable Use Policy (Llama-32-AUP) and the Llama 3.2 Community License Agreement (LLAMA-32-COMMUNITY), which permit a range of applications subject to specific usage guidelines.

Description of Llama3.2 3B Instruct

Llama 3.2 is a collection of multilingual large language models (LLMs) with 1B and 3B parameter sizes, optimized for multilingual dialogue, agentic retrieval, and summarization tasks. It employs an optimized transformer architecture enhanced through supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF). Trained on up to 9 trillion tokens of publicly available data with a knowledge cutoff in December 2023, the model supports text and code generation. It includes quantized versions for efficient on-device use, making it versatile for diverse applications while maintaining performance across multiple languages and tasks.

Parameters & Context Length of Llama3.2 3B Instruct

Llama3.2 3B Instruct has 3B parameters, which places it in the small model category: it runs fast and with modest resources, which suits simple tasks and on-device applications. Its 128k context length falls into the very long context category, so it can handle extensive texts, although filling that context requires significant computational resources. This combination makes it suitable for multilingual dialogue and summarization while balancing efficiency and capability.

- Name: Llama3.2 3B Instruct

- Parameter Size: 3B

- Context Length: 128k

- Implications: Small parameter size for efficiency, very long context for complex text handling.
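To make the cost of a very long context concrete, the sketch below estimates the size of the key/value cache as the number of cached tokens grows. The architecture values (28 layers, 8 key/value heads, head dimension 128) and the 16-bit cache precision are assumptions that do not come from this page, so check them against the model's published configuration before relying on the numbers. Reading "128k" as 131,072 tokens is likewise an assumption.

```python
# Rough KV-cache size estimate for a decoder-only transformer.
# All architecture defaults below are ASSUMPTIONS, not values stated on this page.

def kv_cache_bytes(tokens, layers=28, kv_heads=8, head_dim=128, bytes_per_value=2):
    """Bytes needed to cache keys and values for `tokens` tokens.

    The leading factor 2 counts one key tensor and one value tensor
    per layer; bytes_per_value=2 corresponds to a 16-bit cache.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


CONTEXT_TOKENS = 128 * 1024  # "128k" read as 131,072 tokens (assumption)

for tokens in (4096, 32768, CONTEXT_TOKENS):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.2f} GiB of KV cache")
```

Under these assumptions a fully used context needs about 14 GiB for the cache alone, on top of the model weights, which is why long-context use is far more demanding than short chat turns.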

Possible Intended Uses of Llama3.2 3B Instruct

Llama3.2 3B Instruct is a multilingual model designed for commercial and research applications in English, Italian, French, Portuguese, Thai, Hindi, German, and Spanish. Its 3B parameter size and 128k context length make it a candidate for assistant-like chat systems, agentic applications such as knowledge retrieval and summarization, and mobile AI-powered writing assistants. It could also be used for query rewriting in multilingual environments. These uses are still being explored, so thorough testing against the specific requirements of each deployment is necessary before relying on the model in real-world scenarios.

- Name: Llama3.2 3B Instruct

- Purpose: Multilingual dialogue, agentic retrieval, summarization, and writing assistance

- Supported Languages: English, Italian, French, Portuguese, Thai, Hindi, German, Spanish

- Possible Uses: Commercial and research applications, assistant-like chat, agentic tasks, mobile writing tools, query rewriting

Possible Applications of Llama3.2 3B Instruct

With 3B parameters and a 128k context length, Llama3.2 3B Instruct is a candidate for applications such as multilingual customer support systems, content creation tools, educational resources, and data summarization services. Its handling of dialogue and retrieval tasks suggests it could also fit agentic workflows or mobile writing assistants, and its multilingual support opens up cross-cultural communication tools and research-driven projects. None of these applications is established yet: each must be evaluated and tested in its specific context before deployment to confirm that the model meets the intended goals.

- Name: Llama3.2 3B Instruct

- Possible Applications: Multilingual customer support, content creation, educational resources, data summarization, agentic workflows, mobile writing assistants, cross-cultural communication tools

Quantized Versions & Hardware Requirements of Llama3.2 3B Instruct

Llama3.2 3B Instruct with q4 quantization is listed as needing a GPU with at least 12GB of VRAM, with 8GB–16GB of VRAM cited as the range for optimal performance, which makes it an option for systems with moderate hardware capabilities. A multi-core CPU and at least 32GB of RAM are also recommended. These figures are general guidelines, so users should check them against their own hardware and test each application to confirm suitability.

- Name: Llama3.2 3B Instruct

- Quantized Versions: fp16, q2, q3, q4, q5, q6, q8
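As a rough cross-check on hardware sizing, the sketch below estimates the weight storage for each listed quantization level as parameters times bits per weight. It assumes a nominal 3 billion parameters and nominal bit widths (for example, 4 bits for q4). Real quantized files usually store extra scaling data, so they tend to be somewhat larger, and runtime memory also includes the KV cache and activations, which this estimate ignores.

```python
# Weight-only memory estimate per quantization level.
# PARAMS and the bit widths are nominal ASSUMPTIONS for illustration.

PARAMS = 3_000_000_000  # nominal "3B" parameter count

# Nominal bits per weight for each quantized version listed above.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(params, bits):
    """Approximate weight storage in decimal gigabytes."""
    return params * bits / 8 / 1e9


estimates = {name: weight_gb(PARAMS, bits) for name, bits in NOMINAL_BITS.items()}

for name, gb in estimates.items():
    print(f"{name:>4}: ~{gb:.2f} GB of weights")
```

Under these assumptions the weights take about 6 GB at fp16 and about 1.5 GB at q4, so the remaining memory in any hardware budget goes to the context cache and runtime overhead rather than to the weights themselves.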

Conclusion

Llama3.2 3B Instruct is a multilingual large language model with 3B parameters and a 128k context length, designed for dialogue, retrieval, and summarization across multiple languages. Its flexibility and efficiency make it a candidate for commercial and research applications, though thorough evaluation is necessary for each specific use case.

References

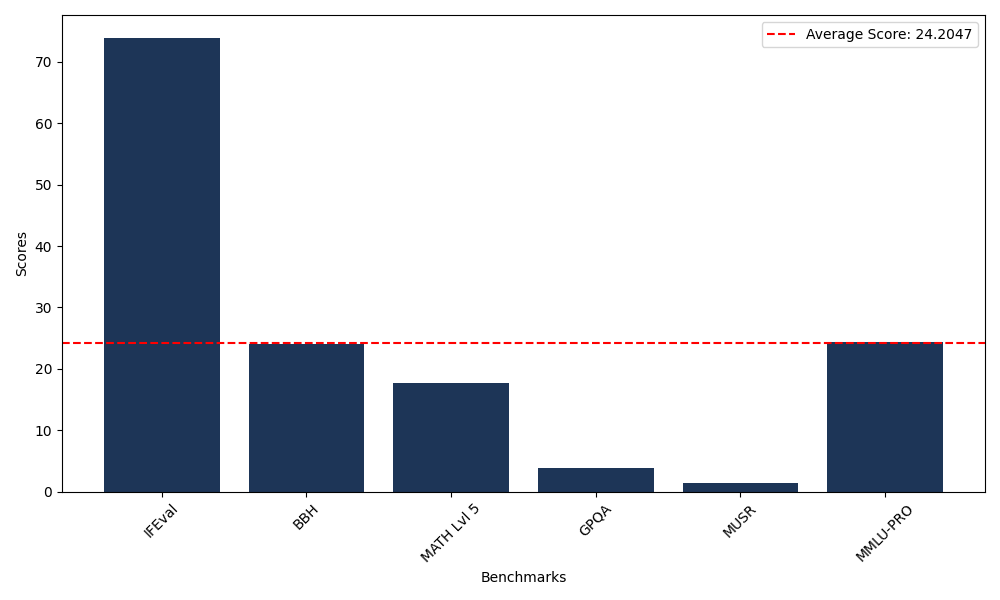

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 73.93 |
| Big Bench Hard (BBH) | 24.06 |
| Mathematical Reasoning Test (MATH Lvl 5) | 17.67 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.80 |
| Multistep Soft Reasoning (MuSR) | 1.37 |
| Massive Multitask Language Understanding Pro (MMLU-PRO) | 24.39 |
