Llama3.3 70B Instruct - Model Details

Llama3.3 70B Instruct is a 70B-parameter large language model developed by Meta Llama Enterprise and designed for multilingual dialogue. It is licensed under the Llama 3.3 Acceptable Use Policy (Llama-33-AUP) and the Llama 3.3 Community License Agreement (LLAMA-33-CCLA). These permit a wide range of applications, subject to specific usage guidelines. As an instruct model, it is tuned for interactive, context-aware responses across multiple languages.

Description of Llama3.3 70B Instruct

Llama3.3 70B Instruct is an instruction-tuned generative model with 70B parameters. It is designed for multilingual dialogue and is reported to outperform many open-source and closed chat models on common industry benchmarks. It was trained on 15 trillion tokens of publicly available data with a data cutoff of December 2023, and it supports multilingual text and code generation. Safety fine-tuning aligns it with human preferences for helpfulness and safety, which suits it to interactive, context-aware applications across multiple languages. It is maintained by Meta Llama Enterprise under the Llama 3.3 Acceptable Use Policy (Llama-33-AUP) and the Llama 3.3 Community License Agreement (LLAMA-33-CCLA).
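The model is instruction-tuned for dialogue, so its input is a sequence of role-tagged turns rather than raw text. The following sketch renders such a conversation in the special-token layout used by Llama 3-family instruct models (`<|begin_of_text|>`, `<|start_header_id|>` / `<|end_header_id|>`, `<|eot_id|>`). It is a simplified illustration under that assumption. The helper name `format_llama3_chat` is hypothetical, and the official chat template shipped with the tokenizer may add extra system text, so prefer that template in real deployments.

```python
# Sketch: render a conversation in the Llama 3-family chat token layout.
# Assumption: simplified version of the published format; the official
# tokenizer chat template may insert additional system-prompt lines.


def format_llama3_chat(messages: list[dict[str, str]]) -> str:
    """Render [{"role": ..., "content": ...}, ...] as one prompt string (hypothetical helper)."""
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        role = msg["role"]
        if role not in ("system", "user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        # Each turn: role header, a blank line, trimmed content, end-of-turn token.
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n")
        parts.append(msg["content"].strip() + "<|eot_id|>")
    # Leave an open assistant header so the model writes the next reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = format_llama3_chat([
        {"role": "system", "content": "You are a helpful assistant. Reply in Italian."},
        {"role": "user", "content": "What is the capital of France?"},
    ])
    print(prompt)
```

Ending the prompt on an open assistant header is what cues the model to generate its turn. Generation then stops when it emits `<|eot_id|>`.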

Parameters & Context Length of Llama3.3 70B Instruct

The Llama3.3 70B Instruct model has 70B parameters, which places it in the very large models category. It delivers strong performance on complex tasks but demands significant computational resources. Its 128k-token context length falls in the very long contexts category, so it can process and generate extended texts, at the cost of substantial memory and processing power. These specifications suit the model to intricate multilingual dialogue and long-form content creation, but efficient deployment requires optimized infrastructure.

- Parameter Size: 70B (Very Large Models: 70B+)

- Context Length: 128k (Very Long Contexts: 128K+)
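The following sketch puts a number on the memory cost of a long context. It estimates the key/value cache that a transformer keeps per sequence, computed per token as 2 (keys and values) × layers × KV heads × head dimension × bytes per value. The architecture figures are assumptions taken from the publicly released Llama 3.x 70B configuration rather than from this page: 80 layers, 8 KV heads (grouped-query attention), and head dimension 128. An fp16 cache is also assumed.

```python
# Back-of-the-envelope KV-cache size for a single sequence.
# Assumed architecture values (public Llama 3.x 70B config, not from this page):
# 80 layers, 8 key/value heads (grouped-query attention), head dimension 128.


def kv_cache_bytes(tokens: int, layers: int = 80, kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions (fp16 by default)."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = key + value
    return tokens * per_token


if __name__ == "__main__":
    GIB = 1024 ** 3
    for ctx in (8_192, 32_768, 131_072):
        print(f"{ctx:>7} tokens: {kv_cache_bytes(ctx) / GIB:5.1f} GiB")
```

Under these assumptions the cache costs 320 KiB per token. A full 131,072-token (128k) window therefore needs about 40 GiB per sequence on top of the model weights, and shorter contexts or a quantized cache shrink this proportionally.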

Possible Intended Uses of Llama3.3 70B Instruct

The Llama3.3 70B Instruct model has possible applications in commercial settings, such as content creation or customer interaction, and in research, where it could aid language analysis or experimental workflows. Its multilingual capabilities make it a candidate for assistant-like chat systems in Italian, Spanish, French, Portuguese, English, Thai, Hindi, and German. It could also serve natural language generation tasks such as drafting text or generating code. Any of these uses would require testing to confirm that the model meets specific goals. Its design suggests it could handle complex dialogue scenarios, but further evaluation is needed to confirm its effectiveness in real-world settings.

- commercial use

- research use

- assistant-like chat

- natural language generation tasks
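The following sketch shows how the assistant-like chat use might look in practice. It keeps a running message history and sends it to a locally served copy of the model. It assumes an Ollama server listening on `localhost:11434` with the model pulled under the tag `llama3.3`. Both are deployment assumptions, not details from this page, and the helper names `build_chat_request` and `send_chat` are hypothetical.

```python
# Sketch: multi-turn assistant-style chat against a locally served model.
# Assumptions: an Ollama server on localhost:11434 and the model tag "llama3.3".
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumed local endpoint


def build_chat_request(history: list[dict[str, str]], user_text: str,
                       model: str = "llama3.3") -> dict:
    """Return a non-streaming request body; `history` itself is not modified."""
    messages = history + [{"role": "user", "content": user_text}]
    return {"model": model, "messages": messages, "stream": False}


def send_chat(payload: dict, url: str = OLLAMA_CHAT_URL) -> str:
    """POST the request and return the assistant's reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["message"]["content"]


if __name__ == "__main__":
    history = [{"role": "system", "content": "Answer concisely in Spanish."}]
    payload = build_chat_request(history, "What is a context window?")
    try:
        reply = send_chat(payload)
    except OSError as exc:  # urllib.error.URLError subclasses OSError
        print(f"Could not reach the server: {exc}")
    else:
        # Keep both turns so the next request carries the whole conversation.
        history += [payload["messages"][-1], {"role": "assistant", "content": reply}]
        print(reply)
```

The endpoint keeps no conversation state, so each request resends the full history. Trimming or summarizing old turns keeps long conversations within the 128k context window.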

Quantized Versions & Hardware Requirements of Llama3.3 70B Instruct

The medium q4 version of Llama3.3 70B Instruct still requires significant hardware. It needs at least 32GB of VRAM for efficient operation, and up to 64GB or more for optimal performance. This version balances precision and speed and can run on high-end data-center GPUs such as the A100. A single 24GB consumer card such as the RTX 4090 falls below the 32GB figure, however, and would need a second GPU or partial offloading to system RAM, so check your graphics card's memory before deploying. System memory should be at least 32GB, with adequate cooling and power supply. q4 quantization shrinks the model considerably compared with fp16, but it still demands robust hardware for large-scale tasks.

- fp16, q2, q3, q4, q5, q6, q8
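The hardware figures above follow from simple arithmetic: weight memory ≈ parameters × bits per weight ÷ 8. The following sketch applies that formula to each listed quantization level. It assumes 70e9 parameters and the nominal bit width implied by each label. Real quantized files also store per-block scales and keep some tensors at higher precision, so actual sizes run somewhat larger. The helpers `weight_gb` and `fits` are hypothetical names.

```python
# Rough weight-memory estimate per quantization level (nominal bit widths).
# Assumptions: 70e9 parameters; real files add per-block scale overhead and
# mixed-precision tensors, so true sizes are somewhat larger than printed here.

PARAMS = 70e9
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(bits: float, params: float = PARAMS) -> float:
    """Decimal gigabytes needed just to hold the weights."""
    return params * bits / 8 / 1e9


def fits(bits: float, vram_gb: float, overhead_gb: float = 0.0) -> bool:
    """True if weights plus an allowance for KV cache/activations fit in VRAM."""
    return weight_gb(bits) + overhead_gb <= vram_gb


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name:>4}: ~{weight_gb(bits):6.1f} GB | "
              f"fits 24 GB: {fits(bits, 24)} | fits 80 GB: {fits(bits, 80)}")
```

At a nominal 4 bits, the weights alone come to about 35 GB, which already exceeds a single 24 GB card before any KV cache is counted. Passing a non-zero `overhead_gb` folds the context-length cost into the same check.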

Conclusion

Llama3.3 70B Instruct is a 70B-parameter large language model developed by Meta Llama Enterprise. It is designed for multilingual dialogue and complex tasks and has a 128k context length. It supports commercial use, research, and natural language generation under specific licensing terms, and it requires robust hardware for deployment.

References

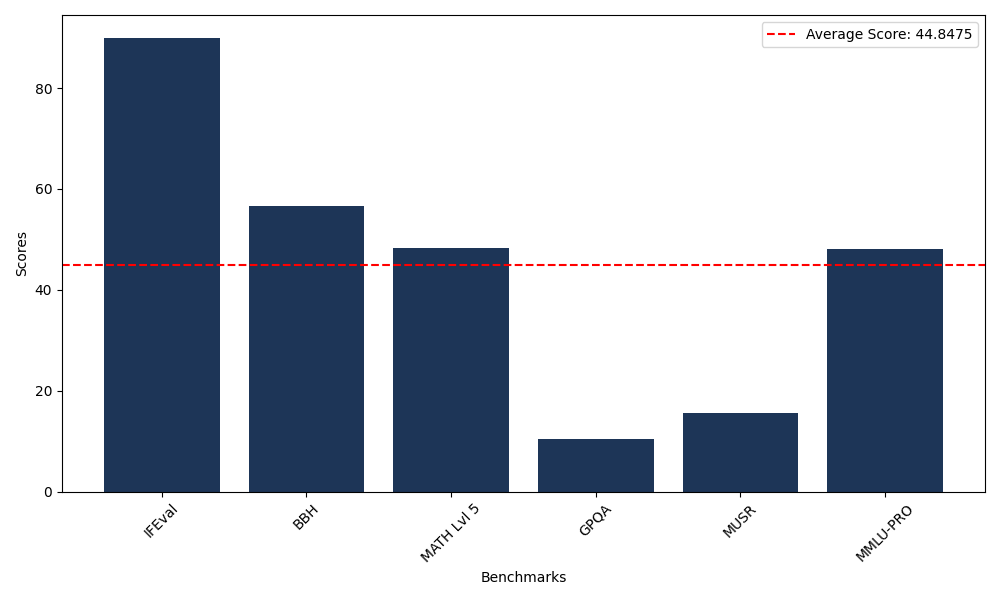

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 89.98 |
| Big Bench Hard (BBH) | 56.56 |
| Competition Mathematics, Level 5 (MATH Lvl 5) | 48.34 |
| Graduate-Level Google-Proof Q&A (GPQA) | 10.51 |
| Multistep Soft Reasoning (MuSR) | 15.57 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 48.13 |
