Marco O1 7B - Model Details

Marco O1 7B is a large language model developed by AIDC-AI, a company specializing in advanced AI research. With 7 billion parameters, it is designed for efficient and scalable performance. The model is released under the Apache License 2.0, which permits broad usage and modification. It is fine-tuned on a Chain-of-Thought (CoT) dataset to strengthen its reasoning, making it suitable for complex problem-solving tasks.

Description of Marco O1 7B

Marco-o1 is a large language model designed for open-ended problem-solving, leveraging techniques like Chain-of-Thought (CoT) fine-tuning, Monte Carlo Tree Search (MCTS), and reasoning action strategies to enhance its ability to tackle complex tasks. It emphasizes multilingual applications and improves translation of colloquial nuances, aiming to bridge gaps in real-world language understanding. While inspired by OpenAI's o1, it remains an ongoing research effort with limitations in fully achieving o1-like performance, reflecting its experimental and evolving nature.

Parameters & Context Length of Marco O1 7B

Marco O1 7B has 7b parameters, placing it in the mid-scale range of open-source LLMs and offering a balance between performance and resource efficiency for tasks of moderate complexity. Its 128k context length falls into the very long context category: it can process extensive texts, but doing so requires significant computational resources. Together these allow the model to handle complex reasoning tasks while remaining scalable, though the 7b parameter count may limit it on the most demanding applications compared to larger models.

- Parameter Size: 7b – mid-scale models balance performance and efficiency for moderate complexity.

- Context Length: 128k – very long contexts enable handling of extensive texts but demand high resources.
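To give a rough sense of why a very long context demands high resources, the sketch below estimates the size of the attention key/value cache at full context length. The architecture values (`num_layers=28`, `num_kv_heads=4`, `head_dim=128`) and the 16-bit cache precision are illustrative assumptions for a 7B-class model with grouped-query attention. They are not taken from this page and should be replaced with the values in the model's actual configuration.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Estimate key/value cache size in bytes for one sequence.

    Each layer stores one key and one value vector per KV head for every
    token, hence the leading factor of 2.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


if __name__ == "__main__":
    context_tokens = 128 * 1024  # "128k" read as 131,072 tokens (assumption)
    # Hypothetical architecture values, for illustration only.
    size = kv_cache_bytes(context_tokens, num_layers=28, num_kv_heads=4, head_dim=128)
    print(f"KV cache at full context: {size / 2**30:.1f} GiB")
```

Under these assumptions the cache alone takes several gibibytes on top of the model weights, and it grows linearly with the number of tokens actually held in context.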

Possible Intended Uses of Marco O1 7B

Marco O1 7B is designed for complex problem-solving, translation of colloquial and nuanced language, and exploration of open-ended questions that lack clear evaluation standards, which makes it a candidate for scenarios that require flexible reasoning. Its support for English and Chinese suggests possible applications in cross-lingual analysis or localized content creation, though further testing would be needed to confirm effectiveness. The Chain-of-Thought (CoT) fine-tuning could also suit educational tools, creative writing assistance, or research-oriented tasks where iterative reasoning is critical. These uses remain speculative and would require thorough evaluation against specific goals.

- Intended Uses: complex problem-solving tasks, translation of colloquial and nuanced language, exploration of open-ended questions with unclear standards

- Supported Languages: English, Chinese

- Purpose: designed for reasoning and language tasks with potential for diverse applications

- Model Name: Marco O1 7B

Possible Applications of Marco O1 7B

Possible applications of Marco O1 7B include complex problem-solving, translation of colloquial and nuanced language, exploration of open-ended questions with unclear standards, and support for multilingual content creation. Its 7b parameter size and 128k context length suggest it might suit educational tools, creative writing assistance, or research-driven projects where iterative reasoning and language flexibility are key. The model's performance in these areas remains unproven, so each application requires careful validation against specific needs. Its support for English and Chinese points to a possible role in localized or cross-lingual tasks, though this too would need thorough testing.

- complex problem-solving tasks

- translation of colloquial and nuanced language

- exploration of open-ended questions with unclear standards

- support for multilingual content creation

Quantized Versions & Hardware Requirements of Marco O1 7B

The q4 quantization of Marco O1 7B requires a GPU with at least 16GB of VRAM for efficient operation, which puts it within reach of mid-range graphics cards. This quantization trades some precision for a smaller memory footprint, allowing the model to run on hardware that might struggle with higher-precision formats such as fp16. The 7b parameter count keeps the model accessible to many users, though the q8 and fp16 versions demand more resources.

- Available Versions: fp16, q8, q4
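The relative cost of the three formats can be sketched with simple arithmetic: weights-only memory is roughly the parameter count times the bits per weight, divided by 8. The bit widths below are nominal assumptions. Real quantized files store extra scale metadata, and inference also needs memory for activations and the attention cache, so actual requirements are higher than these figures.

```python
# Nominal bits per weight for each format (assumed values); real quantized
# files carry additional scale/offset metadata, so treat results as lower bounds.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in NOMINAL_BITS.items():
        print(f"{name}: ~{weight_memory_gb(7e9, bits):.1f} GB of weights")
```

For 7 billion parameters this gives about 14 GB at fp16, 7 GB at q8, and 3.5 GB at q4 for the weights alone, which is why the quantized versions fit on hardware that fp16 does not.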

Conclusion

Marco O1 7B is a large language model developed by AIDC-AI, featuring 7b parameters and a 128k context length. It is designed for complex reasoning tasks and multilingual applications, with a focus on Chain-of-Thought (CoT) fine-tuning. It is released under the Apache License 2.0, which offers flexibility for research and development, and it emphasizes enhanced problem-solving and translation of colloquial nuances.

References

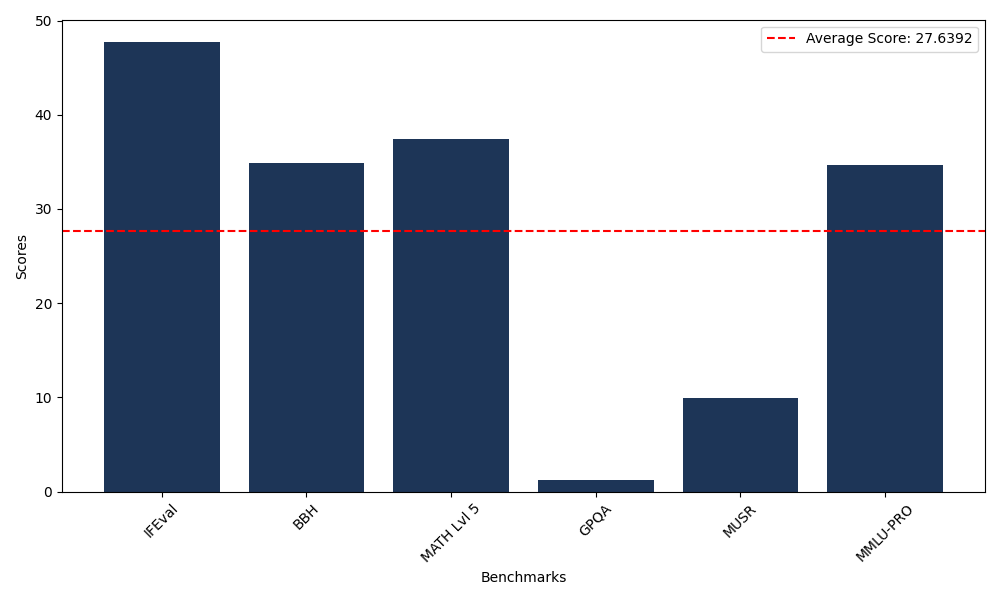

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 47.71 |
| Big Bench Hard (BBH) | 34.84 |
| MATH Level 5 (MATH Lvl 5) | 37.46 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.23 |
| Multistep Soft Reasoning (MuSR) | 9.96 |
| Massive Multitask Language Understanding Pro (MMLU-Pro) | 34.63 |
