Mistral 7B - Model Details

Mistral 7B is a large language model developed by Mistral AI. It has 7B parameters and is released under the Apache License 2.0, which permits use, modification, and redistribution. It is reported to outperform larger models such as Llama 2 13B and Llama 1 34B on benchmarks while remaining proficient in code and English tasks.

Description of Mistral 7B

Mistral-7B-v0.1 is a pretrained base model with 7 billion parameters, intended for generative text tasks. It outperforms Llama 2 13B on all benchmarks tested. It has no built-in moderation mechanisms, so anyone deploying it must supply their own content filtering where that is needed. Because the weights are openly available, the model can be customized, for example by fine-tuning, for specific text generation tasks.

Parameters & Context Length of Mistral 7B

Mistral 7B has 7B parameters, which places it in the small to mid-scale category: it is comparatively fast and light on resources, and suited to tasks of moderate complexity. Its 4k context length handles short to moderate-length texts but limits its ability to process very long documents in a single pass. This combination suits applications that prioritize speed and simplicity over very long inputs or maximum capability.

- Parameters: 7B. Small to mid-scale model, optimized for resource efficiency and moderate tasks.

- Context length: 4k. Short context, suitable for brief texts but limited for extended content.

- Implications: Balances performance and accessibility, ideal for applications where speed and simplicity are critical.
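
As a rough illustration of working within the 4k context length, the sketch below splits a long token sequence into pieces that fit the window while leaving room for generated output. The 4096-token reading of "4k", the 512-token output reserve, and the function name `chunk_tokens` are assumptions made for illustration. Whitespace splitting stands in for the model's real tokenizer, which would produce different counts.

```python
def chunk_tokens(tokens, context_length=4096, reserved_for_output=512):
    """Split a token list into chunks that fit the context window.

    context_length=4096 is an assumed reading of "4k"; reserved_for_output
    is a hypothetical budget kept free for the model's generated tokens.
    """
    budget = context_length - reserved_for_output
    if budget <= 0:
        raise ValueError("reserved_for_output must be smaller than context_length")
    # Slice the sequence into consecutive, non-overlapping pieces.
    return [tokens[i:i + budget] for i in range(0, len(tokens), budget)]


if __name__ == "__main__":
    # Whitespace splitting is only a stand-in for a real tokenizer.
    text = "word " * 10000
    pieces = chunk_tokens(text.split())
    print(len(pieces), [len(p) for p in pieces])
```

Non-overlapping chunks are the simplest choice; tasks that depend on continuity between chunks may need overlapping windows instead.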

Possible Intended Uses of Mistral 7B

Mistral 7B is a general-purpose large language model with 7B parameters and a 4k context length, with possible uses across several domains. In research, it could be used to explore natural language processing, data analysis, or algorithm development, though further testing is needed to confirm its effectiveness. In development, it could support prototyping tools, code generation, or AI experimentation, but its suitability for specific tasks requires careful evaluation. In education, it might help create interactive learning materials, language practice, or content summaries, though its performance in these areas has not been established. These uses reflect the model’s flexibility, but each one needs to be validated before the model is relied on.

- Mistral 7B

- Research

- Development

- Education

Possible Applications of Mistral 7B

Mistral 7B, with 7B parameters and a 4k context length, has possible applications in several areas. In content creation, it could generate text for creative or technical purposes. In code generation, it might assist developers with drafting or optimizing scripts. In educational tools, it could help build interactive learning resources or language practice exercises. In research, it could support tasks such as analyzing datasets or generating hypotheses. None of these uses has been validated here, so each application must be thoroughly evaluated and tested before deployment to ensure it meets the specific requirements.

- Mistral 7B

- Content creation

- Code generation

- Educational tools

- Research tasks

Quantized Versions & Hardware Requirements of Mistral 7B

The q4 (4-bit) quantized version of Mistral 7B is a middle option that trades some precision for lower memory use. The recommendation given here for running it efficiently is a GPU with at least 16GB VRAM, on a system with 32GB+ RAM and adequate cooling. It may also run on consumer-grade GPUs, but users should verify compatibility with their own hardware. Other quantized versions offer different trade-offs: lower bit widths reduce memory use at the cost of precision, and higher bit widths do the reverse.

- Mistral 7B: fp16, q2, q3, q4, q5, q6, q8
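
To make the trade-off between the listed versions concrete, the sketch below estimates the memory needed for the weights alone at each precision. It assumes exactly 7e9 parameters and a nominal bit width taken from each label (for example, q4 as 4 bits per weight). Real quantized files also store scaling data and are somewhat larger, and the estimate excludes the KV cache, activations, and runtime overhead. The names `NOMINAL_BITS` and `estimate_weight_gb` are hypothetical.

```python
# Assumed nominal bits per weight for each listed version.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def estimate_weight_gb(num_params, bits_per_weight):
    """Return approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def estimate_all(num_params=7e9):
    """Estimate weight storage for every version in NOMINAL_BITS."""
    return {name: estimate_weight_gb(num_params, bits)
            for name, bits in NOMINAL_BITS.items()}


if __name__ == "__main__":
    for name, gb in estimate_all().items():
        print(f"{name}: ~{gb:.2f} GB for weights only")
```

Under these assumptions the weights come to about 14 GB at fp16 and about 3.5 GB at q4. Actual memory use will be higher once context and runtime overhead are included.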

Conclusion

Mistral 7B is a large language model with 7B parameters and a 4k context length, released under the Apache License 2.0. It serves as a base model without built-in moderation, offering flexibility for applications requiring customization and efficiency.

References

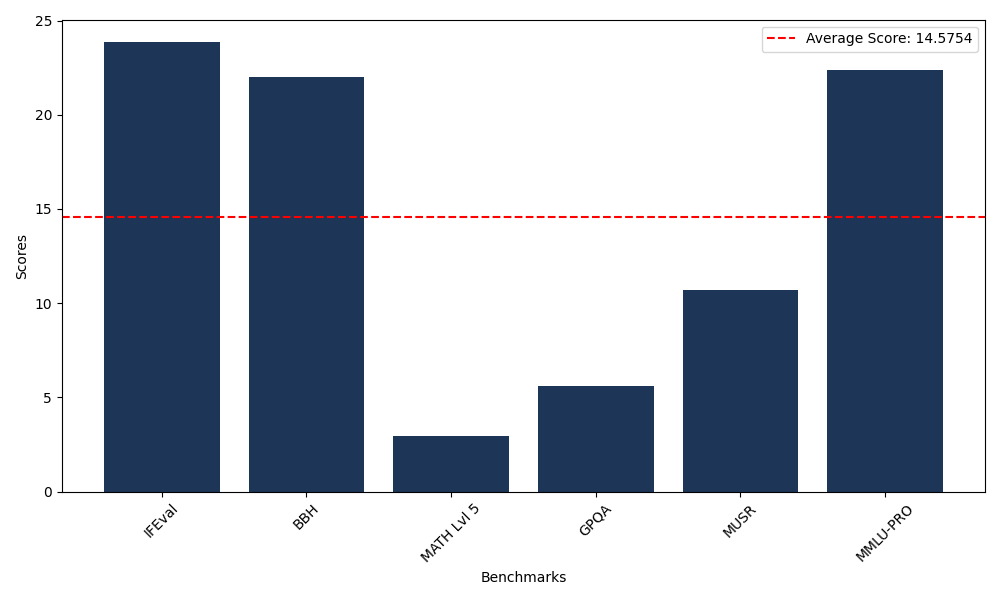

Benchmarks

| Benchmark | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 23.86 |
| BIG-Bench Hard (BBH) | 22.02 |
| MATH (Level 5) | 2.95 |
| Graduate-Level Google-Proof Q&A (GPQA) | 5.59 |
| Multistep Soft Reasoning (MuSR) | 10.68 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 22.36 |
