Mistral 7B Instruct - Model Details

Mistral 7B Instruct is a large language model developed by Mistral AI, a company specializing in advanced AI research. With about 7 billion parameters, it is designed to deliver strong performance across a range of tasks. The model is released under the Apache License 2.0, which permits broad use and modification. The Mistral 7B family is particularly noted for outperforming larger models: it beats Llama 2 13B on all benchmarks Mistral AI reported and Llama 1 34B on many of them, while approaching CodeLlama 7B on code and remaining strong on English tasks.

Description of Mistral 7B Instruct

The Mistral-7B-Instruct-v0.2 Large Language Model (LLM) is an instruction fine-tuned version of Mistral-7B-v0.2, optimized for interactive tasks and for following user instructions. Compared with Mistral-7B-v0.1, it has a 32k context window (up from 8k in v0.1), a RoPE base frequency of theta = 1e6 (a larger base of the kind commonly used to support longer contexts), and no sliding-window attention, so each token can attend to every earlier token in the window. These changes improve its handling of long, complex interactions while keeping strong performance on code and language tasks. The model is part of Mistral AI's Mistral series and targets applications that need precise, context-aware responses.
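Raising theta slows the rotation of each RoPE dimension pair. The sketch below assumes the standard RoPE frequency schedule, in which pair i of a d-dimensional attention head rotates by theta^(-2i/d) radians per token, and a head dimension of 128 (the published Mistral 7B value). The function names are illustrative, not Mistral's code. It counts how many pairs complete less than one full rotation over a 32k-token span.

```python
import math

# Illustrative sketch of the standard RoPE frequency schedule theta ** (-2i / d).
# Assumption: head dimension d = 128, the published Mistral 7B head size.
HEAD_DIM = 128
CONTEXT = 32_768  # a 32k-token window


def rope_wavelengths(theta: float, head_dim: int = HEAD_DIM) -> list:
    """Return the wavelength (tokens per full rotation) of each dimension pair."""
    wavelengths = []
    for i in range(head_dim // 2):
        inv_freq = theta ** (-2 * i / head_dim)  # radians advanced per token
        wavelengths.append(2 * math.pi / inv_freq)
    return wavelengths


def pairs_longer_than(theta: float, span: int = CONTEXT) -> int:
    """Count pairs that do not finish a full rotation within `span` tokens."""
    return sum(1 for w in rope_wavelengths(theta) if w > span)


for theta in (1e4, 1e6):
    longest = max(rope_wavelengths(theta))
    print(f"theta={theta:.0e}: longest wavelength ~{longest:,.0f} tokens, "
          f"{pairs_longer_than(theta)} of {HEAD_DIM // 2} pairs exceed 32k")
```

With the original RoPE base of 1e4 only a few pairs rotate that slowly, while with 1e6 a much larger share do, giving the model more slowly varying position signals across long inputs. This is the usual rationale for a larger base; how much it contributes to v0.2's long-context quality is an empirical question.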

Parameters & Context Length of Mistral 7B Instruct

Mistral 7B Instruct has 7B parameters, placing it in the small to mid-scale range of open-source LLMs and balancing efficiency against performance for moderately complex tasks. Its 32k context length lets it handle long texts, which suits extended conversations and document analysis, although long prompts cost more memory and compute than short ones because the key/value cache grows with every token kept in context.

- Parameter Size: 7B (small to mid-scale; efficient for moderate tasks)

- Context Length: 32k (long context; suited to extended texts but resource-intensive)
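The extra cost of a long context is mostly the key/value cache, which stores keys and values for every token in every layer. The sketch below estimates its size for a single sequence, assuming Mistral 7B's published architecture (32 layers, 8 key/value heads via grouped-query attention, head dimension 128) and 2-byte fp16/bf16 cache entries. It ignores framework overhead, batching, and cache quantization.

```python
# Rough KV-cache size for one sequence. Assumptions: Mistral 7B's published
# architecture and fp16/bf16 cache entries; no batching or runtime overhead.
N_LAYERS = 32
N_KV_HEADS = 8        # grouped-query attention: fewer K/V heads than query heads
HEAD_DIM = 128
BYTES_PER_VALUE = 2   # fp16 / bf16


def kv_cache_bytes(num_tokens: int) -> int:
    """Bytes needed to cache keys and values for `num_tokens` tokens."""
    # Factor 2 covers keys plus values, stored in every layer.
    per_token = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


for tokens in (4_096, 8_192, 32_768):
    print(f"{tokens:>6} tokens -> {kv_cache_bytes(tokens) / 2**30:.2f} GiB")
```

Under these assumptions each token costs 128 KiB, so a full 32k context needs about 4 GiB of cache on top of the model weights, compared with about 1 GiB at 8k.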

Possible Intended Uses of Mistral 7B Instruct

Mistral 7B Instruct is designed for instruction following, text generation, and question answering, which makes it a versatile tool for a range of applications. Its 7B parameter size and 32k context length suggest possible uses such as writing detailed narratives, answering complex queries, or generating structured content, and its instruction tuning may help with tasks that require following nuanced instructions across long contexts. As with any large language model, these uses need careful evaluation against the specific requirements, constraints, and real-world conditions in which the model will run.

- Instruction following

- Text generation

- Question answering
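Instruction following depends on prompts being wrapped in the format the model was tuned on. Mistral's model card for Mistral-7B-Instruct describes surrounding each user turn with [INST] and [/INST] and starting the conversation with a beginning-of-sentence token. The helper below, build_prompt (a hypothetical name), is a minimal sketch of that format as a plain string; exact whitespace handling has varied between template versions, so in practice the tokenizer's own chat template should be preferred.

```python
from typing import List, Optional, Tuple

# Sketch of the [INST] ... [/INST] instruction format. Assumption: "<s>" and "</s>"
# are written as literal strings for illustration; real code should let the
# tokenizer add the BOS/EOS tokens (e.g. through its chat template).
BOS, EOS = "<s>", "</s>"


def build_prompt(turns: List[Tuple[str, Optional[str]]]) -> str:
    """Format (user, assistant) turns; the final assistant reply may be None."""
    parts = [BOS]
    for user_msg, assistant_msg in turns:
        parts.append(f"[INST] {user_msg} [/INST]")
        if assistant_msg is not None:
            # A completed assistant reply is closed with the end-of-sequence marker.
            parts.append(f"{assistant_msg}{EOS} ")
    return "".join(parts)


print(build_prompt([("What is RoPE?", "A rotary position encoding."),
                    ("Why raise its theta?", None)]))
```

Leaving the last assistant slot empty is what asks the model to generate the next reply.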

Possible Applications of Mistral 7B Instruct

Possible applications of Mistral 7B Instruct include instruction-based task automation, such as generating structured workflows or working through multi-step queries; creative writing and content drafting, building on its text generation; and interactive learning tools or information-retrieval systems built on its question-answering ability. Real-world performance depends on the task context and on how well it matches the model's training data, so each application must be evaluated and tested before use.

- Instruction-based task automation

- Creative writing and content drafting

- Interactive learning tools and information retrieval

Quantized Versions & Hardware Requirements of Mistral 7B Instruct

The q4 quantized version of Mistral 7B Instruct offers a middle ground between precision and performance; a GPU with at least 16GB of VRAM and 32GB of system memory is recommended to run it efficiently. It needs considerably less memory than higher-precision formats such as fp16, making it a reasonable choice for mid-range hardware. Exact requirements vary with workload, context length, and inference implementation.

Available versions: fp16, q2, q3, q4, q5, q6, q8
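A rough sense of how the listed formats differ comes from multiplying the parameter count by the bits stored per weight. This sketch assumes about 7.24 billion parameters and treats each label's number as its nominal bit width. Real quantized files use block-wise scale factors and sometimes mixed precision, so their sizes run somewhat higher, and the KV cache and runtime buffers come on top.

```python
# Approximate weight-only memory per precision. Assumptions: ~7.24B parameters
# and the nominal bit width implied by each label (scale factors not counted).
NUM_PARAMS = 7_240_000_000
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_bytes(fmt: str, num_params: int = NUM_PARAMS) -> int:
    """Nominal bytes needed to hold the weights in format `fmt`."""
    return num_params * BITS_PER_WEIGHT[fmt] // 8


for fmt in BITS_PER_WEIGHT:
    print(f"{fmt:>5}: ~{weight_bytes(fmt) / 2**30:.1f} GiB")
```

By this estimate the q4 weights take about 3.4 GiB versus about 13.5 GiB for fp16, which is why q4 fits comfortably on mid-range GPUs.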

Conclusion

Mistral 7B Instruct is a 7B-parameter large language model with a 32k context length, designed for instruction following, text generation, and question answering, and released under the Apache License 2.0. It balances efficiency and performance, making it suitable for tasks that require nuanced understanding and long-context awareness.

References

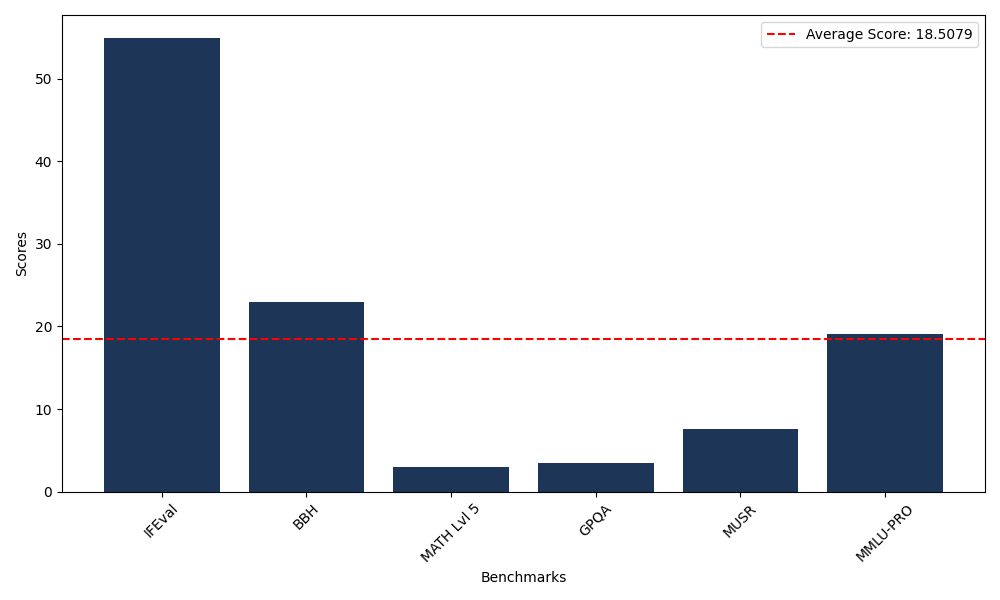

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 54.96 |
| Big-Bench Hard (BBH) | 22.91 |
| MATH, Level 5 problems (MATH Lvl 5) | 3.02 |
| Graduate-Level Google-Proof Q&A (GPQA) | 3.47 |
| Multistep Soft Reasoning (MuSR) | 7.61 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 19.08 |
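Some scores look very low (GPQA 3.47, MATH Lvl 5 3.02). These six benchmarks match the Hugging Face Open LLM Leaderboard v2 suite, which rescales results so that chance-level accuracy maps to 0; if these figures follow that convention, they are not raw accuracies. The hypothetical helpers normalize and denormalize below sketch that rescaling for a single multiple-choice task, assuming a linear map from [chance, 1] to [0, 100].

```python
# Hypothetical helpers illustrating baseline normalization. Assumption: scores
# are rescaled linearly so chance-level accuracy -> 0 and perfect accuracy -> 100.
# This single-task version simplifies benchmarks that combine several subtasks.


def normalize(raw_accuracy: float, chance: float) -> float:
    """Map raw accuracy in [0, 1] to [0, 100], with chance level at 0."""
    scaled = (raw_accuracy - chance) / (1.0 - chance)
    return max(0.0, scaled) * 100.0  # below-chance results are clipped to 0


def denormalize(score: float, chance: float) -> float:
    """Recover raw accuracy from a normalized score above 0."""
    return chance + (score / 100.0) * (1.0 - chance)


# GPQA questions have 4 options (chance 0.25); MMLU-Pro questions have 10 (chance 0.1).
for name, score, chance in (("GPQA", 3.47, 0.25), ("MMLU-Pro", 19.08, 0.10)):
    print(f"{name}: normalized {score} -> raw accuracy ~{denormalize(score, chance):.1%}")
```

Under this assumption, the GPQA score of 3.47 corresponds to a raw accuracy of roughly 27.6%, only slightly above the 25% expected from guessing.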
