Neural Chat 7B - Model Details

Neural Chat 7B is a 7-billion-parameter large language model developed by Intel. It is fine-tuned to improve benchmark performance and draws on Intel's work in computational optimization to keep language processing efficient. The source material does not state specific licensing details.

Description of Neural Chat 7B

Neural Chat 7B is a 7B-parameter large language model based on Mistral-7B-v0.1. It was fine-tuned on the Open-Orca/SlimOrca dataset and then aligned with Direct Preference Optimization (DPO) using the Intel/orca_dpo_pairs dataset. It is optimized for Intel Gaudi 2 processors and supports a context length of 8192 tokens.
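To make the alignment step more concrete, the following is a minimal sketch of the per-example DPO (Direct Preference Optimization) loss, assuming the sequence log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response are already available from both the model being trained and a frozen reference model. The function name, argument names, and the `beta=0.1` default are illustrative assumptions, not details of Intel's training setup.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss: -log(sigmoid(beta * margin)).

    All inputs are summed log-probabilities of a full response.
    beta is a hypothetical default, not a value from the source.
    """
    # How much more the policy likes each response than the reference does.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    x = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(x)) = log(1 + exp(-x)), written in a numerically stable form.
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))

# When the policy equals the reference, the margin is zero and the loss is log(2).
baseline = dpo_loss(-1.0, -1.0, -1.0, -1.0)
# Raising the chosen response and lowering the rejected one reduces the loss.
improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
```

The loss falls as the model raises the likelihood of the chosen response relative to the rejected one, measured against the reference model, which is why a dataset of preference pairs such as Intel/orca_dpo_pairs is needed for this step.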

Parameters & Context Length of Neural Chat 7B

At 7B parameters, Neural Chat 7B sits in the small to mid-scale range of open-source LLMs, balancing performance against resource use for tasks of moderate complexity. Its 8K context length handles moderate-length inputs, which suits tasks that need extended context but not the longest sequences. The design prioritizes efficiency on Intel Gaudi 2 processors, which keeps deployment practical without excessive computational demands.

- Parameter Size: 7B

- Context Length: 8K (8192 tokens)
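As a rough illustration of what an 8K context costs in memory, the sketch below estimates the size of the attention key/value cache at full context. The layer count, number of key/value heads, and head dimension are assumptions taken from the published Mistral-7B-v0.1 configuration rather than from this page, and the estimate assumes 16-bit cache values and ignores sliding-window attention, which can cap the cache in practice.

```python
def kv_cache_bytes(context_tokens, n_layers=32, n_kv_heads=8,
                   head_dim=128, bytes_per_value=2):
    """Estimate KV-cache size for one sequence.

    Defaults are assumed values for a Mistral-7B-style model
    (not stated in the source); bytes_per_value=2 means fp16.
    """
    # One key vector and one value vector per layer and per KV head.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return context_tokens * per_token

full_context = kv_cache_bytes(8192)
print(f"KV cache at 8192 tokens: {full_context / 2**30:.2f} GiB")
```

Under these assumptions the cache adds about 1 GiB per sequence on top of the model weights, and it scales linearly with both context length and the number of concurrent sequences.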

Possible Intended Uses of Neural Chat 7B

With 7B parameters and an 8K context length, Neural Chat 7B is a candidate for text generation, question answering, and code generation: producing concise or structured content, assisting with information retrieval, or drafting programming solutions. These are possible uses rather than guaranteed ones, and each should be tested against the specific scenario before practical implementation. The model's optimization for Intel Gaudi 2 processors may also suit environments where resource usage is a priority.

- text generation

- question answering

- code generation

Possible Applications of Neural Chat 7B

Possible applications of Neural Chat 7B include content creation, interactive dialogue systems, and technical documentation assistance, along with generating structured text, answering domain-specific queries, and supporting code-related tasks. Its suitability for any of these has not been established, so each application must be evaluated and tested before implementation. The optimization for Intel Gaudi 2 processors may help in environments where resources are constrained.

- content creation

- interactive dialogue systems

- technical documentation assistance

- code-related tasks

Quantized Versions & Hardware Requirements of Neural Chat 7B

The q4 quantization of Neural Chat 7B is listed as needing a GPU with at least 16GB of VRAM and a system with 32GB of RAM for optimal performance, which puts it within reach of mid-range hardware. This version trades some precision for efficiency. Actual requirements vary with workload and implementation, so verify compatibility with your own setup. The available versions are:

- fp16, q2, q3, q4, q5, q6, q8
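For a sense of how the listed versions differ in size, the sketch below estimates the memory taken by the weights alone at each level. It assumes exactly 7 billion parameters and treats each label as its nominal bit width (q4 as 4 bits per weight, and so on); real quantization formats store extra scale data, so actual files are somewhat larger. The estimate is a lower bound that excludes activations, the attention cache, and runtime overhead, so it does not replace the hardware figures given above.

```python
# Nominal bits per weight for each listed version (an assumption:
# real quantized formats add per-block scale metadata).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}

def weight_gb(n_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

N_PARAMS = 7e9  # "7B" taken at face value

for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gb(N_PARAMS, bits):.1f} GB")
```

Halving the bit width halves the weight footprint, which is the main reason lower quantization levels fit on smaller GPUs, at some cost in precision.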

Conclusion

Neural Chat 7B is a 7B-parameter large language model based on Mistral-7B-v0.1, optimized for Intel Gaudi 2 processors, with an 8K context length and fine-tuning aimed at benchmark performance. It is a possible fit for text generation, question answering, and code generation, though each use case needs its own evaluation.

References

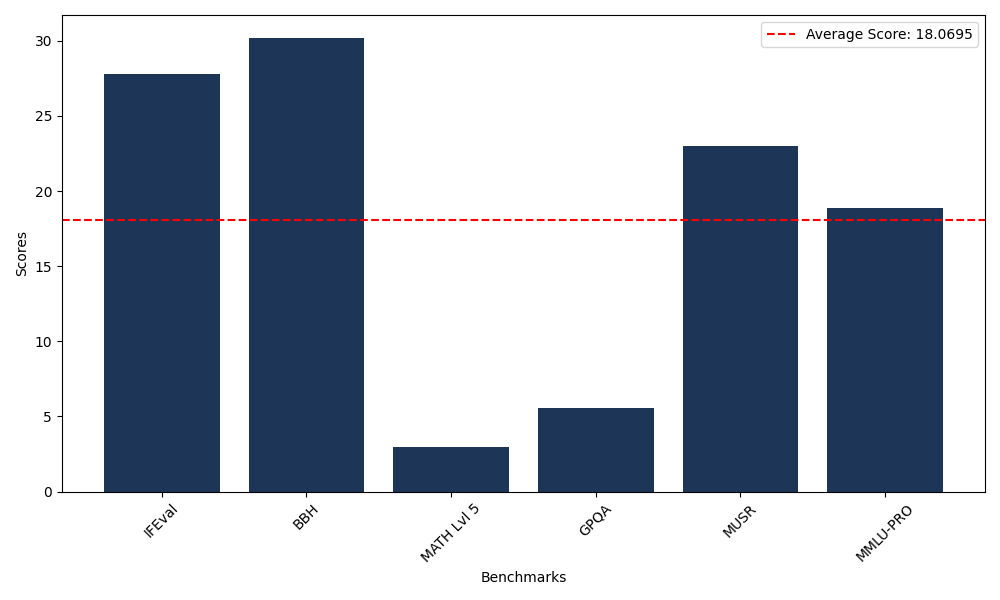

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 27.78 |
| BIG-Bench Hard (BBH) | 30.21 |
| MATH, Level 5 problems (MATH Lvl 5) | 2.95 |
| Graduate-Level Google-Proof Q&A (GPQA) | 5.59 |
| Multistep Soft Reasoning (MuSR) | 23.02 |
| Massive Multitask Language Understanding, Pro (MMLU-PRO) | 18.87 |
