Notux 8X7B - Model Details

Notux 8X7B is a large language model developed by Argilla, a company focused on human-centric AI. It uses an 8x7B Mixture-of-Experts architecture designed to balance performance and efficiency, and it is released under the MIT License, which keeps it openly available and flexible for developers. Its primary focus is refining human-centric fine-tuning techniques, with the aim of improving collaboration between AI systems and their human users.

Description of Notux 8X7B

Notux 8X7B is a preference-tuned version of mistralai/Mixtral-8x7B-Instruct-v0.1, trained on the argilla/ultrafeedback-binarized-preferences-cleaned dataset with DPO (Direct Preference Optimization). It belongs to the Notus family of models, in which the Argilla team explores data-first and preference-tuning approaches such as dDPO (distilled DPO). Its base is a MoE (Mixture of Experts) model that had already been fine-tuned with DPO, so Notux adds a further round of preference-based optimization aimed at human-centric alignment.
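
To make the DPO objective concrete, here is a minimal per-pair sketch of the standard DPO loss. It assumes you already have the summed token log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response under the model being tuned and under a frozen reference model (for Notux, presumably Mixtral-8x7B-Instruct-v0.1). The function names and the beta=0.1 default are illustrative assumptions, not Argilla's training code.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair (beta=0.1 is an assumed default)."""
    # How much more the tuned policy likes each response than the reference does
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # Reward the policy for widening the gap in favour of the chosen response
    logits = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(logits)) == softplus(-logits)
    return softplus(-logits)
```

When the policy still matches the reference, the loss is log 2 (about 0.693); it falls as the policy shifts probability toward the chosen response relative to the reference, which is what pulls the model toward the human-preferred answers in the dataset.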

Parameters & Context Length of Notux 8X7B

Notux 8X7B has 8x7B parameters (eight expert networks per layer, about 46.7B parameters in total, of which roughly 12.9B are active for each token). This places it in the mid-scale category of open-source LLMs and offers a balance between performance and resource efficiency for tasks of moderate complexity. Its 4k context length suits short to moderate inputs but limits its ability to handle very long texts. This configuration is well suited to applications that need fast processing without heavy computational demands, though the model may struggle with long or highly complex input sequences.
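
The efficiency side of that balance comes from the Mixture-of-Experts design: in Mixtral-style layers a router scores all eight expert feed-forward networks for each token, keeps the top two, and mixes their outputs with a softmax over just those two scores. The sketch below illustrates that top-2 routing for a single token, with plain Python lists standing in for tensors; the function name top2_moe and the toy experts are hypothetical.

```python
import math
from typing import Callable, List

Vector = List[float]


def top2_moe(x: Vector, router_logits: Vector,
             experts: List[Callable[[Vector], Vector]]) -> Vector:
    """Mix the outputs of the two highest-scoring experts for one token."""
    # Indices of the two largest router logits
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:2]
    # Softmax over only the selected logits gives the mixing weights
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Only the two chosen experts are evaluated; the rest are skipped
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out
```

Because only two of eight experts run per token, the expert feed-forward compute per token is about a quarter of what evaluating every expert would cost, even though all eight must still be held in memory.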

- Name: Notux 8X7B

- Parameter Size: 8x7b

- Context Length: 4k

- Implications: Mid-scale performance, short to moderate context handling, efficient for simpler tasks.
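
Because "8x7B" names eight experts built around a roughly 7B-parameter base rather than a literal 56B model (attention and embedding weights are shared, only the feed-forward blocks are duplicated), a quick count helps. This sketch assumes Notux keeps the published Mixtral-8x7B configuration values shown in the constants and ignores minor details; under those assumptions it reproduces the commonly cited totals.

```python
# Assumed architecture values: the published Mixtral-8x7B config,
# which a fine-tune such as Notux 8X7B is expected to inherit.
HIDDEN = 4096        # hidden_size
FFN = 14336          # intermediate_size of each expert
LAYERS = 32          # num_hidden_layers
HEADS = 32           # num_attention_heads
KV_HEADS = 8         # num_key_value_heads (grouped-query attention)
EXPERTS = 8          # num_local_experts
ACTIVE_EXPERTS = 2   # num_experts_per_tok
VOCAB = 32000        # vocab_size


def count_params(experts_used: int) -> int:
    """Parameter count when `experts_used` experts per layer are included."""
    head_dim = HIDDEN // HEADS
    # q and o projections are HIDDEN x HIDDEN; k and v are smaller under GQA
    attn = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_HEADS * head_dim
    # Each expert is a SwiGLU feed-forward block with three HIDDEN x FFN matrices
    expert = 3 * HIDDEN * FFN
    router = HIDDEN * EXPERTS
    norms = 2 * HIDDEN
    per_layer = attn + experts_used * expert + router + norms
    # Input embedding + untied output head + final norm
    return LAYERS * per_layer + 2 * VOCAB * HIDDEN + HIDDEN


total = count_params(EXPERTS)
active = count_params(ACTIVE_EXPERTS)
print(f"total  ~ {total / 1e9:.1f}B")   # → total  ~ 46.7B
print(f"active ~ {active / 1e9:.1f}B")  # → active ~ 12.9B
```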

Possible Intended Uses of Notux 8X7B

Notux 8X7B is a versatile model whose possible uses include text generation for a range of tasks, code generation and debugging, and multilingual communication support. Its capabilities in German, English, Spanish, French, and Italian suggest it could assist with cross-language collaboration or content creation. The model may also suit experimentation in dynamic task environments where adaptability and language diversity matter. These uses remain possibilities rather than validated capabilities, so each should be tested against specific requirements before it is put into practice.

- text generation for various tasks

- code generation and debugging

- multilingual communication support
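
Most of these uses come down to sending a prompt and reading back text. Assuming the model is served locally through Ollama (an assumption; this page does not name a runtime), the sketch below queries Ollama's /api/generate endpoint using only the Python standard library. The model tag notux, the localhost URL (Ollama's default port), and the helper names build_request and generate are illustrative assumptions; num_ctx is set to 4096 to match the listed context length.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "notux") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {
        "model": model,                # assumed model tag
        "prompt": prompt,
        "stream": False,               # return one JSON object instead of a stream
        "options": {"num_ctx": 4096},  # match the 4k context length
    }


def generate(prompt: str, model: str = "notux") -> str:
    """POST the request to the server and return the generated text."""
    body = json.dumps(build_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `generate("Translate into Italian: The meeting has been moved to Friday.")` would exercise the multilingual use case; the server must be running and the model pulled beforehand, and the output should be checked rather than trusted.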

Possible Applications of Notux 8X7B

Possible applications of Notux 8X7B include creative or informational text generation, code generation and debugging in software development, and communication support across German, English, Spanish, French, and Italian. Its adaptability and language range could make it useful in dynamic environments. Because none of these applications has been validated here, they are best suited to non-critical domains, and each should be evaluated carefully before deployment.

- text generation for various tasks

- code generation and debugging

- multilingual communication support

Quantized Versions & Hardware Requirements of Notux 8X7B

The medium q4 version of Notux 8X7B is listed as needing a GPU with 12GB–24GB of VRAM, with at least 16GB recommended, which points to mid-range or higher graphics cards. A multi-core CPU and at least 32GB of system RAM are also recommended. These figures are approximate: actual requirements depend on the quantization level, the context length in use, and how many layers are offloaded to the CPU.

- fp16, q2, q3, q4, q5, q6, q8
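
A rough way to sanity-check these figures is to multiply the parameter count by the bits stored per weight. The sketch below assumes about 46.7B total parameters (all eight experts must sit in memory even though only two run per token), approximate llama.cpp-style bits-per-weight values for each quantization level, and the Mixtral-8x7B attention shape for the KV cache; all of these are assumptions, and real runtimes add overhead beyond weights and cache.

```python
TOTAL_PARAMS = 46.7e9  # assumed total parameter count of the 8x7B MoE

# Approximate effective bits per weight (assumption: llama.cpp-style formats;
# real files mix precisions across tensors, so actual sizes differ somewhat)
BITS_PER_WEIGHT = {
    "fp16": 16.0, "q8": 8.5, "q6": 6.56, "q5": 5.5,
    "q4": 4.5, "q3": 3.44, "q2": 2.56,
}

# KV-cache shape, assuming the Mixtral-8x7B config
LAYERS, KV_HEADS, HEAD_DIM = 32, 8, 128


def weight_gb(quant: str) -> float:
    """Approximate size of the weights alone, in decimal GB."""
    return TOTAL_PARAMS * BITS_PER_WEIGHT[quant] / 8 / 1e9


def kv_cache_gb(tokens: int = 4096, bytes_per_value: int = 2) -> float:
    """Keys plus values for every layer and token (fp16 by default)."""
    return 2 * LAYERS * KV_HEADS * HEAD_DIM * bytes_per_value * tokens / 1e9


for q in BITS_PER_WEIGHT:
    print(f"{q:>4}: ~{weight_gb(q):5.1f} GB weights "
          f"+ ~{kv_cache_gb():.2f} GB KV cache at 4k tokens")
```

By this estimate the q4 weights alone come to roughly 26 GB, so a 16GB–24GB card would likely hold only part of the model on the GPU and offload the remaining layers to system RAM, which is consistent with the 32GB RAM recommendation.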

Conclusion

Notux 8X7B is a large language model from Argilla with an 8x7B Mixture-of-Experts architecture, released under the MIT License and focused on human-centric fine-tuning techniques. As part of the Notus family, it uses DPO (Direct Preference Optimization) to improve alignment through preference-based training, with the goal of better collaboration between AI systems and human users.

References

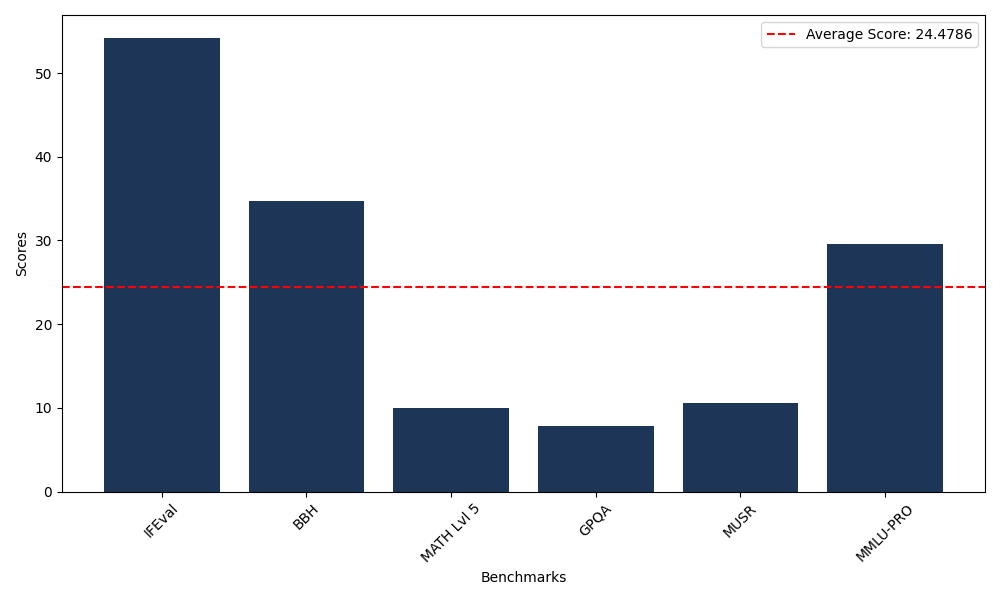

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 54.22 |
| BIG-Bench Hard (BBH) | 34.76 |
| MATH, Level 5 problems (MATH Lvl 5) | 9.97 |
| Graduate-Level Google-Proof Q&A (GPQA) | 7.83 |
| Multistep Soft Reasoning (MuSR) | 10.53 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 29.56 |
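
For a single summary figure, one option is the unweighted mean of the six scores in the table. This is simple arithmetic on the reported numbers, not an official aggregate, and it treats every benchmark as equally important.

```python
# Scores copied from the table above
scores = {
    "IFEval": 54.22, "BBH": 34.76, "MATH Lvl 5": 9.97,
    "GPQA": 7.83, "MuSR": 10.53, "MMLU-PRO": 29.56,
}

# Unweighted mean: each benchmark counts equally regardless of its size
average = sum(scores.values()) / len(scores)
print(f"average: {average:.2f}")  # → average: 24.48
```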
