Nous Hermes2 Mixtral 8X7B - Model Details

Nous Hermes2 Mixtral 8X7B is a large language model developed by Nous Research, a company specializing in AI research. It uses an 8x7B mixture-of-experts configuration and is released under the Apache License 2.0, which permits open access and flexible reuse. Trained on about 1 million high-quality entries and refined with DPO, it targets a broad range of tasks and supports structured dialogues, covering both natural language understanding and generation.

Description of Nous Hermes2 Mixtral 8X7B

Nous Hermes 2 Mixtral 8x7B DPO is a flagship large language model developed by Nous Research, designed as an 8x7B parameter mixture of experts (MoE) architecture. It is trained on over 1 million high-quality entries, primarily generated by GPT-4, alongside diverse open-source datasets, enabling state-of-the-art performance across a wide range of tasks. The model combines supervised fine-tuning (SFT) with direct preference optimization (DPO), offering enhanced alignment and dialogue capabilities. An SFT-only version is also available for specific use cases. Its focus on structured dialogues and task efficiency makes it a versatile tool for complex natural language processing scenarios.
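To make "structured dialogues" concrete, here is a minimal sketch of how a multi-turn conversation might be serialized into a single prompt. It assumes the ChatML layout (`<|im_start|>role ... <|im_end|>`) that the Hermes 2 family is generally documented as using; this page does not name the format itself, so treat the delimiters as an assumption and check the model's own tokenizer template before relying on them. The helper name `to_chatml` is hypothetical.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Serialize a list of {"role", "content"} dicts into a ChatML-style prompt.

    Assumption: the model expects ChatML delimiters. Verify against the
    chat template shipped with the model you actually run.
    """
    parts = []
    for message in messages:
        # Each turn is wrapped in start/end markers with the role on the first line.
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave the assistant turn open so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


conversation = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": "Write a two-line cyberpunk poem."},
]
prompt = to_chatml(conversation)
print(prompt)
```

The system turn is where task instructions would usually go, with user and assistant turns alternating after it.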

Parameters & Context Length of Nous Hermes2 Mixtral 8X7B

Nous Hermes 2 Mixtral 8x7B DPO uses an 8x7B mixture-of-experts configuration. The label does not mean 56B parameters: the experts share the attention layers, and the base Mixtral 8x7B is reported at roughly 47B total parameters, of which about 13B are active for any given token. This places it in the mid-scale category of open-source LLMs, balancing capability against resource cost for moderately complex tasks. Its 4k context length is short, which suits concise interactions but limits work with long documents or extended conversations. The sparse design keeps inference cost closer to that of a much smaller dense model while retaining solid capabilities for structured dialogues and task-oriented processing, though the model may struggle with highly complex or lengthy inputs.

- Name: Nous Hermes 2 Mixtral 8x7B DPO

- Parameter_Size: 8x7b

- Context_Length: 4k

- Implications: Mid-scale performance for moderate tasks, short context limits for long texts.
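The "8x7b" label can be unpacked with some arithmetic. The sketch below counts parameters for a Mixtral-style mixture-of-experts transformer. Every architecture value in it (hidden size, layer count, expert count, and so on) is an assumption taken from the publicly released base Mixtral 8x7B configuration, not from this page, and the Hermes fine-tune may differ slightly (for example, through a few added vocabulary tokens). The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEConfig:
    # Assumed values from the base Mixtral 8x7B configuration.
    hidden_size: int = 4096
    intermediate_size: int = 14336
    num_layers: int = 32
    num_heads: int = 32
    num_kv_heads: int = 8        # grouped-query attention
    num_experts: int = 8
    experts_per_token: int = 2   # top-2 routing
    vocab_size: int = 32000


def count_params(cfg: MoEConfig, experts_counted: int) -> int:
    """Count weights, including `experts_counted` expert MLPs per layer."""
    head_dim = cfg.hidden_size // cfg.num_heads
    kv_dim = cfg.num_kv_heads * head_dim
    # Query and output projections are square; key and value are narrower.
    attention = 2 * cfg.hidden_size * cfg.hidden_size + 2 * cfg.hidden_size * kv_dim
    # Each expert is a gated MLP with three weight matrices.
    expert = 3 * cfg.hidden_size * cfg.intermediate_size
    router = cfg.hidden_size * cfg.num_experts
    norms = 2 * cfg.hidden_size
    per_layer = attention + experts_counted * expert + router + norms
    # Input embeddings plus an untied output head, plus the final norm.
    embeddings = 2 * cfg.vocab_size * cfg.hidden_size
    return cfg.num_layers * per_layer + embeddings + cfg.hidden_size


cfg = MoEConfig()
total = count_params(cfg, cfg.num_experts)
active = count_params(cfg, cfg.experts_per_token)
print(f"total parameters:  {total / 1e9:.1f}B")
print(f"active per token:  {active / 1e9:.1f}B")
```

Under these assumptions the total comes out well below 8 x 7B = 56B, because only the expert MLPs are replicated eight times while attention and embeddings are shared.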

Possible Intended Uses of Nous Hermes2 Mixtral 8X7B

Nous Hermes 2 Mixtral 8x7B DPO could support a range of applications, though each needs further exploration to confirm its effectiveness. Its size and its training on high-quality data suggest it may suit code generation for data visualization, where structured and precise output matters. It could also be used for creative writing, such as cyberpunk poems, drawing on its handling of style and narrative. A third candidate is backtranslation, in which the model turns an input text into a prompt that could have produced it, which may help with iterative refinement of content. These uses illustrate the model's adaptability, but all of them call for careful testing and validation before deployment.

- Name: Nous Hermes 2 Mixtral 8x7B DPO

- Intended_Uses: code generation for data visualization, creative writing of cyberpunk poems, backtranslation to create prompts from input text

- Purpose: exploratory applications requiring creativity, code generation, or text transformation

- Important Information: these uses are potential and require thorough investigation before practical implementation.

Possible Applications of Nous Hermes2 Mixtral 8X7B

Nous Hermes 2 Mixtral 8x7B DPO could be applied to code generation for data visualization, where its structured output might improve interactive tools. It could also be used for creative writing tasks, such as generating cyberpunk poems, drawing on its handling of style and narrative. Backtranslation workflows that derive or refine prompts from existing text are another candidate, with potential value in iterative content creation. Its role in dialogue systems that require structured interactions is also worth exploring. Each application must be thoroughly evaluated and tested before use.

- Name: Nous Hermes 2 Mixtral 8x7B DPO

- Possible Applications: code generation for data visualization, creative writing of cyberpunk poems, backtranslation to create prompts from input text

- Important Information: these are potential uses that require rigorous evaluation before practical implementation.

Quantized Versions & Hardware Requirements of Nous Hermes2 Mixtral 8X7B

The q4 version of Nous Hermes 2 Mixtral 8x7B DPO is a 4-bit quantized variant that trades some precision for a much smaller memory footprint. It is listed as requiring at least 16GB of VRAM, with 24GB or more recommended. At roughly 4 bits per weight, the weights of a model with about 47B total parameters occupy well over 16GB, so at the lower figure some layers will likely have to be offloaded to system memory, at a cost in speed. The q4 version still needs far less memory than higher-precision formats such as fp16, which brings it within reach of high-memory consumer GPUs, but users should verify compatibility with their own hardware. At least 32GB of system memory and adequate cooling are also advisable.

- Quantized Versions: fp16, q2, q3, q4, q5, q6, q8

- Name: Nous Hermes 2 Mixtral 8x7B DPO

- Important Information: Hardware requirements vary by quantization; ensure compatibility before deployment.
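A rough way to compare the quantized versions listed above is to estimate how much memory the weights alone occupy. The sketch below assumes about 46.7B total parameters (the figure reported for the base Mixtral 8x7B, not stated on this page) and treats each quantization name as its nominal bit width. Real quantized files typically use somewhat more bits per weight, and inference also needs room for the KV cache and activations, so these numbers are lower bounds rather than hardware requirements. The names `TOTAL_PARAMS`, `NOMINAL_BITS`, and `weight_gb` are hypothetical.

```python
# Assumption: total parameter count of the base Mixtral 8x7B.
TOTAL_PARAMS = 46.7e9

# Assumption: nominal bits per weight implied by each quantization name.
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gb(bits_per_weight, n_params=TOTAL_PARAMS):
    """Return the approximate size of the weights in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


for name, bits in NOMINAL_BITS.items():
    print(f"{name:>4}: ~{weight_gb(bits):.1f} GB of weights")
```

Comparing an estimate against available VRAM gives a quick first indication of whether a given version can run fully on the GPU or will need partial offloading to system memory.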

Conclusion

Nous Hermes 2 Mixtral 8x7B DPO is an 8x7B mixture-of-experts language model trained on about 1 million high-quality entries, refined with DPO for better dialogue and task performance, and released under the Apache License 2.0. It has a 4k context length and is geared toward structured interactions, which makes it a candidate for a variety of applications, each of which should be evaluated carefully before use.

References

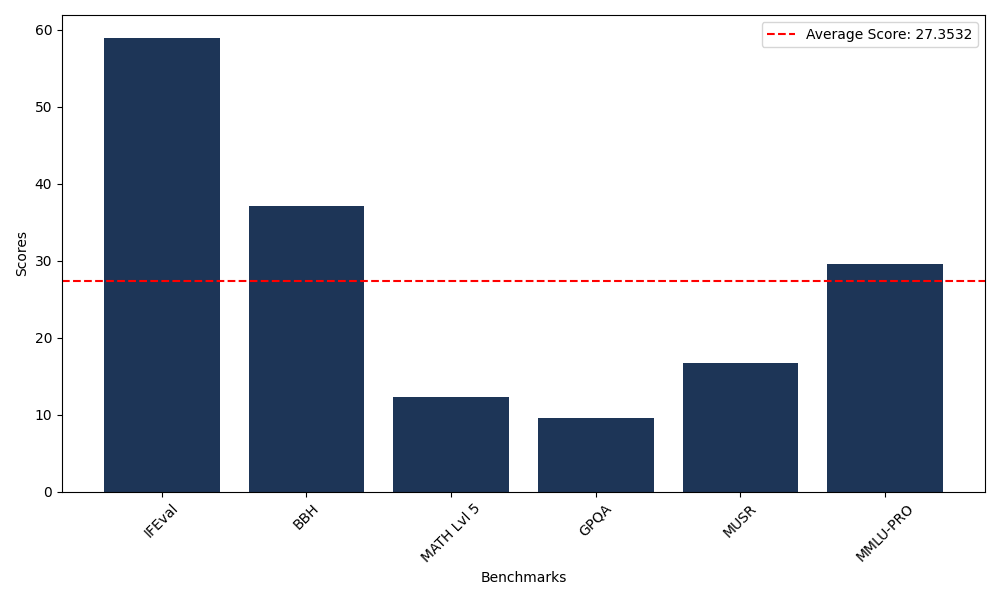

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 58.97 |
| BIG-Bench Hard (BBH) | 37.11 |
| Mathematical Reasoning Test (MATH Lvl 5) | 12.24 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.51 |
| Multistep Soft Reasoning (MuSR) | 16.68 |
| Massive Multitask Language Understanding (MMLU-PRO) | 29.62 |
