Openthinker 7B - Model Details

Openthinker 7B is a 7-billion-parameter large language model developed by Bespoke Labs. It is released under the Apache License 2.0, which permits both research and commercial use. Built on the Qwen2.5 foundation, the model is fine-tuned with the aim of outperforming DeepSeek-R1 on specific benchmarks.

Description of Openthinker 7B

Openthinker 7B is a fine-tuned version of Qwen/Qwen2.5-7B-Instruct, trained on the OpenThoughts-114k dataset, which is derived from DeepSeek-R1. It is fully open-source: the weights, datasets, and code are all publicly accessible. Training ran for 20 hours on four 8xH100 nodes with a fixed set of hyperparameters. The release aims to improve performance on open benchmarks while keeping the training process transparent and reproducible.
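The training footprint quoted above can be turned into a GPU-hour figure with simple arithmetic. The sketch below assumes the hardware description means 4 nodes with 8 H100 GPUs each. The function and constant names are illustrative and do not come from the project's training code.

```python
# Assumption: the hardware description is read as 4 nodes with 8 H100 GPUs each.
NODES = 4
GPUS_PER_NODE = 8
WALL_CLOCK_HOURS = 20


def gpu_hours(nodes: int, gpus_per_node: int, hours: float) -> float:
    """Return total accelerator time: number of GPUs multiplied by wall-clock hours."""
    return nodes * gpus_per_node * hours


total_gpus = NODES * GPUS_PER_NODE
total_gpu_hours = gpu_hours(NODES, GPUS_PER_NODE, WALL_CLOCK_HOURS)
print(f"{total_gpus} GPUs x {WALL_CLOCK_HOURS} h = {total_gpu_hours} GPU-hours")
```

Under that reading, the run used 32 GPUs for a total of 640 GPU-hours.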

Parameters & Context Length of Openthinker 7B

Openthinker 7B has 7B parameters, which places it in the small to mid-scale range of open-source LLMs and keeps it practical for tasks with moderate computational budgets. Its 1k context length is short, so it suits concise interactions better than extended text processing. The design trades capacity for accessibility, favoring speed and simplicity where resources are constrained.

- Parameter Size: 7B (Implications: efficient for simple tasks, suited to resource-constrained environments)

- Context Length: 1k (Implications: limited to short texts, suited to brief queries or focused outputs)
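A short context window means prompts need a budget check before they are sent. The sketch below is a rough illustration only: it assumes "1k" means 1,024 tokens and uses a 4-characters-per-token heuristic for English text instead of the model's real tokenizer. The helper names and the default output reservation are hypothetical.

```python
CONTEXT_TOKENS = 1024   # assumption: "1k" read as 1,024 tokens
CHARS_PER_TOKEN = 4     # assumption: rough heuristic for English, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count from the character count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_output: int = 256) -> bool:
    """Check whether the prompt leaves `reserved_for_output` tokens free for the reply."""
    return estimate_tokens(prompt) + reserved_for_output <= CONTEXT_TOKENS


question = "What is the derivative of x**2 with respect to x?"
print(estimate_tokens(question), fits_in_context(question))
```

For an accurate count, replace `estimate_tokens` with the tokenizer that ships with the model. The heuristic only gives an order-of-magnitude check.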

Possible Intended Uses of Openthinker 7B

Openthinker 7B, with 7B parameters and a 1k context length, is designed for tasks that call for efficiency and accessibility. One possible use is math problem solving, where it might assist with step-by-step reasoning or pattern recognition, though this would need validation through specific testing. Code generation and debugging is another possible application: the model might help users draft or refine code snippets, but its effectiveness in complex programming scenarios has not been thoroughly explored. General knowledge question answering is a third possible use, with the model giving concise answers to factual questions, though its accuracy and depth would require further evaluation. These possible uses point to the model’s flexibility, and each needs careful investigation before real-world deployment.

- Math problem solving

- Code generation and debugging

- General knowledge question answering

Possible Applications of Openthinker 7B

Openthinker 7B is a 7B-parameter model with a 1k context length, with possible applications in areas where efficiency and accessibility matter most. In math problem solving, it might assist with structured reasoning or pattern identification, though this would require validation through specific testing. In code generation and debugging, it might help users draft or refine code snippets, but its effectiveness in complex programming scenarios has not been thoroughly explored. In general knowledge question answering, it could give concise factual responses, though accuracy and depth would need further assessment. Creative writing or content generation might also be viable, depending on the model’s ability to maintain coherence and relevance. These possible applications point to the model’s adaptability, and each requires rigorous evaluation before deployment.

- Openthinker 7B: Possible application in math problem solving

- Openthinker 7B: Possible application in code generation and debugging

- Openthinker 7B: Possible application in general knowledge question answering

- Openthinker 7B: Possible application in creative writing or content generation

Quantized Versions & Hardware Requirements of Openthinker 7B

The q4 quantization of Openthinker 7B offers a balance between precision and performance. It requires a GPU with at least 16GB of VRAM and 32GB of system memory for smooth operation, which makes it suitable for mid-range hardware, although exact compatibility depends on the specific GPU model and its available VRAM. fp16 and q8 versions are also available for different performance and resource needs.

- Available quantizations: fp16, q4, q8
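To compare the three variants, it helps to estimate how much memory the weights alone occupy at each precision. The sketch below assumes a nominal 7 billion parameters and nominal widths of 16, 8, and 4 bits per weight. Real quantized file formats carry extra per-block metadata, and running the model also needs memory for the KV cache, activations, and runtime overhead, so these figures are lower bounds and not hardware requirements.

```python
PARAMS = 7e9  # nominal parameter count, taken from the "7B" figure

# Assumption: nominal bits per weight; real quantized formats add some overhead.
BITS_PER_WEIGHT = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Return the approximate size of the weights in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


estimates = {name: weight_memory_gb(PARAMS, bits) for name, bits in BITS_PER_WEIGHT.items()}
for name, gb in estimates.items():
    print(f"{name}: ~{gb:.1f} GB of weights")
```

Under these assumptions, the weights take about 14 GB at fp16, 7 GB at q8, and 3.5 GB at q4.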

Conclusion

Openthinker 7B is a 7B-parameter open-source language model fine-tuned from Qwen2.5-7B-Instruct on the OpenThoughts-114k dataset, with the aim of outperforming DeepSeek-R1 on specific benchmarks. Its weights, code, and datasets are available under the Apache License 2.0. The emphasis on efficiency and accessibility makes it suitable for research and for applications that require transparency and reproducibility.

References

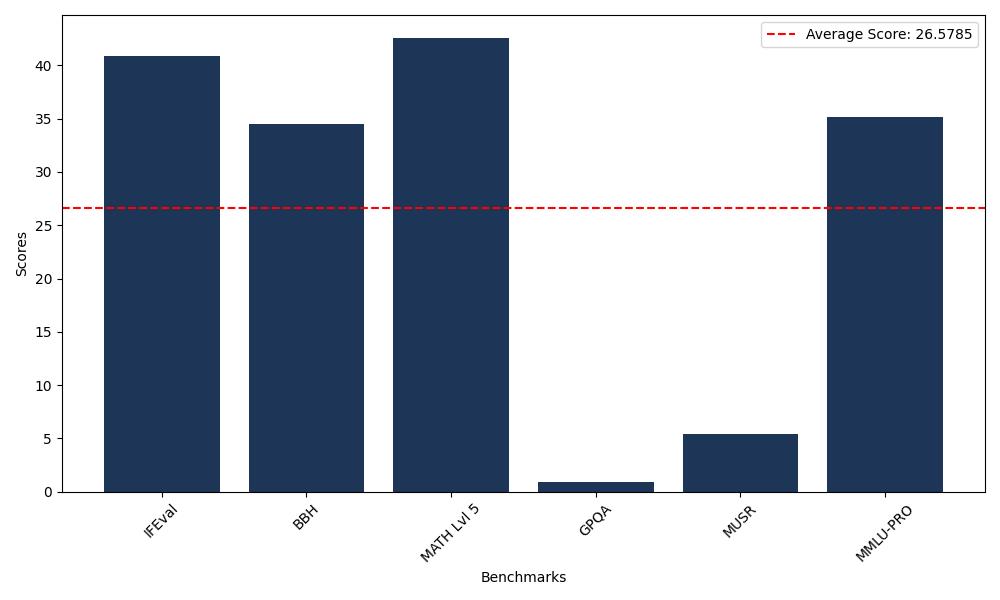

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 40.89 |
| BIG-Bench Hard (BBH) | 34.51 |
| MATH, Level 5 (MATH Lvl 5) | 42.60 |
| Graduate-Level Google-Proof Q&A (GPQA) | 0.89 |
| Multistep Soft Reasoning (MuSR) | 5.42 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 35.16 |
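
A single summary number can make the table easier to compare against other models. The sketch below computes an unweighted mean of the six scores listed above. Equal weighting is an assumption made here for illustration; the source does not state how, or whether, the scores are aggregated.

```python
from statistics import mean

# Scores copied from the benchmark table above.
scores = {
    "IFEval": 40.89,
    "BBH": 34.51,
    "MATH Lvl 5": 42.60,
    "GPQA": 0.89,
    "MUSR": 5.42,
    "MMLU-PRO": 35.16,
}

# Assumption: every benchmark carries equal weight.
average = mean(list(scores.values()))
best = max(scores, key=scores.get)
worst = min(scores, key=scores.get)
print(f"Unweighted mean: {average:.2f} (best: {best}, worst: {worst})")
```

The mean comes out at about 26.58, pulled down mainly by the GPQA and MUSR results.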
