Orca Mini 70B - Model Details

Orca Mini 70B is a large language model with 70 billion parameters, developed by the community maintainer Psmathur-Orca. It is released under the Creative Commons Attribution Non Commercial Share Alike 4.0 International (CC-BY-NC-SA-4.0) license. This license permits sharing and adaptation only if users give attribution, do not use the model commercially, and distribute derivatives under the same license. Built on the Llama 2 architecture, Orca Mini 70B targets general-purpose natural language processing, balancing capability against deployment cost.

Description of Orca Mini 70B

Orca Mini 70B is a Llama2-70b model fine-tuned on Orca-style datasets. With 4-bit quantization it can run on GPUs with about 45GB of VRAM. It has been evaluated on benchmarks such as ARC, HellaSwag, and MMLU, with reported results across a range of tasks. Quantization lowers the hardware bar for a 70B model, although 45GB of VRAM still exceeds what most single consumer GPUs offer.
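To make the 4-bit figure concrete: at 4 bits per weight, 70 billion weights occupy about 35 GB (70e9 × 0.5 bytes). The remainder of a roughly 45GB budget plausibly goes to per-block scales, the KV cache, and runtime overhead. The sketch below shows a generic symmetric 4-bit scheme. Each block of weights shares one floating-point scale, and each weight becomes an integer in [-8, 7]. This is an illustrative assumption, not the specific quantization format used for any published Orca Mini 70B file.

```python
# Toy illustration of 4-bit quantization: each weight in a block is mapped to
# an integer in [-8, 7], with one floating-point scale shared by the block.
# Assumption: a generic symmetric absmax scheme; real formats differ in detail.


def quantize_4bit(block: list[float]) -> tuple[float, list[int]]:
    """Return (scale, codes) for a non-empty block; codes are 4-bit signed ints."""
    absmax = max(abs(w) for w in block)
    scale = absmax / 7 if absmax > 0 else 1.0  # largest magnitude maps to +/-7
    codes = [max(-8, min(7, round(w / scale))) for w in block]
    return scale, codes


def dequantize_4bit(scale: float, codes: list[int]) -> list[float]:
    """Approximate reconstruction of the original weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.1, 0.0]
    scale, codes = quantize_4bit(weights)
    print("scale:", scale, "codes:", codes)
    print("reconstructed:", dequantize_4bit(scale, codes))
```

Each reconstructed weight differs from the original by at most half the block's scale. This rounding error is the precision traded for an eightfold size reduction versus 32-bit floats (fourfold versus fp16).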

Parameters & Context Length of Orca Mini 70B

Orca Mini 70B has 70b parameters, which places it in the very large model category. It handles complex tasks well but demands significant computational resources. Its 4k-token context length counts as short. This suits concise tasks but limits how much text the model can process in a single pass, so longer documents must be split or summarized first. The parameter count supports more advanced reasoning and versatility, while the short context keeps per-request memory use modest. Together, these choices favor capability on focused tasks over long-document processing.

- Parameter Size: 70b

- Context Length: 4k
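Because the window is short, longer inputs have to be split before they reach the model. The following is a minimal sketch. It assumes "4k" means 4096 tokens (the Llama 2 window) and that token IDs come from the model's tokenizer, which is not shown. `split_into_windows` is a hypothetical helper, not part of any library. It reserves room for the generated output and can optionally repeat tokens across chunk boundaries.

```python
# Sketch: fit a long token sequence into a 4k-token window.
# Assumptions: "4k" means 4096 tokens, and the caller already has token IDs
# from the model's tokenizer. split_into_windows is a hypothetical helper.

CONTEXT_LEN = 4096


def split_into_windows(tokens: list[int], max_new_tokens: int,
                       overlap: int = 0) -> list[list[int]]:
    """Split tokens into chunks that leave room for max_new_tokens of output.

    The last `overlap` tokens of each chunk are repeated at the start of the
    next chunk, so some context carries across boundaries.
    """
    budget = CONTEXT_LEN - max_new_tokens  # input tokens allowed per request
    if budget <= overlap:
        raise ValueError("max_new_tokens + overlap must be smaller than the window")
    step = budget - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + budget])
        if start + budget >= len(tokens):  # this chunk reached the end
            break
    return chunks


if __name__ == "__main__":
    chunks = split_into_windows(list(range(10_000)), max_new_tokens=512)
    print([len(c) for c in chunks])
```

For a 10,000-token input with 512 tokens reserved for output, this produces three chunks of at most 3,584 tokens each. A nonzero `overlap` costs extra requests but reduces the chance of cutting a sentence's context in half.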

Possible Intended Uses of Orca Mini 70B

Orca Mini 70B is intended for text generation, code generation, and question answering, with potential uses in content creation, software development, and interactive learning. Its 70b parameters suggest it can handle tasks that need nuanced understanding or structured output. Its 4k context length and hardware demands are the main constraints. Typical uses might include drafting documents, helping with coding problems, or explaining concepts. Each should be tested and validated in the specific implementation before being relied on.

- text generation

- code generation

- question answering
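All three uses go through the same instruction-style prompt. The sketch below assumes the `### System:` / `### User:` / `### Assistant:` layout and the default system message shown on the Orca Mini v3 model cards. Confirm the template for the exact checkpoint you use, since a mismatched template can degrade output quality. `build_prompt` and the sample instructions are hypothetical.

```python
# Sketch of a prompt builder for the three listed tasks.
# Assumption: the "### System / ### User / ### Assistant" layout and default
# system message follow the Orca Mini v3 model cards; verify for your checkpoint.

DEFAULT_SYSTEM = (
    "You are an AI assistant that follows instruction extremely well. "
    "Help as much as you can."
)


def build_prompt(user_message: str, system: str = DEFAULT_SYSTEM) -> str:
    """Return one prompt string that ends where the model should continue."""
    return f"### System:\n{system}\n\n### User:\n{user_message}\n\n### Assistant:\n"


# One hypothetical instruction per listed use.
EXAMPLES = {
    "text generation": "Write a two-sentence product description for a hiking boot.",
    "code generation": "Write a Python function that reverses a string.",
    "question answering": "What is the capital of Australia?",
}

if __name__ == "__main__":
    for task, instruction in EXAMPLES.items():
        print(f"--- {task} ---")
        print(build_prompt(instruction))
```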

Possible Applications of Orca Mini 70B

Possible applications of Orca Mini 70B include generating creative content, assisting with coding tasks, explaining complex topics, powering interactive learning tools, and drafting technical documentation. The same constraints apply. The 4k context limits how much source material fits in one request, and each application should be validated against its specific requirements before deployment.

- text generation

- code generation

- question answering

- technical documentation
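For the technical documentation case, one way to call the model is through a local inference server. This sketch assumes the model is served by Ollama on its default port (11434) under the tag `orca-mini:70b`. Neither detail is stated on this page, so adjust both to your setup. The sketch uses Ollama's `/api/generate` endpoint with streaming disabled and `num_ctx` set to the 4k window. `make_request` and `generate` are hypothetical helper names.

```python
# Sketch: ask a locally served copy of the model to draft documentation.
# Assumptions (not from this page): the model is served by Ollama on its
# default port under the tag "orca-mini:70b". Adjust both to your setup.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "orca-mini:70b"  # assumed tag


def make_request(prompt: str, num_ctx: int = 4096) -> urllib.request.Request:
    """Build (but do not send) a non-streaming /api/generate request."""
    payload = {
        "model": MODEL_TAG,
        "prompt": prompt,
        "stream": False,                  # return one JSON object, not a stream
        "options": {"num_ctx": num_ctx},  # context size: the 4k window
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(make_request(prompt)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `generate("Write a docstring for: def add(a, b): return a + b")` returns the model's draft as a string. Building the request separately keeps the payload easy to inspect and test offline.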

Quantized Versions & Hardware Requirements of Orca Mini 70B

Orca Mini 70B's medium q4 version requires at least 48GB of VRAM to balance precision and performance. Suitable setups include a single 48GB workstation card such as the RTX 6000 Ada, an 80GB A100, or two 24GB RTX 4090s. It also needs at least 32GB of system memory and adequate cooling. This version suits workloads that need a 70B model but cannot accommodate the fp16 weights, and hardware availability is its main limitation. Before deployment, verify that your GPUs and inference runtime support the chosen quantization and that enough memory remains for stable operation.

- fp16, q2, q3, q4, q5, q6, q8
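The listed levels trade file size against precision. The rough fit check below makes three assumptions. It uses 70e9 parameters and nominal bits per weight; actual q-formats store extra per-block scale data, so real files are somewhat larger. It also adds a hypothetical flat 10 GB allowance for the KV cache and runtime, chosen so the q4 estimate matches the 45GB figure quoted earlier. Treat the result as a first filter, not a guarantee.

```python
# Sketch: which of the listed quantization levels could fit a VRAM budget?
# Assumptions: 70e9 parameters, nominal bits per weight (real q-formats add
# per-block scale data), and a hypothetical fixed runtime allowance.

PARAMS = 70e9
NOMINAL_BITS = {"fp16": 16, "q2": 2, "q3": 3, "q4": 4, "q5": 5, "q6": 6, "q8": 8}
OVERHEAD_GB = 10.0  # hypothetical allowance for KV cache and runtime


def estimated_gb(level: str) -> float:
    """Weight storage in decimal GB plus the fixed overhead allowance."""
    return PARAMS * NOMINAL_BITS[level] / 8 / 1e9 + OVERHEAD_GB


def levels_that_fit(vram_gb: float) -> list[str]:
    """Return the quantization levels whose estimate does not exceed vram_gb."""
    return [lvl for lvl in NOMINAL_BITS if estimated_gb(lvl) <= vram_gb]


if __name__ == "__main__":
    for vram in (24, 48, 80):
        print(f"{vram} GB VRAM -> {levels_that_fit(vram)}")
    # 24 GB VRAM -> []
    # 48 GB VRAM -> ['q2', 'q3', 'q4']
    # 80 GB VRAM -> ['q2', 'q3', 'q4', 'q5', 'q6', 'q8']
```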

Conclusion

Orca Mini 70B is a 70b-parameter language model based on Llama 2 and fine-tuned on Orca-style datasets. Support for 4-bit quantization substantially reduces the GPU memory needed for deployment. Its 4k context length suits tasks that require nuanced understanding and structured output over relatively short inputs.

References

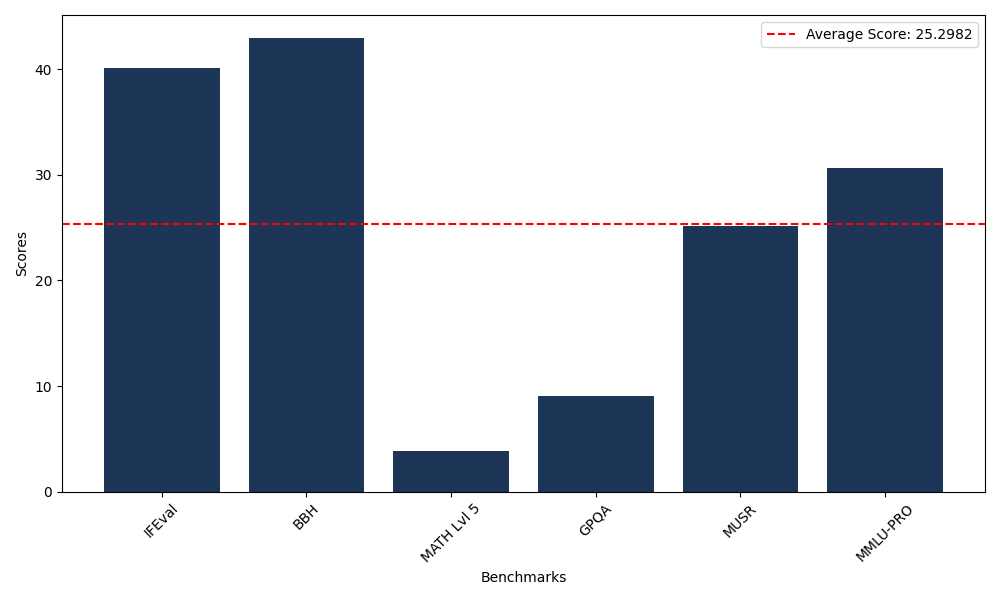

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction Following Evaluation (IFEval) | 40.15 |
| Big-Bench Hard (BBH) | 42.98 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 3.85 |
| Graduate-Level Google-Proof Q&A (GPQA) | 9.06 |
| Multistep Soft Reasoning (MUSR) | 25.12 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 30.64 |
