Orca2 7B - Model Details

Orca2 7B is a large language model developed by Microsoft with 7 billion parameters, released under the Microsoft Research License Terms (MSRLT). It focuses on enhancing reasoning abilities through fine-tuning on synthetic data.

Description of Orca2 7B

Orca 2 is a research-focused large language model developed by Microsoft to study how the reasoning capabilities of Small Language Models (SLMs) can be improved. It is a fine-tuned version of LLaMA-2, optimized for tasks such as reasoning, reading comprehension, math problem solving, and text summarization. Its synthetic training data was moderated using Microsoft Azure content filters. It is not optimized for chat interactions and is best used after further fine-tuning for chat or for a specific task. Its performance depends heavily on the distribution of the tuning data.

Parameters & Context Length of Orca2 7B

Orca2 7B has 7 billion parameters and a 4k (4,096-token) context window, which places it among small-scale, short-context models. The modest parameter count keeps inference fast and resource-efficient, though it limits performance on highly complex tasks compared with larger models. The 4k window accommodates short to moderate inputs, such as a question with a few pages of supporting text, but longer documents must be truncated or split into chunks. These trade-offs suit applications that prioritize efficiency over scale or long-context processing.

- Name: Orca2 7B

- Parameter Size: 7b (Small Models: fast and resource-efficient, suitable for simple tasks)

- Context Length: 4k (Short Contexts: suitable for short tasks, limited in long texts)

- Implications: Efficient for targeted tasks but constrained by its size and context limitations.
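The context window also has a memory cost at inference time, because every processed token keeps one key vector and one value vector in each attention layer. The sketch below estimates that KV-cache size. It assumes Orca2 7B keeps the LLaMA-2 7B architecture (32 layers, hidden size 4,096, standard multi-head attention) and stores the cache in fp16; the function name `kv_cache_bytes` is hypothetical.

```python
# Sketch: estimate KV-cache memory for a given number of context tokens.
# ASSUMPTION: LLaMA-2 7B shape (32 layers, hidden size 4096, standard
# multi-head attention) with keys/values stored in fp16 (2 bytes each).

def kv_cache_bytes(context_len: int, n_layers: int = 32, hidden: int = 4096,
                   bytes_per_value: int = 2, batch: int = 1) -> int:
    """Bytes needed for keys + values across all layers for `context_len` tokens."""
    # Factor 2: one key vector and one value vector per token per layer.
    return 2 * n_layers * context_len * hidden * bytes_per_value * batch


per_token = kv_cache_bytes(1)
full_window = kv_cache_bytes(4096)
print(f"per token: {per_token / 2**20:.2f} MiB, full 4k window: {full_window / 2**30:.2f} GiB")
```

Under these assumptions each token costs about 0.5 MiB, so a full 4,096-token window adds roughly 2 GiB on top of the model weights; shorter prompts reduce this proportionally.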

Possible Intended Uses of Orca2 7B

Orca2 7B is designed for research on small language models (SLMs), for assessing model capabilities, and for informing the development of better frontier models. Its 7b parameter size and 4k context length make it practical for exploring how synthetic data can improve reasoning and task-specific performance. Research directions include analyzing how fine-tuning strategies affect model behavior, measuring the effect of training-data distribution, testing scalability in controlled environments, evaluating generalization across domains, optimizing training methodologies, and comparing performance against other models. Any such use should be validated against the specific goals and constraints of the project.

- Intended Uses: research on SLMs, assessing model capabilities, building better frontier models

- Name: Orca2 7B

- Purpose: experimental analysis, capability evaluation, model development

- Important Info: Designed for research, not optimized for direct deployment or specific real-world tasks.

Possible Applications of Orca2 7B

Beyond core SLM research, Orca2 7B could serve as a testbed for analyzing the impact of synthetic data on reasoning, experimenting with fine-tuning strategies, and testing scalability in controlled environments. Other candidate applications include evaluating how different data distributions affect performance, comparing model behavior across domains, supporting educational work on language model development, generating content for non-critical tasks, and assisting academic research. Each application requires thorough evaluation to confirm it fits the specific goals and constraints involved.

- Name: Orca2 7B

- Applications: research on SLMs, assessing model capabilities, building better frontier models

- Important Info: Designed for experimental and academic purposes, not for direct deployment in high-stakes scenarios.

Quantized Versions & Hardware Requirements of Orca2 7B

The mid-precision q4 version of Orca2 7B requires a GPU with at least 8GB of VRAM for efficient operation, though actual needs vary with workload and quantization level. At least 32GB of system memory and adequate cooling are also recommended, and hardware compatibility should be verified before research or experimental use. The q4 version balances precision and performance, making it suitable for mid-range hardware.

- Quantized Versions: fp16, q2, q3, q4, q5, q6, q8

- Name: Orca2 7B

- Important Info: Hardware requirements depend on quantization and model size; thorough testing is advised.
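To see why the quantization level drives hardware needs, the weight footprint can be estimated as parameters × bits per weight ÷ 8 bytes. The sketch below applies that formula to each listed version. It is a rough lower bound under stated assumptions: a nominal 7.0 × 10^9 parameters, the bit width implied by each label (real quantized formats also store scaling factors, so files run somewhat larger), and a hypothetical fixed 2 GiB allowance for KV cache and runtime buffers in the illustrative `fits` helper.

```python
# Sketch: lower-bound estimate of weight memory for each quantization level.
# ASSUMPTIONS: 7.0e9 parameters (nominal) and the nominal bits per weight implied
# by each label. Real quantized files also store scales/metadata, so actual sizes
# run larger. The 2 GiB overhead in `fits` is a hypothetical allowance.

N_PARAMS = 7.0e9
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def weight_gib(bits_per_weight: float, n_params: float = N_PARAMS) -> float:
    """Approximate weight storage in GiB: params * bits / 8 bytes."""
    return n_params * bits_per_weight / 8 / 2**30


def fits(label: str, vram_gib: float, overhead_gib: float = 2.0) -> bool:
    """True if weights plus a fixed overhead allowance fit in `vram_gib`."""
    return weight_gib(NOMINAL_BITS[label]) + overhead_gib <= vram_gib


for label, bits in NOMINAL_BITS.items():
    print(f"{label:>4}: ~{weight_gib(bits):5.2f} GiB, fits 8 GiB: {fits(label, 8.0)}")
```

With these assumptions the q4 weights come to about 3.3 GiB, leaving headroom on an 8GB card, while fp16 (about 13 GiB) and q8 (about 6.5 GiB plus overhead) do not fit. Actual limits depend on the runtime, so measured memory use should take precedence over this estimate.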

Conclusion

Orca2 7B is a 7-billion-parameter large language model developed by Microsoft to enhance reasoning capabilities through fine-tuning on synthetic data; it is designed for research and experimentation rather than direct deployment. It is available in multiple quantized versions, including q4, which balances precision and performance and runs on mid-range hardware with at least 8GB of VRAM.

References

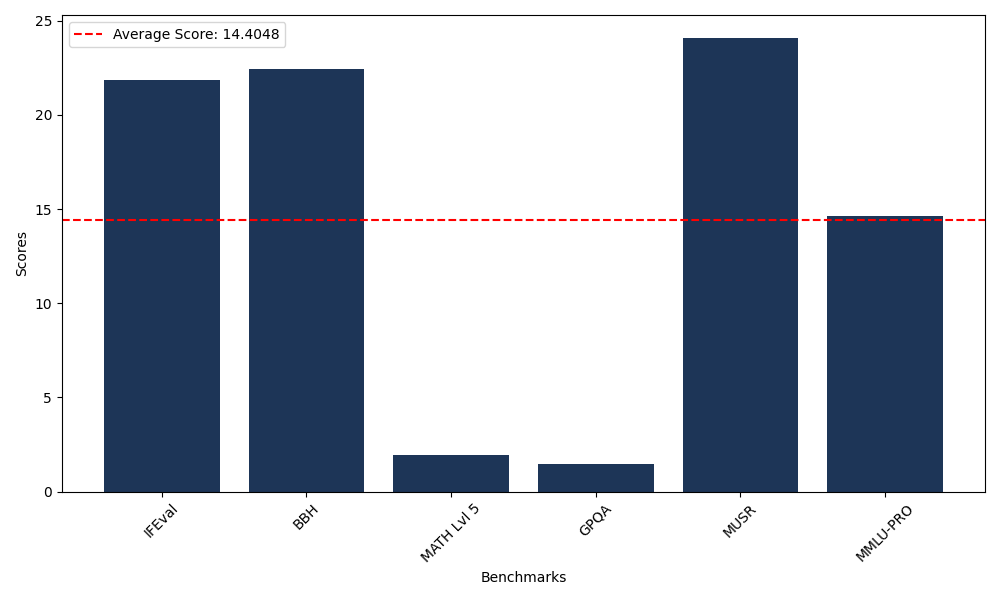

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 21.83 |
| BIG-Bench Hard (BBH) | 22.43 |
| MATH competition problems, Level 5 (MATH Lvl 5) | 1.96 |
| Graduate-Level Google-Proof Q&A (GPQA) | 1.45 |
| Multistep Soft Reasoning (MuSR) | 24.09 |
| Massive Multitask Language Understanding, Professional (MMLU-PRO) | 14.65 |
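One quick way to summarize the benchmark table is an unweighted mean of the six scores. The sketch below assumes all scores share the same 0 to 100 scale, which the table does not state; it is a convenience summary, not an official aggregate.

```python
# Sketch: summarize the benchmark table with an unweighted mean.
# ASSUMPTION: all six scores are on a common 0-100 scale, so averaging is
# meaningful; this is not an official leaderboard aggregate.
from statistics import mean

SCORES = {
    "IFEval": 21.83,
    "BBH": 22.43,
    "MATH Lvl 5": 1.96,
    "GPQA": 1.45,
    "MuSR": 24.09,
    "MMLU-PRO": 14.65,
}

average = mean(list(SCORES.values()))
best = max(SCORES, key=SCORES.get)   # benchmark with the highest score
worst = min(SCORES, key=SCORES.get)  # benchmark with the lowest score
print(f"mean={average:.2f}, best={best}, worst={worst}")
```

Under that assumption the mean is about 14.4, with MuSR the strongest result and GPQA the weakest.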
