Phi 2.7B

Phi 2.7B (Phi-2) is a language model developed by Microsoft with 2.7 billion parameters, designed for common-sense reasoning and language understanding. It is released under the MIT License, which makes it available for a wide range of applications. Despite its compact size, the model targets strong comprehension and logical inference across diverse tasks.

Description of Phi 2.7B

Phi-2 is a Transformer-based model with 2.7 billion parameters, trained on a 250-billion-token dataset that combines synthetic NLP data with filtered web data. On benchmarks for common-sense reasoning, language understanding, and logical reasoning, it achieves near state-of-the-art performance among models with fewer than 13 billion parameters. It is intended for research on safety challenges such as toxicity reduction and bias mitigation, and it has not been fine-tuned with reinforcement learning from human feedback. The model supports question-answering, chat, and code-generation prompts but is not optimized for production use.

Parameters & Context Length of Phi 2.7B

Phi-2.7B has 2.7 billion parameters, placing it in the small to mid-scale range of open-source LLMs, a range that typically offers fast, resource-efficient inference for simpler tasks. Its 2k-token (2,048) context length is short, which suits concise interactions but limits how much text the model can process at once. The parameter count keeps training and inference efficient while preserving strong reasoning and language understanding, though the model may struggle with highly complex or data-intensive tasks. The short context rules out scenarios that need extensive context, such as long-document analysis or multi-turn conversations with a long history.

- Parameter Size: 2.7B

- Context Length: 2k
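
To make the context limit concrete, here is a minimal sketch of trimming a tokenized prompt so that the prompt plus the requested generation fits in a 2,048-token window. The function name `fit_to_context` and the keep-the-most-recent-tokens policy are assumptions for illustration, not part of any Phi-2 tooling.

```python
CONTEXT_LENGTH = 2048  # the "2k" context window, counted in tokens


def fit_to_context(token_ids, max_new_tokens, context_length=CONTEXT_LENGTH):
    """Keep the most recent tokens so prompt + generation fits the window.

    Hypothetical helper: it drops the oldest tokens first, which is one
    simple policy among several (summarizing old turns is another).
    """
    if not 0 <= max_new_tokens < context_length:
        raise ValueError("max_new_tokens must leave room for at least one prompt token")
    budget = context_length - max_new_tokens  # tokens available for the prompt
    return list(token_ids[-budget:])
```

With `max_new_tokens=256`, at most 1,792 prompt tokens survive; anything older is discarded, which is why long documents and long chat histories are a poor fit.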

Possible Intended Uses of Phi 2.7B

Phi-2.7B is a 2.7-billion-parameter model designed to accept prompts in QA, chat, and code formats. Its architecture can generate structured answers to questions, take part in conversational exchanges, and assist with coding tasks, but each of these uses should be validated through rigorous testing. The model’s emphasis on common-sense reasoning and language understanding could make it useful in educational tools, creative writing aids, or basic automation workflows. However, its 2k context length and lack of optimization for production environments mean that any use should be carefully evaluated for suitability. The MIT License allows flexible experimentation, but these applications remain speculative until thoroughly tested.

- qa format

- chat format

- code format
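
The three formats can be sketched as plain prompt builders. The templates below assume the `Instruct:`/`Output:` convention for QA, speaker-labelled turns for chat, and a function signature plus docstring for code, as described in the public Phi-2 model card; verify them against the card before relying on them. The function names are hypothetical.

```python
def qa_prompt(question: str) -> str:
    """QA format: an instruction followed by an empty Output slot."""
    return f"Instruct: {question}\nOutput:"


def chat_prompt(turns, next_speaker="Bob"):
    """Chat format: 'Speaker: text' lines, ending with the speaker to continue.

    `turns` is a list of (speaker, text) pairs; speaker names are arbitrary.
    """
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{next_speaker}:")
    return "\n".join(lines)


def code_prompt(signature: str, docstring: str) -> str:
    """Code format: a function header and docstring for the model to complete."""
    return f'{signature}\n    """{docstring}"""\n'
```

For example, `qa_prompt("What is a context window?")` yields a two-line prompt whose final line, `Output:`, is where the model is expected to continue.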

Possible Applications of Phi 2.7B

With 2.7 billion parameters, Phi-2.7B could be applied to educational tools, creative writing assistance, or basic automation workflows, though each use requires careful validation. Its QA format might improve interactive learning platforms, while its chat format could support conversational interfaces for non-critical tasks. The code format might help with simple coding tutorials or script generation, but this also needs thorough testing. Because the model’s design prioritizes common-sense reasoning, it may also handle tasks such as content summarization or language translation, though these applications must be rigorously assessed before deployment.

- qa format

- chat format

- code format

Quantized Versions & Hardware Requirements of Phi 2.7B

In its q4 quantized version, Phi-2.7B calls for a GPU with at least 12GB of VRAM and 32GB of system memory to run efficiently, which puts it within reach of mid-range hardware. This medium level of quantization balances precision against performance and allows deployment on consumer-grade GPUs without excessive resource demands. Actual requirements vary with the workload, so these figures may need adjusting.

- fp16, q2, q3, q4, q5, q6, q8
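
As a rough way to compare the quantization levels listed above, the sketch below estimates weight storage alone as parameters times bits per weight. It assumes nominal bit widths (for example, exactly 4 bits for q4); real quantized files carry extra scale and metadata overhead, and the estimate ignores the KV cache, activations, and runtime buffers, so it is not a substitute for the hardware guidance above. The names `NOMINAL_BITS` and `estimate_weight_gb` are hypothetical.

```python
PARAMS = 2.7e9  # parameter count stated for the model

# Nominal bits per weight for each listed variant (an assumption; actual
# quantization schemes store additional per-block scales and metadata).
NOMINAL_BITS = {"fp16": 16, "q8": 8, "q6": 6, "q5": 5, "q4": 4, "q3": 3, "q2": 2}


def estimate_weight_gb(quant: str, params: float = PARAMS) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    bits = NOMINAL_BITS[quant]
    return params * bits / 8 / 1e9
```

Under these assumptions the weights shrink in direct proportion to the bit width, so q4 needs about a quarter of the fp16 weight storage.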

Conclusion

Phi-2.7B is a 2.7-billion-parameter language model developed by Microsoft for common-sense reasoning and language understanding, released under the MIT License. It accepts QA, chat, and code prompt formats but is not optimized for production use. Its context length is 2k tokens, and the q4 quantized version calls for 12GB of VRAM for efficient deployment.

References

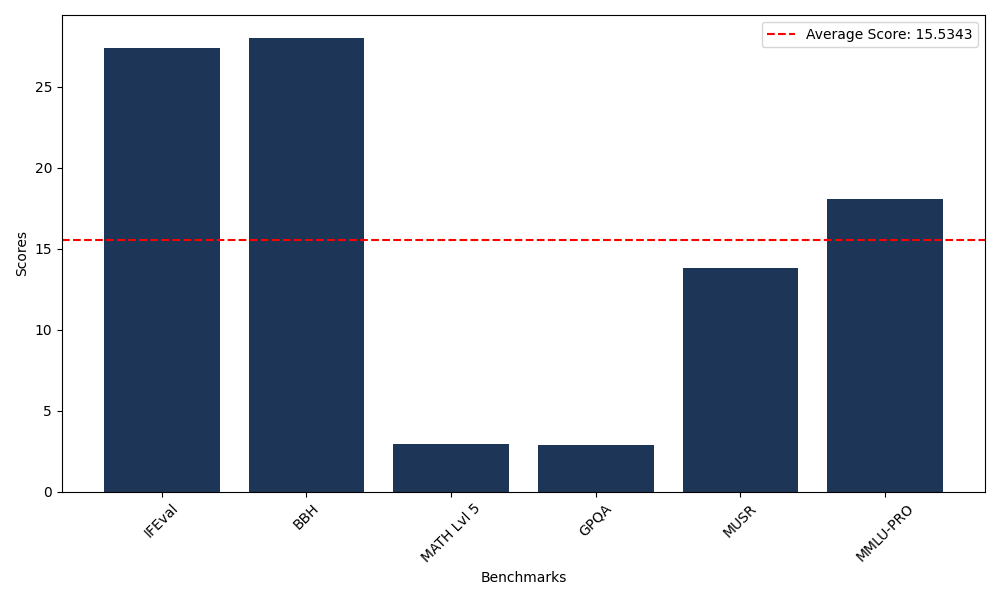

Benchmarks

| Benchmark Name | Score |
|---|---|
| Instruction-Following Evaluation (IFEval) | 27.39 |
| BIG-Bench Hard (BBH) | 28.04 |
| MATH, Level 5 problems (MATH Lvl 5) | 2.95 |
| Graduate-Level Google-Proof Q&A (GPQA) | 2.91 |
| Multistep Soft Reasoning (MuSR) | 13.84 |
| Massive Multitask Language Understanding, Pro (MMLU-Pro) | 18.09 |